2x5 Scalable Cluster Tutorial


{{howto_header}}

{{warning|1=This document is old, abandoned and very out of date. DON'T USE ANYTHING HERE! Consider it only as historical note taking.}}

= The Design =

== Storage ==

Storage, high-level:

<source lang="text">

[ Storage Cluster ] | |||

_____________________________ _____________________________ | |||

| [ an-node01 ] | | [ an-node02 ] | | |||

| _____ _____ | | _____ _____ | | |||

| ( HDD ) ( SSD ) | | ( SSD ) ( HDD ) | | |||

| (_____) (_____) __________| |__________ (_____) (_____) | | |||

| | | | Storage =--\ /--= Storage | | | | | |||

| | \----| Network || | | || Network |----/ | | | |||

| \-------------|_________|| | | ||_________|-------------/ | | |||

|_____________________________| | | |_____________________________| | |||

__|_____|__ | |||

| HDD LUN | | |||

| SSD LUN |

|___________| | |||

| | |||

_____|_____ | |||

| Floating | | |||

| SAN IP | | |||

[ VM Cluster ] |___________| | |||

______________________________ | | | | | ______________________________ | |||

| [ an-node03 ] | | | | | | | [ an-node06 ] | | |||

| _________ | | | | | | | _________ | | |||

| | [ vmA ] | | | | | | | | | [ vmJ ] | | | |||

| | _____ | | | | | | | | | _____ | | | |||

| | (_hdd_)-=----\ | | | | | | | /----=-(_hdd_) | | | |||

| |_________| | | | | | | | | | |_________| | | |||

| _________ | | | | | | | | | _________ | | |||

| | [ vmB ] | | | | | | | | | | | [ vmK ] | | | |||

| | _____ | | | | | | | | | | | _____ | | | |||

| | (_hdd_)-=--\ | __________| | | | | | |__________ | /--=-(_hdd_) | | | |||

| |_________| | \--| Storage =--/ | | | \--= Storage |--/ | |_________| | | |||

| _________ \----| Network || | | | || Network |----/ _________ | | |||

| | [ vmC ] | /----|_________|| | | | ||_________|----\ | [ vmL ] | | | |||

| | _____ | | | | | | | | | _____ | | | |||

| | (_hdd_)-=--/ | | | | | \--=-(_hdd_) | | | |||

| |_________| | | | | | |_________| | | |||

|______________________________| | | | |______________________________| | |||

______________________________ | | | ______________________________ | |||

| [ an-node04 ] | | | | | [ an-node07 ] | | |||

| _________ | | | | | _________ | | |||

| | [ vmD ] | | | | | | | [ vmM ] | | | |||

| | _____ | | | | | | | _____ | | | |||

| | (_hdd_)-=----\ | | | | | /----=-(_hdd_) | | | |||

| |_________| | | | | | | | |_________| | | |||

| _________ | | | | | | | _________ | | |||

| | [ vmE ] | | | | | | | | | [ vmN ] | | | |||

| | _____ | | | | | | | | | _____ | | | |||

| | (_hdd_)-=--\ | __________| | | | |__________ | /--=-(_hdd_) | | | |||

| |_________| | \--| Storage =----/ | \----= Storage |--/ | |_________| | | |||

| _________ \----| Network || | || Network |----/ _________ | | |||

| | [ vmF ] | /----|_________|| | ||_________|----\ | [ vmO ] | | | |||

| | _____ | | | | | | | _____ | | | |||

| | (_hdd_)-=--+ | | | \--=-(_hdd_) | | | |||

| | (_ssd_)-=--/ | | | |_________| | | |||

| |_________| | | | | | |||

|______________________________| | |______________________________| | |||

______________________________ | | |||

| [ an-node05 ] | | | |||

| _________ | | | |||

| | [ vmG ] | | | | |||

| | _____ | | | | |||

| | (_hdd_)-=----\ | | | |||

| |_________| | | | | |||

| _________ | | | | |||

| | [ vmH ] | | | | | |||

| | _____ | | | | | |||

| | (_hdd_)-=--\ | | | | |||

| | (_ssd_)-=--+ | __________| |

| |_________| | \--| Storage =------/ | |||

| _________ \----| Network || | |||

| | [ vmI ] | /----|_________|| | |||

| | _____ | | | | |||

| | (_hdd_)-=--/ | | |||

| |_________| | | |||

|______________________________| | |||

</source>

== Long View ==

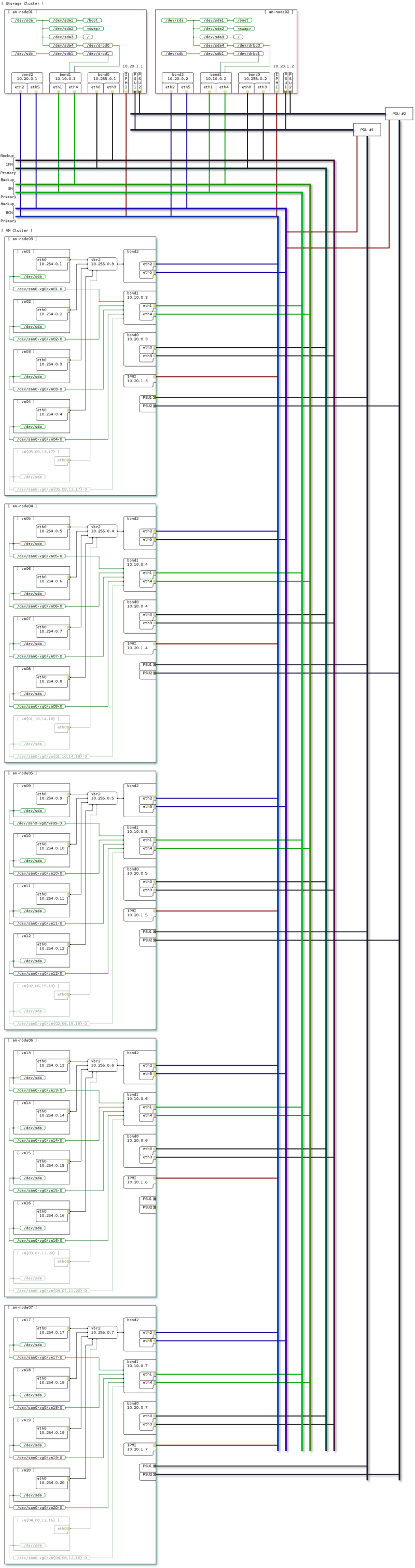

{{note|1=Yes, this is a big graphic, but this is also a big project. I am no artist though, and any help making this clearer is greatly appreciated!}}

[[Image:2x5_the-plan_01.png|center|thumb|800px|The planned network. This shows separate IPMI and full redundancy throughout the cluster. This is the way a production cluster should be built, but is not expected for dev/test clusters.]]

== Failure Mapping ==

VM cluster: guest VM failure migration planning.

* Each node can host 5 VMs @ 2GB/VM.

* This is an N-1 cluster with five nodes; 20 VMs total.

<source lang="text">
          |    All    | an-node03 | an-node04 | an-node05 | an-node06 | an-node07 |
          |  on-line  |   down    |   down    |   down    |   down    |   down    |
----------+-----------+-----------+-----------+-----------+-----------+-----------+
an-node03 |   vm01    |    --     |   vm01    |   vm01    |   vm01    |   vm01    |
          |   vm02    |    --     |   vm02    |   vm02    |   vm02    |   vm02    |
          |   vm03    |    --     |   vm03    |   vm03    |   vm03    |   vm03    |
          |   vm04    |    --     |   vm04    |   vm04    |   vm04    |   vm04    |
          |    --     |    --     |   vm05    |   vm09    |   vm13    |   vm17    |
----------|-----------|-----------|-----------|-----------|-----------|-----------|
an-node04 |   vm05    |   vm05    |    --     |   vm05    |   vm05    |   vm05    |
          |   vm06    |   vm06    |    --     |   vm06    |   vm06    |   vm06    |
          |   vm07    |   vm07    |    --     |   vm07    |   vm07    |   vm07    |
          |   vm08    |   vm08    |    --     |   vm08    |   vm08    |   vm08    |
          |    --     |   vm01    |    --     |   vm10    |   vm14    |   vm18    |
----------|-----------|-----------|-----------|-----------|-----------|-----------|
an-node05 |   vm09    |   vm09    |   vm09    |    --     |   vm09    |   vm09    |
          |   vm10    |   vm10    |   vm10    |    --     |   vm10    |   vm10    |
          |   vm11    |   vm11    |   vm11    |    --     |   vm11    |   vm11    |
          |   vm12    |   vm12    |   vm12    |    --     |   vm12    |   vm12    |
          |    --     |   vm02    |   vm06    |    --     |   vm15    |   vm19    |
----------|-----------|-----------|-----------|-----------|-----------|-----------|
an-node06 |   vm13    |   vm13    |   vm13    |   vm13    |    --     |   vm13    |
          |   vm14    |   vm14    |   vm14    |   vm14    |    --     |   vm14    |
          |   vm15    |   vm15    |   vm15    |   vm15    |    --     |   vm15    |
          |   vm16    |   vm16    |   vm16    |   vm16    |    --     |   vm16    |
          |    --     |   vm03    |   vm07    |   vm11    |    --     |   vm20    |
----------|-----------|-----------|-----------|-----------|-----------|-----------|
an-node07 |   vm17    |   vm17    |   vm17    |   vm17    |   vm17    |    --     |
          |   vm18    |   vm18    |   vm18    |   vm18    |   vm18    |    --     |
          |   vm19    |   vm19    |   vm19    |   vm19    |   vm19    |    --     |
          |   vm20    |   vm20    |   vm20    |   vm20    |   vm20    |    --     |
          |    --     |   vm04    |   vm08    |   vm12    |   vm16    |    --     |
----------+-----------+-----------+-----------+-----------+-----------+-----------+
</source>
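The table follows a regular pattern: each VM node is home to four VMs, and each of those four fails over to a different one of the other four nodes, taken in ascending order. A minimal sketch of that rule in shell is shown here, purely as an illustration. The function name <span class="code">vm_placement</span> is made up for this example and is not part of any cluster tool, and it assumes the VM numbering used in the table.

```shell
#!/bin/sh
# vm_placement N: print "<home node> <failover node>" for vmN (N = 1..20),
# following the pattern in the failure-mapping table. Hypothetical helper.
# Pass N without a leading zero (8, not 08).
vm_placement() {
    vm=$1
    home=$(( (vm - 1) / 4 + 3 ))   # vm01-04 -> an-node03 ... vm17-20 -> an-node07
    slot=$(( (vm - 1) % 4 ))       # which of the four other VM nodes is the backup
    idx=0
    for node in 3 4 5 6 7; do
        [ "$node" -eq "$home" ] && continue
        if [ "$idx" -eq "$slot" ]; then
            printf 'an-node%02d an-node%02d\n' "$home" "$node"
            return 0
        fi
        idx=$(( idx + 1 ))
    done
    return 1
}

# Print the full map, one VM per line.
i=1
while [ "$i" -le 20 ]; do
    printf 'vm%02d: %s\n' "$i" "$(vm_placement "$i")"
    i=$(( i + 1 ))
done
```

Because every surviving node picks up exactly one extra VM when a peer dies, each node needs headroom for five VMs even though it normally runs four.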

== Cluster Overview ==

{{note|1=This is not programmatically accurate!}}

This is meant to show, at a logical level, how the parts of a cluster work together. It is the first draft and is likely defective in terrible ways.

<source lang="text">

[ Resource Management ]

___________ ___________ | |||

| | | | | |||

| Service A | | Service B | | |||

|___________| |___________| | |||

| | | | |||

__|_____|__ ___|_______________ | |||

| | | | | |||

| RGManager | | Clustered Storage |================================================. | |||

|___________| |___________________| | | |||

| | | | |||

|__________________|______________ | | |||

| \ | | |||

_________ ____|____ | | | |||

| | | | | | | |||

/------| Fencing |----| Locking | | | | |||

| |_________| |_________| | | | |||

_|___________|_____________|______________________|__________________________________________|_____ | |||

| | | | | | |||

| ______|_____ ____|___ | | | |||

| | | | | | | | |||

| | Membership | | Quorum | | | | |||

| |____________| |________| | | | |||

| |____________| | | | |||

| __|__ | | | |||

| / \ | | | |||

| { Totem } | | | |||

| \_____/ | | | |||

| __________________|_______________________|_______________ ______________ | | |||

| |-----------|-----------|----------------|-----------------|--------------| | | |||

| ___|____ ___|____ ___|____ ___|____ _____|_____ _____|_____ __|___ | |||

| | | | | | | | | | | | | | | | |||

| | Node 1 | | Node 2 | | Node 3 | ... | Node N | | Storage 1 |==| Storage 2 |==| DRBD | | |||

| |________| |________| |________| |________| |___________| |___________| |______| | |||

\_____|___________|___________|________________|_________________|______________| | |||

[ Cluster Communication ] | |||

</source>

== Network IPs ==

<source lang="text">
SAN: 10.10.1.1

Node:
          |     IFN     |     SN     |    BCN    |   IPMI    |
----------+-------------+------------+-----------+-----------+
an-node01 | 10.255.0.1  | 10.10.0.1  | 10.20.0.1 | 10.20.1.1 |
an-node02 | 10.255.0.2  | 10.10.0.2  | 10.20.0.2 | 10.20.1.2 |
an-node03 | 10.255.0.3  | 10.10.0.3  | 10.20.0.3 | 10.20.1.3 |
an-node04 | 10.255.0.4  | 10.10.0.4  | 10.20.0.4 | 10.20.1.4 |
an-node05 | 10.255.0.5  | 10.10.0.5  | 10.20.0.5 | 10.20.1.5 |
an-node06 | 10.255.0.6  | 10.10.0.6  | 10.20.0.6 | 10.20.1.6 |
an-node07 | 10.255.0.7  | 10.10.0.7  | 10.20.0.7 | 10.20.1.7 |
----------+-------------+------------+-----------+-----------+

Aux Equipment:
          |     BCN     |
----------+-------------+
pdu1      | 10.20.2.1   |
pdu2      | 10.20.2.2   |
switch1   | 10.20.2.3   |
switch2   | 10.20.2.4   |
ups1      | 10.20.2.5   |
ups2      | 10.20.2.6   |
----------+-------------+

VMs:
          |     VMN     |
----------+-------------+
vm01      | 10.254.0.1  |
vm02      | 10.254.0.2  |
vm03      | 10.254.0.3  |
vm04      | 10.254.0.4  |
vm05      | 10.254.0.5  |
vm06      | 10.254.0.6  |
vm07      | 10.254.0.7  |
vm08      | 10.254.0.8  |
vm09      | 10.254.0.9  |
vm10      | 10.254.0.10 |
vm11      | 10.254.0.11 |
vm12      | 10.254.0.12 |
vm13      | 10.254.0.13 |
vm14      | 10.254.0.14 |
vm15      | 10.254.0.15 |
vm16      | 10.254.0.16 |
vm17      | 10.254.0.17 |
vm18      | 10.254.0.18 |
vm19      | 10.254.0.19 |
vm20      | 10.254.0.20 |
----------+-------------+
</source>
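The node addresses are regular enough to derive from the node number alone: the last octet is the node number on every network. As a rough sketch, assuming only the scheme in the table, the helper below prints the four addresses for a node. The name <span class="code">node_ips</span> is invented for this example.

```shell
#!/bin/sh
# node_ips N: print the IFN, SN, BCN and IPMI addresses for an-node0N,
# following the addressing table. Hypothetical helper, not a cluster tool.
node_ips() {
    n=$1
    printf 'an-node%02d %s %s %s %s\n' "$n" \
        "10.255.0.$n" "10.10.0.$n" "10.20.0.$n" "10.20.1.$n"
}

# List all seven nodes.
i=1
while [ "$i" -le 7 ]; do
    node_ips "$i"
    i=$(( i + 1 ))
done
```

Output like this could be used as a starting point for <span class="code">/etc/hosts</span> entries, though the host name suffixes to use for each network are not specified here and would need to be chosen.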

= Install The Cluster Software =

If you are using Red Hat Enterprise Linux, you will need to add the <span class="code">RHEL Server Optional (v. 6 64-bit x86_64)</span> channel for each node in your cluster. You can do this in [[RHN]] by going to your subscription management page, clicking on each server, clicking on "Alter Channel Subscriptions", enabling the <span class="code">RHEL Server Optional (v. 6 64-bit x86_64)</span> channel and then clicking on "Change Subscription".

The actual installation is simple; just use <span class="code">yum</span> to install <span class="code">cman</span> and the related packages.

<source lang="bash">
yum install cman fence-agents rgmanager resource-agents lvm2-cluster gfs2-utils python-virtinst libvirt qemu-kvm-tools qemu-kvm virt-manager virt-viewer virtio-win
</source>

== Initial Config ==

Everything uses <span class="code">ricci</span>, which itself needs to have a password set. I set this to match <span class="code">root</span>.

'''Both''':

<source lang="bash">
passwd ricci
</source>

<source lang="text">
New password:
Retype new password:
passwd: all authentication tokens updated successfully.
</source>

With these decisions and the information gathered, here is what our first <span class="code">/etc/cluster/cluster.conf</span> file will look like.

<source lang="bash">
touch /etc/cluster/cluster.conf
vim /etc/cluster/cluster.conf
</source>

<source lang="xml">
<?xml version="1.0"?>
<cluster config_version="1" name="an-cluster">
	<cman expected_votes="1" two_node="1" />
	<clusternodes>
		<clusternode name="an-node01.alteeve.ca" nodeid="1">
			<fence>
				<method name="ipmi">
					<device action="reboot" name="ipmi_an01" />
				</method>
				<method name="pdu">
					<device action="reboot" name="pdu1" port="1" />
					<device action="reboot" name="pdu2" port="1" />
				</method>
			</fence>
		</clusternode>
		<clusternode name="an-node02.alteeve.ca" nodeid="2">
			<fence>
				<method name="ipmi">
					<device action="reboot" name="ipmi_an02" />
				</method>
				<method name="pdu">
					<device action="reboot" name="pdu1" port="2" />
					<device action="reboot" name="pdu2" port="2" />
				</method>
			</fence>
		</clusternode>
		<clusternode name="an-node03.alteeve.ca" nodeid="3">
			<fence>
				<method name="ipmi">
					<device action="reboot" name="ipmi_an03" />
				</method>
				<method name="pdu">
					<device action="reboot" name="pdu1" port="3" />
					<device action="reboot" name="pdu2" port="3" />
				</method>
			</fence>
		</clusternode>
		<clusternode name="an-node04.alteeve.ca" nodeid="4">
			<fence>
				<method name="ipmi">
					<device action="reboot" name="ipmi_an04" />
				</method>
				<method name="pdu">
					<device action="reboot" name="pdu1" port="4" />
					<device action="reboot" name="pdu2" port="4" />
				</method>
			</fence>
		</clusternode>
		<clusternode name="an-node05.alteeve.ca" nodeid="5">
			<fence>
				<method name="ipmi">
					<device action="reboot" name="ipmi_an05" />
				</method>
				<method name="pdu">
					<device action="reboot" name="pdu1" port="5" />
					<device action="reboot" name="pdu2" port="5" />
				</method>
			</fence>
		</clusternode>
		<clusternode name="an-node06.alteeve.ca" nodeid="6">
			<fence>
				<method name="ipmi">
					<device action="reboot" name="ipmi_an06" />
				</method>
				<method name="pdu">
					<device action="reboot" name="pdu1" port="6" />
					<device action="reboot" name="pdu2" port="6" />
				</method>
			</fence>
		</clusternode>
		<clusternode name="an-node07.alteeve.ca" nodeid="7">
			<fence>
				<method name="ipmi">
					<device action="reboot" name="ipmi_an07" />
				</method>
				<method name="pdu">
					<device action="reboot" name="pdu1" port="7" />
					<device action="reboot" name="pdu2" port="7" />
				</method>
			</fence>
		</clusternode>
	</clusternodes>

	<fencedevices>
		<fencedevice agent="fence_ipmilan" ipaddr="an-node01.ipmi" login="root" name="ipmi_an01" passwd="secret" />
		<fencedevice agent="fence_ipmilan" ipaddr="an-node02.ipmi" login="root" name="ipmi_an02" passwd="secret" />
		<fencedevice agent="fence_ipmilan" ipaddr="an-node03.ipmi" login="root" name="ipmi_an03" passwd="secret" />
		<fencedevice agent="fence_ipmilan" ipaddr="an-node04.ipmi" login="root" name="ipmi_an04" passwd="secret" />
		<fencedevice agent="fence_ipmilan" ipaddr="an-node05.ipmi" login="root" name="ipmi_an05" passwd="secret" />
		<fencedevice agent="fence_ipmilan" ipaddr="an-node06.ipmi" login="root" name="ipmi_an06" passwd="secret" />
		<fencedevice agent="fence_ipmilan" ipaddr="an-node07.ipmi" login="root" name="ipmi_an07" passwd="secret" />
		<fencedevice agent="fence_apc_snmp" ipaddr="pdu1.alteeve.ca" name="pdu1" />
		<fencedevice agent="fence_apc_snmp" ipaddr="pdu2.alteeve.ca" name="pdu2" />
	</fencedevices>
	<fence_daemon post_join_delay="30" />
	<totem rrp_mode="none" secauth="off" />
	<rm>
		<resources>
			<ip address="10.10.1.1" monitor_link="on" />
			<script file="/etc/init.d/tgtd" name="tgtd" />
			<script file="/etc/init.d/drbd" name="drbd" />
			<script file="/etc/init.d/clvmd" name="clvmd" />
			<script file="/etc/init.d/gfs2" name="gfs2" />
			<script file="/etc/init.d/libvirtd" name="libvirtd" />
		</resources>

		<failoverdomains>
			<!-- Used for storage -->
			<!-- SAN Nodes -->
			<failoverdomain name="an1_only" nofailback="1" ordered="0" restricted="1">
				<failoverdomainnode name="an-node01.alteeve.ca" />
			</failoverdomain>
			<failoverdomain name="an2_only" nofailback="1" ordered="0" restricted="1">
				<failoverdomainnode name="an-node02.alteeve.ca" />
			</failoverdomain>
			<!-- VM Nodes -->
			<failoverdomain name="an3_only" nofailback="1" ordered="0" restricted="1">
				<failoverdomainnode name="an-node03.alteeve.ca" />
			</failoverdomain>
			<failoverdomain name="an4_only" nofailback="1" ordered="0" restricted="1">
				<failoverdomainnode name="an-node04.alteeve.ca" />
			</failoverdomain>
			<failoverdomain name="an5_only" nofailback="1" ordered="0" restricted="1">
				<failoverdomainnode name="an-node05.alteeve.ca" />
			</failoverdomain>
			<failoverdomain name="an6_only" nofailback="1" ordered="0" restricted="1">
				<failoverdomainnode name="an-node06.alteeve.ca" />
			</failoverdomain>
			<failoverdomain name="an7_only" nofailback="1" ordered="0" restricted="1">
				<failoverdomainnode name="an-node07.alteeve.ca" />
			</failoverdomain>
			<!-- Domain for the SAN -->
			<failoverdomain name="an1_primary" nofailback="1" ordered="1" restricted="0">
				<failoverdomainnode name="an-node01.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node02.alteeve.ca" priority="2" />
			</failoverdomain>
			<!-- Domains for VMs running primarily on an-node03 -->
			<failoverdomain name="an3_an4" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node03.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node04.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an3_an5" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node03.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node05.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an3_an6" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node03.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node06.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an3_an7" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node03.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node07.alteeve.ca" priority="2" />
			</failoverdomain>
			<!-- Domains for VMs running primarily on an-node04 -->
			<failoverdomain name="an4_an3" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node04.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node03.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an4_an5" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node04.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node05.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an4_an6" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node04.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node06.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an4_an7" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node04.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node07.alteeve.ca" priority="2" />
			</failoverdomain>
			<!-- Domains for VMs running primarily on an-node05 -->
			<failoverdomain name="an5_an3" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node05.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node03.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an5_an4" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node05.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node04.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an5_an6" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node05.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node06.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an5_an7" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node05.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node07.alteeve.ca" priority="2" />
			</failoverdomain>
			<!-- Domains for VMs running primarily on an-node06 -->
			<failoverdomain name="an6_an3" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node06.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node03.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an6_an4" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node06.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node04.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an6_an5" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node06.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node05.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an6_an7" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node06.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node07.alteeve.ca" priority="2" />
			</failoverdomain>
			<!-- Domains for VMs running primarily on an-node07 -->
			<failoverdomain name="an7_an3" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node07.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node03.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an7_an4" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node07.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node04.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an7_an5" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node07.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node05.alteeve.ca" priority="2" />
			</failoverdomain>
			<failoverdomain name="an7_an6" nofailback="1" ordered="1" restricted="1">
				<failoverdomainnode name="an-node07.alteeve.ca" priority="1" />
				<failoverdomainnode name="an-node06.alteeve.ca" priority="2" />
			</failoverdomain>
		</failoverdomains>

		<!-- SAN Services -->
		<service autostart="1" domain="an1_only" exclusive="0" max_restarts="0" name="an1_storage" recovery="restart" restart_expire_time="0">
			<script ref="drbd">
				<script ref="clvmd">
					<script ref="tgtd" />
				</script>
			</script>
		</service>
		<service autostart="1" domain="an2_only" exclusive="0" max_restarts="0" name="an2_storage" recovery="restart" restart_expire_time="0">
			<script ref="drbd">
				<script ref="clvmd">
					<script ref="tgtd" />
				</script>
			</script>
		</service>
		<service autostart="1" domain="an1_primary" name="san_ip" recovery="relocate">
			<ip ref="10.10.1.1" />
		</service>
		<!-- VM Storage services. -->
		<service autostart="1" domain="an3_only" exclusive="0" max_restarts="0" name="an3_storage" recovery="restart" restart_expire_time="0">
			<script ref="drbd">
				<script ref="clvmd">
					<script ref="gfs2">
						<script ref="libvirtd" />
					</script>
				</script>
			</script>
		</service>
		<service autostart="1" domain="an4_only" exclusive="0" max_restarts="0" name="an4_storage" recovery="restart" restart_expire_time="0">
			<script ref="drbd">
				<script ref="clvmd">
					<script ref="gfs2">
						<script ref="libvirtd" />
					</script>
				</script>
			</script>
		</service>
		<service autostart="1" domain="an5_only" exclusive="0" max_restarts="0" name="an5_storage" recovery="restart" restart_expire_time="0">
			<script ref="drbd">
				<script ref="clvmd">
					<script ref="gfs2">
						<script ref="libvirtd" />
					</script>
				</script>
			</script>
		</service>
		<service autostart="1" domain="an6_only" exclusive="0" max_restarts="0" name="an6_storage" recovery="restart" restart_expire_time="0">
			<script ref="drbd">
				<script ref="clvmd">
					<script ref="gfs2">
						<script ref="libvirtd" />
					</script>
				</script>
			</script>
		</service>
		<service autostart="1" domain="an7_only" exclusive="0" max_restarts="0" name="an7_storage" recovery="restart" restart_expire_time="0">
			<script ref="drbd">
				<script ref="clvmd">
					<script ref="gfs2">
						<script ref="libvirtd" />
					</script>
				</script>
			</script>
		</service>

		<!-- VM Services -->
		<!-- VMs running primarily on an-node03 -->
		<vm name="vm01" domain="an3_an4" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm02" domain="an3_an5" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm03" domain="an3_an6" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm04" domain="an3_an7" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<!-- VMs running primarily on an-node04 -->
		<vm name="vm05" domain="an4_an3" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm06" domain="an4_an5" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm07" domain="an4_an6" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm08" domain="an4_an7" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<!-- VMs running primarily on an-node05 -->
		<vm name="vm09" domain="an5_an3" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm10" domain="an5_an4" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm11" domain="an5_an6" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm12" domain="an5_an7" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<!-- VMs running primarily on an-node06 -->
		<vm name="vm13" domain="an6_an3" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm14" domain="an6_an4" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm15" domain="an6_an5" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm16" domain="an6_an7" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<!-- VMs running primarily on an-node07 -->
		<vm name="vm17" domain="an7_an3" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm18" domain="an7_an4" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm19" domain="an7_an5" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
		<vm name="vm20" domain="an7_an6" path="/shared/definitions/" autostart="0" exclusive="0" recovery="restart" max_restarts="2" restart_expire_time="600"/>
	</rm>
</cluster>
</source>

Save the file, then validate it. If it fails, address the errors and try again.

<source lang="bash">
ip addr list | grep <ip>
rg_test test /etc/cluster/cluster.conf
ccs_config_validate
</source>

<source lang="text">
Configuration validates
</source>
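With this many failover domains it is easy to mistype a domain name in a <span class="code"><vm></span> or <span class="code"><service></span> entry, for example writing <span class="code">an03_an04</span> where only <span class="code">an3_an4</span> is defined. As a rough extra check alongside the validators above, a sketch like the following lists any referenced domain that has no matching <span class="code"><failoverdomain></span>. The function name <span class="code">undefined_domains</span> is invented for this example, and it relies on plain text matching rather than real XML parsing, so treat it as a convenience only.

```shell
#!/bin/sh
# undefined_domains FILE: print every domain="..." used by a <vm> or <service>
# that is not declared as a <failoverdomain name="...">. Hypothetical helper;
# it assumes one element per line and domain names without spaces.
undefined_domains() {
    conf=$1
    # Names declared as failover domains (the trailing space in the pattern
    # keeps <failoverdomainnode ...> lines from matching).
    defined=$(grep -o '<failoverdomain name="[^"]*"' "$conf" \
        | sed 's/.*name="//; s/"$//' | sort -u)
    # Names referenced by services and VMs.
    referenced=$(grep -E '<(vm|service) ' "$conf" \
        | grep -o ' domain="[^"]*"' \
        | sed 's/.*domain="//; s/"$//' | sort -u)
    for d in $referenced; do
        printf '%s\n' "$defined" | grep -qxF -- "$d" || printf '%s\n' "$d"
    done
}

# Example (prints nothing when every reference resolves):
#   undefined_domains /etc/cluster/cluster.conf
```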

Push it to the other node:

<source lang="bash">
rsync -av /etc/cluster/cluster.conf root@an-node02:/etc/cluster/
</source>

<source lang="text">
sending incremental file list
cluster.conf

sent 781 bytes  received 31 bytes  541.33 bytes/sec
total size is 701  speedup is 0.86
</source>
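The command above copies the file to <span class="code">an-node02</span> only. Since the configuration names seven nodes, the same file presumably has to reach the others as well. A minimal sketch of doing that in a loop follows. It assumes the short host names <span class="code">an-node03</span> through <span class="code">an-node07</span> resolve the same way <span class="code">an-node02</span> does, and the function name and the <span class="code">DRY_RUN</span> switch are made up for this example. By default it only prints the commands it would run.

```shell
#!/bin/sh
# push_cluster_conf: copy cluster.conf from this node to the other six nodes.
# Hypothetical helper. With DRY_RUN unset or set to 1 it only prints the
# rsync commands; set DRY_RUN=0 to actually run them.
push_cluster_conf() {
    src=/etc/cluster/cluster.conf
    for n in 02 03 04 05 06 07; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "rsync -av $src root@an-node$n:/etc/cluster/"
        else
            rsync -av "$src" "root@an-node$n:/etc/cluster/"
        fi
    done
}

push_cluster_conf
```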

Start:

'''''DO NOT PROCEED UNTIL YOUR cluster.conf FILE VALIDATES!'''''

Unless you have it perfect, your cluster will fail.

Once it validates, proceed.

== Starting The Cluster For The First Time ==

By default, if you start one node only and you've enabled the <span class="code"><cman two_node="1" expected_votes="1"/></span> option as we have done, the lone server will effectively gain quorum. It will try to connect to the cluster, but there won't be a cluster to connect to, so it will fence the other node after a timeout period. This timeout is <span class="code">6</span> seconds by default.

For now, we will leave the default as it is. If you're interested in changing it though, the argument you are looking for is <span class="code">[[RHCS v3 cluster.conf#post_join_delay|post_join_delay]]</span>.

This behaviour means that we'll want to start both nodes well within six seconds of one another, lest the slower one get needlessly fenced.

'''Left off here'''

Note to help minimize dual-fences:

* You could add <span class="code">FENCED_OPTS="-f 5"</span> to <span class="code">/etc/sysconfig/cman</span> on ''one'' node (iLO fence devices may need this).
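If you do try the <span class="code">FENCED_OPTS</span> idea from the note above, the edit can be scripted so it is not applied twice. This is only a sketch. The function name <span class="code">add_fenced_opts</span> is invented here, and it deliberately refuses to touch a file that already sets <span class="code">FENCED_OPTS</span> rather than guessing how to merge the values.

```shell
#!/bin/sh
# add_fenced_opts FILE OPTS: append FENCED_OPTS="OPTS" to FILE unless the
# file already sets FENCED_OPTS. Hypothetical helper, not part of cman.
add_fenced_opts() {
    file=$1
    opts=$2
    if grep -q '^FENCED_OPTS=' "$file" 2>/dev/null; then
        echo "FENCED_OPTS is already set in $file; not touching it" >&2
        return 1
    fi
    printf 'FENCED_OPTS="%s"\n' "$opts" >> "$file"
}

# Example, to be run on one node only:
#   add_fenced_opts /etc/sysconfig/cman "-f 5"
```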

== DRBD Config ==

Install from source:

'''Both''':

<source lang="bash">
# Obliterate peer - fence via cman
wget -c https://alteeve.ca/files/an-cluster/sbin/obliterate-peer.sh -O /sbin/obliterate-peer.sh
chmod a+x /sbin/obliterate-peer.sh
ls -lah /sbin/obliterate-peer.sh

# Download, compile and install DRBD
wget -c http://oss.linbit.com/drbd/8.3/drbd-8.3.11.tar.gz
tar -xvzf drbd-8.3.11.tar.gz
cd drbd-8.3.11
./configure \
   --prefix=/usr \
   --localstatedir=/var \
   --sysconfdir=/etc \
   --with-utils \
   --with-km \
   --with-udev \
   --with-pacemaker \
   --with-rgmanager \
   --with-bashcompletion
make
make install
chkconfig --add drbd
chkconfig drbd off
</source>

== Configure ==

'''<span class="code">an-node01</span>''':

<source lang="bash">
# Configure DRBD's global values.
cp /etc/drbd.d/global_common.conf /etc/drbd.d/global_common.conf.orig
vim /etc/drbd.d/global_common.conf
diff -u /etc/drbd.d/global_common.conf.orig /etc/drbd.d/global_common.conf
</source>

<source lang="diff">
--- /etc/drbd.d/global_common.conf.orig	2011-08-01 21:58:46.000000000 -0400
+++ /etc/drbd.d/global_common.conf	2011-08-01 23:18:27.000000000 -0400
@@ -15,24 +15,35 @@
 		# out-of-sync "/usr/lib/drbd/notify-out-of-sync.sh root";
 		# before-resync-target "/usr/lib/drbd/snapshot-resync-target-lvm.sh -p 15 -- -c 16k";
 		# after-resync-target /usr/lib/drbd/unsnapshot-resync-target-lvm.sh;
+		fence-peer "/sbin/obliterate-peer.sh";
 	}
 
 	startup {
 		# wfc-timeout degr-wfc-timeout outdated-wfc-timeout wait-after-sb
+		become-primary-on both;
+		wfc-timeout 300;
+		degr-wfc-timeout 120;
 	}
 
 	disk {
 		# on-io-error fencing use-bmbv no-disk-barrier no-disk-flushes
 		# no-disk-drain no-md-flushes max-bio-bvecs
+		fencing resource-and-stonith;
 	}
 
 	net {
 		# sndbuf-size rcvbuf-size timeout connect-int ping-int ping-timeout max-buffers
 		# max-epoch-size ko-count allow-two-primaries cram-hmac-alg shared-secret
 		# after-sb-0pri after-sb-1pri after-sb-2pri data-integrity-alg no-tcp-cork
+		allow-two-primaries;
+		after-sb-0pri discard-zero-changes;
+		after-sb-1pri discard-secondary;
+		after-sb-2pri disconnect;
 	}
 
 	syncer {
 		# rate after al-extents use-rle cpu-mask verify-alg csums-alg
+		# This should be no more than 30% of the maximum sustainable write speed.
+		rate 20M;
 	}
 }
</source>

<source lang="bash"> | |||

vim /etc/drbd.d/r0.res | |||

</source>

<source lang="text">

resource r0 { | |||

device /dev/drbd0; | |||

meta-disk internal; | |||

on an-node01.alteeve.ca { | |||

address 192.168.2.71:7789; | |||

disk /dev/sda5; | |||

} | |||

on an-node02.alteeve.ca { | |||

address 192.168.2.72:7789; | |||

disk /dev/sda5; | |||

} | |||

} | |||

</source>

<source lang="bash">

cp /etc/drbd.d/r0.res /etc/drbd.d/r1.res | |||

vim /etc/drbd.d/r1.res | |||

</source> | |||

<source lang="text"> | |||

resource r1 { | |||

device /dev/drbd1; | |||

meta-disk internal; | |||

on an-node01.alteeve.ca { | |||

address 192.168.2.71:7790; | |||

disk /dev/sdb1; | |||

} | |||

on an-node02.alteeve.ca { | |||

address 192.168.2.72:7790; | |||

disk /dev/sdb1; | |||

} | |||

} | |||

</source>

{{note|1=If you have multiple DRBD resources on one (set of) backing disks, consider adding <span class="code">syncer { after <minor-1>; }</span>. For example, tell <span class="code">/dev/drbd1</span> to wait for <span class="code">/dev/drbd0</span> by adding <span class="code">syncer { after 0; }</span>. This will prevent simultaneous resyncs, which could seriously impact performance. The dependent resource will wait until the resource it names has finished syncing.}}

Validate: | |||

<source lang="bash">

drbdadm dump | |||

</source>

<source lang="text"> | |||

--== Thank you for participating in the global usage survey ==-- | |||

The server's response is: | |||

you are the 369th user to install this version | |||

# /usr/etc/drbd.conf | |||

common { | |||

protocol C; | |||

net { | |||

allow-two-primaries; | |||

after-sb-0pri discard-zero-changes; | |||

after-sb-1pri discard-secondary; | |||

after-sb-2pri disconnect; | |||

} | |||

disk { | |||

fencing resource-and-stonith; | |||

} | |||

syncer { | |||

rate 20M; | |||

} | |||

startup { | |||

wfc-timeout 300; | |||

degr-wfc-timeout 120; | |||

become-primary-on both; | |||

} | |||

handlers { | |||

pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f"; | |||

pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f"; | |||

local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f"; | |||

fence-peer /sbin/obliterate-peer.sh; | |||

} | |||

} | |||

# resource r0 on an-node01.alteeve.ca: not ignored, not stacked | |||

resource r0 { | |||

on an-node01.alteeve.ca { | |||

device /dev/drbd0 minor 0; | |||

disk /dev/sda5; | |||

address ipv4 192.168.2.71:7789; | |||

meta-disk internal; | |||

} | |||

on an-node02.alteeve.ca { | |||

device /dev/drbd0 minor 0; | |||

disk /dev/sda5; | |||

address ipv4 192.168.2.72:7789; | |||

meta-disk internal; | |||

} | |||

} | |||

# resource r1 on an-node01.alteeve.ca: not ignored, not stacked | |||

resource r1 { | |||

on an-node01.alteeve.ca { | |||

device /dev/drbd1 minor 1; | |||

disk /dev/sdb1; | |||

address ipv4 192.168.2.71:7790; | |||

meta-disk internal; | |||

} | |||

on an-node02.alteeve.ca { | |||

device /dev/drbd1 minor 1; | |||

disk /dev/sdb1; | |||

address ipv4 192.168.2.72:7790; | |||

meta-disk internal; | |||

} | |||

} | |||

</source>

<source lang="bash"> | |||

rsync -av /etc/drbd.d root@an-node02:/etc/ | |||

</source> | |||

<source lang="text"> | |||

drbd.d/ | |||

drbd.d/global_common.conf | |||

drbd.d/global_common.conf.orig | |||

drbd.d/r0.res | |||

drbd.d/r1.res | |||

sent 3523 bytes received 110 bytes 7266.00 bytes/sec | |||

total size is 3926 speedup is 1.08 | |||

</source>

== Initialize and First Start ==

'''Both''': | |||

Create the meta-data. | |||

<source lang="bash"> | |||

modprobe drbd

drbdadm create-md r{0,1} | |||

</source> | |||

<source lang="text"> | |||

Writing meta data... | |||

initializing activity log | |||

NOT initialized bitmap | |||

New drbd meta data block successfully created. | |||

success | |||

Writing meta data... | |||

initializing activity log | |||

NOT initialized bitmap | |||

New drbd meta data block successfully created. | |||

success | |||

</source> | |||

Attach, connect and confirm (after both have attached and connected): | |||

<source lang="bash"> | |||

drbdadm attach r{0,1} | |||

drbdadm connect r{0,1} | |||

cat /proc/drbd | |||

</source> | |||

<source lang="text"> | |||

version: 8.3.11 (api:88/proto:86-96) | |||

GIT-hash: 0de839cee13a4160eed6037c4bddd066645e23c5 build by root@an-node01.alteeve.ca, 2011-08-01 22:04:32 | |||

0: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r----- | |||

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:441969960 | |||

1: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r----- | |||

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:29309628 | |||

</source> | |||

There is no data, so force both devices to be instantly UpToDate:

<source lang="bash"> | |||

drbdadm -- --clear-bitmap new-current-uuid r{0,1} | |||

cat /proc/drbd | |||

</source> | |||

<source lang="text"> | |||

version: 8.3.11 (api:88/proto:86-96) | |||

GIT-hash: 0de839cee13a4160eed6037c4bddd066645e23c5 build by root@an-node01.alteeve.ca, 2011-08-01 22:04:32 | |||

0: cs:Connected ro:Secondary/Secondary ds:UpToDate/UpToDate C r----- | |||

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0 | |||

1: cs:Connected ro:Secondary/Secondary ds:UpToDate/UpToDate C r----- | |||

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0 | |||

</source> | |||

Set both to primary and run a final check. | |||

<source lang="bash"> | |||

drbdadm primary r{0,1} | |||

cat /proc/drbd | |||

</source> | |||

<source lang="text"> | |||

version: 8.3.11 (api:88/proto:86-96) | |||

GIT-hash: 0de839cee13a4160eed6037c4bddd066645e23c5 build by root@an-node01.alteeve.ca, 2011-08-01 22:04:32 | |||

0: cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate C r----- | |||

ns:0 nr:0 dw:0 dr:672 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0 | |||

1: cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate C r----- | |||

ns:0 nr:0 dw:0 dr:672 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0 | |||

</source> | |||
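The repeated checks of <span class="code">/proc/drbd</span> above can be scripted. This is a rough sketch only, assuming the 8.3-style layout shown in the output above; <span class="code">drbd_all_healthy</span> is a hypothetical helper name, and the demo runs against pasted sample text rather than a live node.

```shell
# Succeed only if every resource line in /proc/drbd-style text (file in $1)
# reports cs:Connected and ds:UpToDate/UpToDate, and at least one resource
# line is present.
drbd_all_healthy() {
    awk '
        /^ *[0-9]+: cs:/ {
            seen++
            if ($0 !~ /cs:Connected / || $0 !~ /ds:UpToDate\/UpToDate /) bad++
        }
        END {
            if (seen > 0 && bad == 0) exit 0
            exit 1
        }
    ' "$1"
}

# Demo on sample text copied from the output above (not a live node).
sample="$(mktemp)"
cat > "$sample" <<'EOF'
version: 8.3.11 (api:88/proto:86-96)
 0: cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate C r-----
    ns:0 nr:0 dw:0 dr:672 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0
 1: cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate C r-----
    ns:0 nr:0 dw:0 dr:672 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0
EOF
if drbd_all_healthy "$sample"; then
    echo "all DRBD resources connected and UpToDate"
else
    echo "at least one DRBD resource needs attention"
fi
rm -f "$sample"
```

On a node, <span class="code">drbd_all_healthy /proc/drbd</span> would run the same test against the live state.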

== Update the cluster == | |||

<source lang="bash"> | |||

vim /etc/cluster/cluster.conf | |||

</source> | |||

<source lang="xml"> | |||

<?xml version="1.0"?> | |||

<cluster config_version="17" name="an-clusterA"> | |||

<cman expected_votes="1" two_node="1"/> | |||

<totem rrp_mode="none" secauth="off"/> | |||

<clusternodes> | |||

<clusternode name="an-node01.alteeve.ca" nodeid="1"> | |||

<fence> | |||

<method name="apc_pdu"> | |||

<device action="reboot" name="pdu2" port="1"/> | |||

</method> | |||

</fence> | |||

</clusternode> | |||

<clusternode name="an-node02.alteeve.ca" nodeid="2"> | |||

<fence> | |||

<method name="apc_pdu"> | |||

<device action="reboot" name="pdu2" port="2"/> | |||

</method> | |||

</fence> | |||

</clusternode> | |||

</clusternodes> | |||

<fencedevices> | |||

<fencedevice agent="fence_apc" ipaddr="192.168.1.6" login="apc" name="pdu2" passwd="secret"/> | |||

</fencedevices> | |||

<fence_daemon post_join_delay="30"/> | |||

<rm> | |||

<resources> | |||

<ip address="192.168.2.100" monitor_link="on"/> | |||

<script file="/etc/init.d/drbd" name="drbd"/> | |||

<script file="/etc/init.d/clvmd" name="clvmd"/> | |||

<script file="/etc/init.d/tgtd" name="tgtd"/> | |||

</resources> | |||

<failoverdomains> | |||

<failoverdomain name="an1_only" nofailback="1" ordered="0" restricted="1"> | |||

<failoverdomainnode name="an-node01.alteeve.ca"/> | |||

</failoverdomain> | |||

<failoverdomain name="an2_only" nofailback="1" ordered="0" restricted="1"> | |||

<failoverdomainnode name="an-node02.alteeve.ca"/> | |||

</failoverdomain> | |||

<failoverdomain name="an1_primary" nofailback="1" ordered="1" restricted="0"> | |||

<failoverdomainnode name="an-node01.alteeve.ca" priority="1"/> | |||

<failoverdomainnode name="an-node02.alteeve.ca" priority="2"/> | |||

</failoverdomain> | |||

</failoverdomains> | |||

<service autostart="0" domain="an1_only" exclusive="0" max_restarts="0" name="an1_storage" recovery="restart" restart_expire_time="0"> | |||

<script ref="drbd"> | |||

<script ref="clvmd"/> | |||

</script> | |||

</service> | |||

<service autostart="0" domain="an2_only" exclusive="0" max_restarts="0" name="an2_storage" recovery="restart" restart_expire_time="0"> | |||

<script ref="drbd"> | |||

<script ref="clvmd"/> | |||

</script> | |||

</service> | |||

</rm> | |||

</cluster> | |||

</source> | |||

<source lang="bash"> | |||

rg_test test /etc/cluster/cluster.conf | |||

</source> | |||

<source lang="text"> | |||

Running in test mode. | |||

Loading resource rule from /usr/share/cluster/oralistener.sh | |||

Loading resource rule from /usr/share/cluster/apache.sh | |||

Loading resource rule from /usr/share/cluster/SAPDatabase | |||

Loading resource rule from /usr/share/cluster/postgres-8.sh | |||

Loading resource rule from /usr/share/cluster/lvm.sh | |||

Loading resource rule from /usr/share/cluster/mysql.sh | |||

Loading resource rule from /usr/share/cluster/lvm_by_vg.sh | |||

Loading resource rule from /usr/share/cluster/service.sh | |||

Loading resource rule from /usr/share/cluster/samba.sh | |||

Loading resource rule from /usr/share/cluster/SAPInstance | |||

Loading resource rule from /usr/share/cluster/checkquorum | |||

Loading resource rule from /usr/share/cluster/ocf-shellfuncs | |||

Loading resource rule from /usr/share/cluster/svclib_nfslock | |||

Loading resource rule from /usr/share/cluster/script.sh | |||

Loading resource rule from /usr/share/cluster/clusterfs.sh | |||

Loading resource rule from /usr/share/cluster/fs.sh | |||

Loading resource rule from /usr/share/cluster/oracledb.sh | |||

Loading resource rule from /usr/share/cluster/nfsserver.sh | |||

Loading resource rule from /usr/share/cluster/netfs.sh | |||

Loading resource rule from /usr/share/cluster/orainstance.sh | |||

Loading resource rule from /usr/share/cluster/vm.sh | |||

Loading resource rule from /usr/share/cluster/lvm_by_lv.sh | |||

Loading resource rule from /usr/share/cluster/tomcat-6.sh | |||

Loading resource rule from /usr/share/cluster/ip.sh | |||

Loading resource rule from /usr/share/cluster/nfsexport.sh | |||

Loading resource rule from /usr/share/cluster/openldap.sh | |||

Loading resource rule from /usr/share/cluster/ASEHAagent.sh | |||

Loading resource rule from /usr/share/cluster/nfsclient.sh | |||

Loading resource rule from /usr/share/cluster/named.sh | |||

Loaded 24 resource rules | |||

=== Resources List === | |||

Resource type: ip | |||

Instances: 1/1 | |||

Agent: ip.sh | |||

Attributes: | |||

address = 192.168.2.100 [ primary unique ] | |||

monitor_link = on | |||

nfslock [ inherit("service%nfslock") ] | |||

Resource type: script | |||

Agent: script.sh | |||

Attributes: | |||

name = drbd [ primary unique ] | |||

file = /etc/init.d/drbd [ unique required ] | |||

service_name [ inherit("service%name") ] | |||

Resource type: script | |||

Agent: script.sh | |||

Attributes: | |||

name = clvmd [ primary unique ] | |||

file = /etc/init.d/clvmd [ unique required ] | |||

service_name [ inherit("service%name") ] | |||

Resource type: script

Agent: script.sh | |||

Attributes: | |||

name = tgtd [ primary unique ] | |||

file = /etc/init.d/tgtd [ unique required ] | |||

service_name [ inherit("service%name") ] | |||

Resource type: service [INLINE] | |||

Instances: 1/1 | |||

Agent: service.sh | |||

Attributes: | |||

name = an1_storage [ primary unique required ] | |||

domain = an1_only [ reconfig ] | |||

autostart = 0 [ reconfig ] | |||

exclusive = 0 [ reconfig ] | |||

nfslock = 0 | |||

nfs_client_cache = 0 | |||

recovery = restart [ reconfig ] | |||

depend_mode = hard | |||

max_restarts = 0 | |||

restart_expire_time = 0 | |||

priority = 0 | |||

Resource type: service [INLINE] | |||

Instances: 1/1 | |||

Agent: service.sh | |||

Attributes: | |||

name = an2_storage [ primary unique required ] | |||

domain = an2_only [ reconfig ] | |||

autostart = 0 [ reconfig ] | |||

exclusive = 0 [ reconfig ] | |||

nfslock = 0 | |||

nfs_client_cache = 0 | |||

recovery = restart [ reconfig ] | |||

depend_mode = hard | |||

max_restarts = 0 | |||

restart_expire_time = 0 | |||

priority = 0 | |||

Resource type: service [INLINE]

Instances: 1/1 | |||

Agent: service.sh | |||

Attributes: | |||

name = san_ip [ primary unique required ] | |||

domain = an1_primary [ reconfig ] | |||

autostart = 0 [ reconfig ] | |||

exclusive = 0 [ reconfig ] | |||

nfslock = 0 | |||

nfs_client_cache = 0 | |||

recovery = relocate [ reconfig ] | |||

depend_mode = hard | |||

max_restarts = 0 | |||

restart_expire_time = 0 | |||

priority = 0 | |||

=== Resource Tree === | |||

service (S0) { | |||

name = "an1_storage"; | |||

domain = "an1_only"; | |||

autostart = "0"; | |||

exclusive = "0"; | |||

nfslock = "0"; | |||

nfs_client_cache = "0"; | |||

recovery = "restart"; | |||

depend_mode = "hard"; | |||

max_restarts = "0"; | |||

restart_expire_time = "0"; | |||

priority = "0"; | |||

script (S0) { | |||

name = "drbd"; | |||

file = "/etc/init.d/drbd"; | |||

service_name = "an1_storage"; | |||

script (S0) { | |||

name = "clvmd"; | |||

file = "/etc/init.d/clvmd"; | |||

service_name = "an1_storage"; | |||

} | |||

} | |||

} | |||

service (S0) { | |||

name = "an2_storage"; | |||

domain = "an2_only"; | |||

autostart = "0"; | |||

exclusive = "0"; | |||

nfslock = "0"; | |||

nfs_client_cache = "0"; | |||

recovery = "restart"; | |||

depend_mode = "hard"; | |||

max_restarts = "0"; | |||

restart_expire_time = "0"; | |||

priority = "0"; | |||

script (S0) { | |||

name = "drbd"; | |||

file = "/etc/init.d/drbd"; | |||

service_name = "an2_storage"; | |||

script (S0) { | |||

name = "clvmd"; | |||

file = "/etc/init.d/clvmd"; | |||

service_name = "an2_storage"; | |||

} | |||

} | |||

} | |||

service (S0) { | |||

name = "san_ip"; | |||

domain = "an1_primary"; | |||

autostart = "0"; | |||

exclusive = "0"; | |||

nfslock = "0"; | |||

nfs_client_cache = "0"; | |||

recovery = "relocate"; | |||

depend_mode = "hard"; | |||

max_restarts = "0"; | |||

restart_expire_time = "0"; | |||

priority = "0"; | |||

ip (S0) { | |||

address = "192.168.2.100"; | |||

monitor_link = "on"; | |||

nfslock = "0"; | |||

} | |||

} | |||

=== Failover Domains === | |||

Failover domain: an1_only | |||

Flags: Restricted No Failback | |||

Node an-node01.alteeve.ca (id 1, priority 0) | |||

Failover domain: an2_only | |||

Flags: Restricted No Failback | |||

Node an-node02.alteeve.ca (id 2, priority 0) | |||

Failover domain: an1_primary | |||

Flags: Ordered No Failback | |||

Node an-node01.alteeve.ca (id 1, priority 1) | |||

Node an-node02.alteeve.ca (id 2, priority 2) | |||

=== Event Triggers === | |||

Event Priority Level 100: | |||

Name: Default | |||

(Any event) | |||

File: /usr/share/cluster/default_event_script.sl | |||

</source> | |||

<source lang="bash"> | |||

cman_tool version -r | |||

</source> | |||

<source lang="text"> | |||

You have not authenticated to the ricci daemon on an-node01.alteeve.ca | |||

Password: | |||

</source> | |||

'''<span class="code">an-node01</span>''': | |||

<source lang="bash">

clusvcadm -e service:an1_storage | |||

</source>

<source lang="text">

service:an1_storage is now running on an-node01.alteeve.ca | |||

</source>

'''<span class="code">an-node02</span>''': | |||

<source lang="bash">

clusvcadm -e service:an2_storage | |||

</source> | |||

<source lang="text"> | |||

service:an2_storage is now running on an-node02.alteeve.ca | |||

</source> | |||

'''Either''' | |||

<source lang="bash"> | |||

cat /proc/drbd | |||

</source> | |||

<source lang="text"> | |||

version: 8.3.11 (api:88/proto:86-96) | |||

GIT-hash: 0de839cee13a4160eed6037c4bddd066645e23c5 build by root@an-node01.alteeve.ca, 2011-08-01 22:04:32 | |||

0: cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate C r----- | |||

ns:0 nr:0 dw:0 dr:924 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0 | |||

1: cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate C r----- | |||

ns:0 nr:0 dw:0 dr:916 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0 | |||

</source> | |||

== Configure Clustered LVM == | |||

'''<span class="code">an-node01</span>''':

<source lang="bash"> | |||

cp /etc/lvm/lvm.conf /etc/lvm/lvm.conf.orig | |||

vim /etc/lvm/lvm.conf | |||

diff -u /etc/lvm/lvm.conf.orig /etc/lvm/lvm.conf | |||

</source> | |||

<source lang="diff"> | |||

--- /etc/lvm/lvm.conf.orig 2011-08-02 21:59:01.000000000 -0400 | |||

+++ /etc/lvm/lvm.conf 2011-08-02 22:00:17.000000000 -0400 | |||

@@ -50,7 +50,8 @@ | |||

# By default we accept every block device: | |||

- filter = [ "a/.*/" ] | |||

+ #filter = [ "a/.*/" ] | |||

+ filter = [ "a|/dev/drbd*|", "r/.*/" ] | |||

# Exclude the cdrom drive | |||

# filter = [ "r|/dev/cdrom|" ] | |||

@@ -308,7 +309,8 @@ | |||

# Type 3 uses built-in clustered locking. | |||

# Type 4 uses read-only locking which forbids any operations that might | |||

# change metadata. | |||

- locking_type = 1 | |||

+ #locking_type = 1 | |||

+ locking_type = 3 | |||

# Set to 0 to fail when a lock request cannot be satisfied immediately. | |||

wait_for_locks = 1 | |||

@@ -324,7 +326,8 @@ | |||

# to 1 an attempt will be made to use local file-based locking (type 1). | |||

# If this succeeds, only commands against local volume groups will proceed. | |||

# Volume Groups marked as clustered will be ignored. | |||

- fallback_to_local_locking = 1 | |||

+ #fallback_to_local_locking = 1 | |||

+ fallback_to_local_locking = 0 | |||

# Local non-LV directory that holds file-based locks while commands are | |||

# in progress. A directory like /tmp that may get wiped on reboot is OK. | |||

</source> | |||
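After editing, it can be worth confirming that the two locking settings from the diff above are the ones actually in effect, since the old values were left behind as comments. A minimal sketch follows; the helper names <span class="code">lvm_setting</span> and <span class="code">lvm_is_clustered</span> are invented for this example, and it assumes simple one-line <span class="code">key = value</span> entries.

```shell
# Print the active (uncommented) value of setting $2 in the lvm.conf-style
# file $1. If the key appears more than once, the last occurrence is printed.
lvm_setting() {
    sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*\([^[:space:]#]*\).*/\1/p" "$1" | tail -n 1
}

# Succeed only if the file selects clustered locking (type 3) and disables
# the fallback to local locking, as in the diff above.
lvm_is_clustered() {
    [ "$(lvm_setting "$1" locking_type)" = "3" ] &&
        [ "$(lvm_setting "$1" fallback_to_local_locking)" = "0" ]
}

# Demo on a small sample fragment (not a full lvm.conf).
sample="$(mktemp)"
cat > "$sample" <<'EOF'
    #locking_type = 1
    locking_type = 3
    #fallback_to_local_locking = 1
    fallback_to_local_locking = 0
EOF
if lvm_is_clustered "$sample"; then
    echo "clustered locking is configured"
else
    echo "clustered locking is NOT configured"
fi
rm -f "$sample"
```

Running <span class="code">lvm_is_clustered /etc/lvm/lvm.conf</span> on each node after the <span class="code">rsync</span> would apply the same check to the real file.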

<source lang="bash"> | |||

rsync -av /etc/lvm/lvm.conf root@an-node02:/etc/lvm/ | |||

</source> | |||

<source lang="text"> | |||

sending incremental file list | |||

lvm.conf | |||

sent 2412 bytes received 247 bytes 5318.00 bytes/sec | |||

total size is 24668 speedup is 9.28 | |||

</source> | |||

Create the LVM PVs, VGs and LVs. | |||

'''<span class="code">an-node01</span>''': | |||

<source lang="bash"> | |||

pvcreate /dev/drbd{0,1} | |||

</source> | |||

<source lang="text"> | |||

Physical volume "/dev/drbd0" successfully created | |||

Physical volume "/dev/drbd1" successfully created | |||

</source> | |||

'''<span class="code">an-node02</span>''': | |||

<source lang="bash">

pvscan | |||

</source>

<source lang="text">

PV /dev/drbd0 lvm2 [421.50 GiB] | |||

PV /dev/drbd1 lvm2 [27.95 GiB]

Total: 2 [449.45 GiB] / in use: 0 [0 ] / in no VG: 2 [449.45 GiB] | |||

</source> | |||

'''<span class="code">an-node01</span>''': | |||

<source lang="bash"> | |||

vgcreate -c y hdd_vg0 /dev/drbd0 && vgcreate -c y ssd_vg0 /dev/drbd1

</source> | |||

<source lang="text"> | |||

Clustered volume group "hdd_vg0" successfully created | |||

Clustered volume group "ssd_vg0" successfully created | |||

</source> | |||

'''<span class="code">an-node02</span>''': | |||

<source lang="bash"> | |||

vgscan | |||

</source> | |||

<source lang="text"> | |||

Reading all physical volumes. This may take a while... | |||

Found volume group "ssd_vg0" using metadata type lvm2 | |||

Found volume group "hdd_vg0" using metadata type lvm2 | |||

</source>

'''<span class="code">an-node01</span>''': | |||

<source lang="bash">

lvcreate -l 100%FREE -n lun0 /dev/hdd_vg0 && lvcreate -l 100%FREE -n lun1 /dev/ssd_vg0 | |||

</source>

<source lang="text">

Logical volume "lun0" created | |||

Logical volume "lun1" created | |||

</source> | |||

'''<span class="code">an-node02</span>''': | |||

<source lang="bash">

lvscan | |||

</source>

<source lang="text">

ACTIVE '/dev/ssd_vg0/lun1' [27.95 GiB] inherit | |||

ACTIVE '/dev/hdd_vg0/lun0' [421.49 GiB] inherit | |||

</source> | |||

= iSCSI notes = | |||

A comparison of the pros and cons of IET versus tgt is still needed.

The default iSCSI port is <span class="code">3260</span>.

''initiator'': The client side of an iSCSI connection.

''target'': The server side of an iSCSI connection.

''SID'': Session ID; found with <span class="code">iscsiadm -m session -P 1</span>. The SID and the sysfs path are not persistent; they depend in part on start order.

''IQN'': iSCSI Qualified Name; a string that uniquely identifies a target or an initiator.
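An IQN follows the pattern <span class="code">iqn.YYYY-MM.reversed.domain[:identifier]</span>. As a quick sanity check before putting a name into <span class="code">targets.conf</span>, something like the sketch below can catch obvious typos. The function name <span class="code">is_iqn</span> is made up for this example, and the pattern is deliberately loose; it is not a full implementation of the iSCSI naming rules.

```shell
# Succeed if $1 looks like an IQN: "iqn.", a year-month date, a reversed
# domain name, and an optional ":identifier" suffix. This is a loose check.
is_iqn() {
    printf '%s\n' "$1" |
        grep -Eq '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:.+)?$'
}

# Demo with the target name used in this tutorial and a malformed one.
for name in "iqn.2011-08.com.alteeve:an-clusterA.target01" "an-clusterA.target01"; do
    if is_iqn "$name"; then
        echo "looks like an IQN: $name"
    else
        echo "not an IQN:        $name"
    fi
done
```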

'''Both''': | |||

<source lang="bash">

yum install iscsi-initiator-utils scsi-target-utils | |||

</source>

'''<span class="code">an-node01</span>''': | |||

<source lang="bash"> | |||

cp /etc/tgt/targets.conf /etc/tgt/targets.conf.orig | |||

vim /etc/tgt/targets.conf | |||

diff -u /etc/tgt/targets.conf.orig /etc/tgt/targets.conf | |||

</source> | |||

<source lang="diff">

--- /etc/tgt/targets.conf.orig 2011-07-31 12:38:35.000000000 -0400 | |||

+++ /etc/tgt/targets.conf 2011-08-02 22:19:06.000000000 -0400 | |||

@@ -251,3 +251,9 @@ | |||

# vendor_id VENDOR1 | |||

# </direct-store> | |||

#</target> | |||

+ | |||

+<target iqn.2011-08.com.alteeve:an-clusterA.target01> | |||

+ direct-store /dev/drbd0 | |||

+ direct-store /dev/drbd1 | |||

+ vendor_id Alteeve

+</target>

</source> | |||

<source lang="bash"> | |||

rsync -av /etc/tgt/targets.conf root@an-node02:/etc/tgt/ | |||

</source> | |||

<source lang="text"> | |||

sending incremental file list | |||

targets.conf | |||

sent 909 bytes received 97 bytes 670.67 bytes/sec | |||

total size is 7093 speedup is 7.05 | |||

</source>

=== Update the cluster === | |||

<source lang="xml"> | |||

<service autostart="0" domain="an1_only" exclusive="0" max_restarts="0" name="an1_storage" recovery="restart" restart_expire_time="0"> | |||

<script ref="drbd"> | |||

<script ref="clvmd"> | |||

<script ref="tgtd"/> | |||

</script> | |||

</script> | |||

</service> | |||

<service autostart="0" domain="an2_only" exclusive="0" max_restarts="0" name="an2_storage" recovery="restart" restart_expire_time="0"> | |||

<script ref="drbd"> | |||

<script ref="clvmd"> | |||

<script ref="tgtd"/> | |||

</script> | |||

</script> | |||

</service> | |||

</source> | |||

= Connect to the SAN from a VM node =

'''<span class="code">an-node03+</span>''': | |||

<source lang="bash">

iscsiadm -m discovery -t sendtargets -p 192.168.2.100 | |||

</source>

<source lang="text"> | |||

192.168.2.100:3260,1 iqn.2011-08.com.alteeve:an-clusterA.target01 | |||

</source>
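Each discovery line has the form <span class="code">portal,tpgt target</span>. If you want to script the login step, the portal and target can be pulled apart as in the sketch below. The helper name <span class="code">parse_discovery</span> is hypothetical, and the demo feeds it the sample line from the output above rather than calling <span class="code">iscsiadm</span>.

```shell
# Read sendtargets discovery lines on stdin and print "portal target" pairs,
# dropping the ",<tpgt>" suffix from the portal field.
parse_discovery() {
    while read -r portal target; do
        [ -n "$target" ] || continue      # skip blank or malformed lines
        printf '%s %s\n' "${portal%%,*}" "$target"
    done
}

# Demo using the discovery line shown above.
printf '%s\n' "192.168.2.100:3260,1 iqn.2011-08.com.alteeve:an-clusterA.target01" |
    parse_discovery |
    while read -r portal target; do
        # On a real node this is where the login command would be run, e.g.:
        #   iscsiadm --mode node --portal "$portal" --target "$target" --login
        echo "portal=$portal target=$target"
    done
```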

<source lang="bash"> | |||

iscsiadm --mode node --portal 192.168.2.100 --target iqn.2011-08.com.alteeve:an-clusterA.target01 --login | |||

</source> | |||

<source lang="text"> | |||

Logging in to [iface: default, target: iqn.2011-08.com.alteeve:an-clusterA.target01, portal: 192.168.2.100,3260] | |||

Login to [iface: default, target: iqn.2011-08.com.alteeve:an-clusterA.target01, portal: 192.168.2.100,3260] successful. | |||

</source> | |||

<source lang="bash"> | |||

fdisk -l | |||

</source> | |||

<source lang="text"> | |||

Disk /dev/sda: 500.1 GB, 500107862016 bytes | |||

255 heads, 63 sectors/track, 60801 cylinders | |||

Units = cylinders of 16065 * 512 = 8225280 bytes | |||

Sector size (logical/physical): 512 bytes / 512 bytes | |||

I/O size (minimum/optimal): 512 bytes / 512 bytes | |||

Disk identifier: 0x00062f4a | |||

Device Boot Start End Blocks Id System

/dev/sda1 * 1 33 262144 83 Linux | |||

Partition 1 does not end on cylinder boundary. | |||

/dev/sda2 33 5255 41943040 83 Linux | |||

/dev/sda3 5255 5777 4194304 82 Linux swap / Solaris | |||

Disk /dev/sdb: 452.6 GB, 452573790208 bytes

255 heads, 63 sectors/track, 55022 cylinders | |||

Units = cylinders of 16065 * 512 = 8225280 bytes | |||

Sector size (logical/physical): 512 bytes / 512 bytes | |||

I/O size (minimum/optimal): 512 bytes / 512 bytes | |||

Disk identifier: 0x00000000 | |||

Disk /dev/sdb doesn't contain a valid partition table

Disk /dev/sdc: 30.0 GB, 30010245120 bytes | |||

64 heads, 32 sectors/track, 28620 cylinders | |||

Units = cylinders of 2048 * 512 = 1048576 bytes | |||

Sector size (logical/physical): 512 bytes / 512 bytes | |||

I/O size (minimum/optimal): 512 bytes / 512 bytes | |||

Disk identifier: 0x00000000 | |||

Disk /dev/sdc doesn't contain a valid partition table | |||

</source> | |||

== Setup the VM Cluster == | |||

Install RPMs. | |||

<source lang="bash"> | |||

yum -y install lvm2-cluster cman fence-agents | |||

</source> | |||

Configure <span class="code">lvm.conf</span>. | |||

<source lang="bash"> | |||

cp /etc/lvm/lvm.conf /etc/lvm/lvm.conf.orig | |||

vim /etc/lvm/lvm.conf | |||

diff -u /etc/lvm/lvm.conf.orig /etc/lvm/lvm.conf | |||

</source> | |||

<source lang="diff"> | |||

--- /etc/lvm/lvm.conf.orig 2011-08-02 21:59:01.000000000 -0400 | |||

+++ /etc/lvm/lvm.conf 2011-08-03 00:35:45.000000000 -0400 | |||

@@ -308,7 +308,8 @@ | |||

# Type 3 uses built-in clustered locking. | |||

# Type 4 uses read-only locking which forbids any operations that might | |||

# change metadata. | |||

- locking_type = 1 | |||

+ #locking_type = 1 | |||

+ locking_type = 3 | |||

# Set to 0 to fail when a lock request cannot be satisfied immediately. | |||

wait_for_locks = 1 | |||

@@ -324,7 +325,8 @@ | |||

# to 1 an attempt will be made to use local file-based locking (type 1). | |||

# If this succeeds, only commands against local volume groups will proceed. | |||

# Volume Groups marked as clustered will be ignored. | |||

- fallback_to_local_locking = 1 | |||

+ #fallback_to_local_locking = 1 | |||

+ fallback_to_local_locking = 0 | |||

# Local non-LV directory that holds file-based locks while commands are | |||

# in progress. A directory like /tmp that may get wiped on reboot is OK. | |||

</source> | |||

<source lang="bash">

rsync -av /etc/lvm/lvm.conf root@an-node04:/etc/lvm/ | |||

</source>

<source lang="text">

sending incremental file list | |||

lvm.conf | |||

sent 873 bytes received 247 bytes 2240.00 bytes/sec | |||

total size is 24625 speedup is 21.99 | |||

</source>

<source lang="bash"> | |||

rsync -av /etc/lvm/lvm.conf root@an-node05:/etc/lvm/ | |||

</source> | |||

<source lang="text"> | |||

sending incremental file list | |||

lvm.conf | |||

sent 873 bytes received 247 bytes 2240.00 bytes/sec | |||

total size is 24625 speedup is 21.99 | |||

</source> | |||

Configure the cluster.

<source lang="bash">

vim /etc/cluster/cluster.conf | |||

</source> | |||

<source lang="xml"> | |||

<?xml version="1.0"?> | |||

<cluster config_version="5" name="an-clusterB"> | |||

<totem rrp_mode="none" secauth="off"/> | |||

<clusternodes> | |||

<clusternode name="an-node03.alteeve.ca" nodeid="1"> | |||

<fence> | |||

<method name="apc_pdu"> | |||

<device action="reboot" name="pdu2" port="3"/> | |||

</method> | |||

</fence> | |||

</clusternode> | |||

<clusternode name="an-node04.alteeve.ca" nodeid="2"> | |||

<fence> | |||

<method name="apc_pdu"> | |||

<device action="reboot" name="pdu2" port="4"/> | |||

</method> | |||

</fence> | |||

</clusternode> | |||

<clusternode name="an-node05.alteeve.ca" nodeid="3"> | |||

<fence> | |||

<method name="apc_pdu"> | |||

<device action="reboot" name="pdu2" port="5"/> | |||

</method> | |||

</fence> | |||

</clusternode> | |||

</clusternodes> | |||

<fencedevices> | |||

<fencedevice agent="fence_apc" ipaddr="192.168.1.6" login="apc" name="pdu2" passwd="secret"/> | |||

</fencedevices> | |||

<fence_daemon post_join_delay="30"/> | |||

<rm> | |||

<resources> | |||

<script file="/etc/init.d/iscsi" name="iscsi" /> | |||

<script file="/etc/init.d/clvmd" name="clvmd" /> | |||

</resources> | |||

<failoverdomains> | |||

<failoverdomain name="an3_only" nofailback="1" ordered="0" restricted="1"> | |||

<failoverdomainnode name="an-node03.alteeve.ca" /> | |||

</failoverdomain> | |||

<failoverdomain name="an4_only" nofailback="1" ordered="0" restricted="1"> | |||

<failoverdomainnode name="an-node04.alteeve.ca" /> | |||

</failoverdomain> | |||

<failoverdomain name="an5_only" nofailback="1" ordered="0" restricted="1"> | |||

<failoverdomainnode name="an-node05.alteeve.ca" /> | |||

</failoverdomain> | |||

</failoverdomains> | |||

<service autostart="1" domain="an3_only" exclusive="0" max_restarts="0" name="an3_storage" recovery="restart">

<script ref="iscsi"> | |||

<script ref="clvmd"/> | |||

</script> | |||

</service> | |||

<service autostart="1" domain="an4_only" exclusive="0" max_restarts="0" name="an4_storage" recovery="restart">

<script ref="iscsi"> | |||

<script ref="clvmd"/> | |||

</script> | |||

</service> | |||

<service autostart="1" domain="an5_only" exclusive="0" max_restarts="0" name="an5_storage" recovery="restart">

<script ref="iscsi"> | |||

<script ref="clvmd"/> | |||

</script> | |||

</service> | |||

	</rm>
</cluster>
</source>

<source lang="bash">
ccs_config_validate
</source>

<source lang="text">
Configuration validates
</source>
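A successful validation does not tell you whether the service names match the ones you will later pass to <span class="code">clusvcadm</span>. The following is a minimal sketch, not part of the original procedure, that lists any <span class="code"><service></span> name appearing more than once in a configuration file. The function name <span class="code">duplicate_services</span> is hypothetical, and the path in the usage note assumes the usual <span class="code">/etc/cluster/cluster.conf</span> location.

<source lang="bash">
# Print every rgmanager service name that occurs more than once in the
# given cluster.conf. No output means all <service> names are unique.
# Assumes one <service ...> opening tag per line, as in the file above.
duplicate_services() {
    sed -n 's/.*<service [^>]*name="\([^"]*\)".*/\1/p' "$1" | sort | uniq -d
}
</source>

Run it as <span class="code">duplicate_services /etc/cluster/cluster.conf</span>; any name it prints should be corrected before the services are enabled.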

Make sure <span class="code">iscsi</span> and <span class="code">clvmd</span> do not start on boot, stop both daemons, then confirm that each starts and stops cleanly.

<source lang="bash">
chkconfig clvmd off; chkconfig iscsi off; /etc/init.d/iscsi stop && /etc/init.d/clvmd stop
</source>

<source lang="text">
Stopping iscsi: [ OK ]
</source>

<source lang="bash">
/etc/init.d/clvmd start && /etc/init.d/iscsi start && /etc/init.d/iscsi stop && /etc/init.d/clvmd stop
</source>

<source lang="text">
Starting clvmd:
Activating VG(s): No volume groups found
[ OK ]
Starting iscsi: [ OK ]
Stopping iscsi: [ OK ]
Signaling clvmd to exit [ OK ]
clvmd terminated [ OK ]
</source>
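To double-check that neither daemon is still enabled at boot, the output of <span class="code">chkconfig --list</span> can be filtered for runlevels marked <span class="code">on</span>. This is only a sketch; the helper name <span class="code">boot_runlevels</span> is hypothetical and it assumes the usual one-line-per-service SysV listing.

<source lang="bash">
# Read `chkconfig --list <service>` output on stdin and print, one per
# line, each runlevel still marked "on". No output means the service
# will not start at boot.
boot_runlevels() {
    grep -o '[0-6]:on' | cut -d: -f1
}
</source>

For example, <span class="code">chkconfig --list iscsi | boot_runlevels</span> should print nothing once the service has been turned off.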

Use the cluster to stop the services (in case they were autostarted earlier), then start them.

<source lang="bash">
# Disable (stop)
clusvcadm -d service:an3_storage
clusvcadm -d service:an4_storage
clusvcadm -d service:an5_storage
# Enable (start)
clusvcadm -e service:an3_storage -m an-node03.alteeve.ca
clusvcadm -e service:an4_storage -m an-node04.alteeve.ca
clusvcadm -e service:an5_storage -m an-node05.alteeve.ca
# Check
clustat
</source>

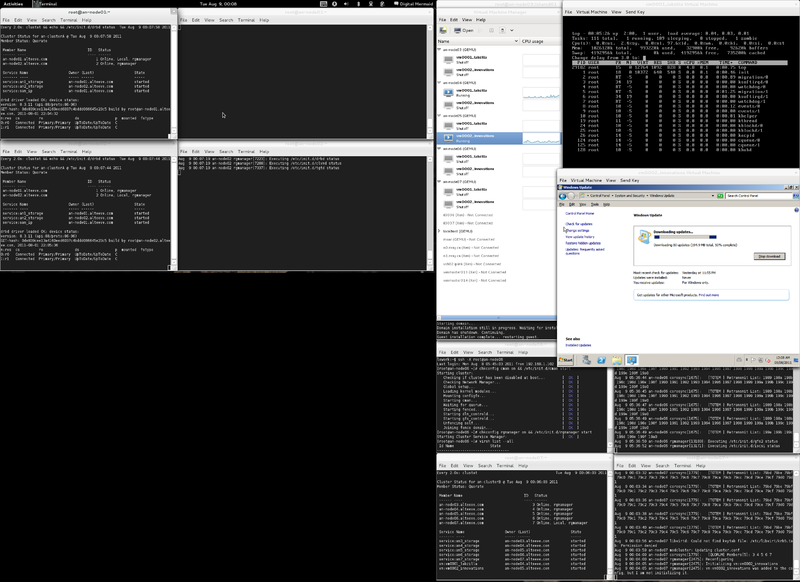

<source lang="text">
Cluster Status for an-clusterB @ Wed Aug 3 00:25:10 2011
Member Status: Quorate

 Member Name                          ID   Status
 ------ ----                          ---- ------
 an-node03.alteeve.ca                    1 Online, Local, rgmanager
 an-node04.alteeve.ca                    2 Online, rgmanager
 an-node05.alteeve.ca                    3 Online, rgmanager

 Service Name                Owner (Last)                State
 ------- ----                ----- ------                -----
 service:an3_storage         an-node03.alteeve.ca        started
 service:an4_storage         an-node04.alteeve.ca        started
 service:an5_storage         an-node05.alteeve.ca        started
</source>
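Rather than reading the table by eye, the service rows can be filtered for anything that is not in the <span class="code">started</span> state. This is a rough sketch: the helper name <span class="code">not_started</span> is hypothetical, and it assumes the state is the last column of each <span class="code">service:</span> row, as in the output above.

<source lang="bash">
# Read clustat output on stdin and print each service whose final
# column (the state) is anything other than "started".
not_started() {
    awk '$1 ~ /^service:/ && $NF != "started" { print $1 " (" $NF ")" }'
}
</source>

Used as <span class="code">clustat | not_started</span>, it prints nothing when all storage services are running.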

== Flush iSCSI's Cache ==

If you remove an IQN (or change the name of one), the <span class="code">/etc/init.d/iscsi</span> script will return errors. To flush the cached node records and re-scan, run the following. I am sure there is a more elegant way.

<source lang="bash">
/etc/init.d/iscsi stop && rm -rf /var/lib/iscsi/nodes/* && iscsiadm -m discovery -t sendtargets -p 192.168.2.100
</source>
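One possibly gentler alternative is to delete only the stale record with <span class="code">iscsiadm</span>'s node mode instead of wiping the whole directory. The sketch below is untested on this cluster. The function name <span class="code">flush_stale_target</span> and the <span class="code">DRY_RUN</span> switch are hypothetical, the IQN must be replaced with the target you actually removed or renamed, and it assumes the portal listens on the default port.

<source lang="bash">
# Stop iscsi, delete one stale node record, then re-run discovery.
#   $1 = IQN of the removed or renamed target (you must supply this)
#   $2 = portal address, e.g. 192.168.2.100
# Set DRY_RUN=1 to print the commands instead of executing them.
flush_stale_target() {
    run=""
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    $run /etc/init.d/iscsi stop &&
    $run iscsiadm -m node -o delete -T "$1" -p "$2" &&
    $run iscsiadm -m discovery -t sendtargets -p "$2"
}
</source>

Running it first with <span class="code">DRY_RUN=1</span> shows exactly what would be executed before anything is changed.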

== Setup the VM Cluster's Clustered LVM ==

=== Partition the SAN disks ===

'''<span class="code">an-node03</span>''':

<source lang="bash">
fdisk -l
</source>

<source lang="text">
Disk /dev/sda: 500.1 GB, 500107862016 bytes
255 heads, 63 sectors/track, 60801 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00062f4a

   Device Boot      Start         End      Blocks   Id  System
/dev/sda1   *           1          33      262144   83  Linux
Partition 1 does not end on cylinder boundary.
/dev/sda2              33        5255    41943040   83  Linux
/dev/sda3            5255        5777     4194304   82  Linux swap / Solaris

Disk /dev/sdc: 30.0 GB, 30010245120 bytes
64 heads, 32 sectors/track, 28620 cylinders
Units = cylinders of 2048 * 512 = 1048576 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000

Disk /dev/sdc doesn't contain a valid partition table

Disk /dev/sdb: 452.6 GB, 452573790208 bytes
255 heads, 63 sectors/track, 55022 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000

Disk /dev/sdb doesn't contain a valid partition table
</source>

Create partitions.

<source lang="bash">
fdisk /dev/sdb
</source>

<source lang="text">
Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
Building a new DOS disklabel with disk identifier 0x403f1fb8.
Changes will remain in memory only, until you decide to write them.
After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
         switch off the mode (command 'c') and change display units to
         sectors (command 'u').

Command (m for help): c
DOS Compatibility flag is not set

Command (m for help): u
Changing display/entry units to sectors

Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4): 1
First cylinder (1-55022, default 1): 1
Last cylinder, +cylinders or +size{K,M,G} (1-55022, default 55022):
Using default value 55022

Command (m for help): p

Disk /dev/sdb: 452.6 GB, 452573790208 bytes
255 heads, 63 sectors/track, 55022 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x403f1fb8

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1       55022   441964183+  83  Linux

Command (m for help): t
Selected partition 1
Hex code (type L to list codes): 8e
Changed system type of partition 1 to 8e (Linux LVM)

Command (m for help): p

Disk /dev/sdb: 452.6 GB, 452573790208 bytes
255 heads, 63 sectors/track, 55022 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x403f1fb8

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1       55022   441964183+  8e  Linux LVM

Command (m for help): w
The partition table has been altered!

Calling ioctl() to re-read partition table.
Syncing disks.
</source>

<source lang="bash">
fdisk /dev/sdc
</source>

<source lang="text">
Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
Building a new DOS disklabel with disk identifier 0xba7503eb.
Changes will remain in memory only, until you decide to write them.
After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
         switch off the mode (command 'c') and change display units to
         sectors (command 'u').

Command (m for help): c
DOS Compatibility flag is not set

Command (m for help): u
Changing display/entry units to sectors