AN!CDB - Cluster Dashboard: Difference between revisions

Revision as of 17:19, 21 August 2013


AN!CDB, the Alteeve's Niche! Cluster Dashboard, is a management tool for clusters built following the 2-Node Red Hat KVM Cluster Tutorial.

Its first and foremost goal is to be extremely easy to use. No special skills or understanding of HA is required!

To achieve this ease of use, the cluster must be built to fairly specific requirements. Simplicity of use requires that many assumptions be made.

AN!CDB provides:

- A single view of all cluster components and their current status.

- Control of the cluster nodes. Nodes can be:

  - Powered On, Powered Off and Fenced

  - Joined to and withdrawn from the cluster

- Control of the virtual servers. Servers can be:

  - Booted up, gracefully shut down and forced off

  - Migrated between nodes

- Create, modify and delete servers:

  - Create and upload installation and driver media

  - Provision new servers, installing from media just like bare-iron servers

  - Insert and eject CD/DVD images

  - Change allocated RAM and CPUs

  - Delete servers that are no longer needed

AN!CDB is designed to run on a machine outside of the cluster. The only customization needed is adding the cluster's name and the names of its nodes to the program. Once that is done, AN!CDB will collect and cache everything needed to control the cluster, even when both nodes are offline.

Installation

The easiest way to set up AN!CDB is to use the an-cdb-install.sh script.

Install CentOS or RHEL version 6.x on your dashboard server. Configure the network interfaces so that the dashboard can connect to the IFN and BCN.

Once the network is set up and the install is complete, download the install script and make it executable:

curl -O https://raw.github.com/digimer/an-cdb/master/an-cdb-install.sh

chmod 755 an-cdb-install.sh


% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 5609 100 5609 0 0 37451 0 --:--:-- --:--:-- --:--:-- 238k

Once you have saved the install script, edit it with your favourite text editor and change the following variables:

vi an-cdb-install.sh

# Change the following variables to suit your setup.

PASSWORD="secret"

HOSTNAME="an-m03.alteeve.ca"

CUSTOMER="Alteeve's Niche!"
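If you are setting up several dashboards, you may prefer to script this edit instead of opening vi. The helper below is a minimal sketch. The function name set_install_var is hypothetical and not part of AN!CDB. It assumes, as shown above, that each variable sits on its own line starting with NAME=. It also assumes your values contain no double quotes, because the value is written back inside double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of AN!CDB): set NAME="value" in the install
# script without opening an editor.
# ASSUMPTION: each variable is on its own line starting with NAME=, and the
# value contains no double quotes.
set_install_var() {
    local file=$1 name=$2 value=$3 escaped
    # Escape characters that are special in a sed replacement: \ & and the | delimiter.
    escaped=$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')
    # Replace the whole NAME=... line (GNU sed -i edits the file in place).
    sed -i "s|^${name}=.*|${name}=\"${escaped}\"|" "$file"
}
```

For example, after sourcing the function, run `set_install_var an-cdb-install.sh HOSTNAME an-m03.alteeve.ca`. Keep in mind that passing the password on the command line may leave it in your shell history.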

Once the changes are saved, run the script. It could take quite a while to complete depending on how slow your dashboard machine is, how slow your internet connection is and how much needs to be downloaded.

./an-cdb-install.sh

##############################################################################

# AN!CDB - Alteeve's Niche! - Cluster Dashboard #

# Install Beginning #

##############################################################################

I will set the dashboard's 'admin' user and the local 'alteeve' user passwords

to: [secret]

I will set the hostname for this dashboard to: [an-m03.alteeve.ca]

I will set the client's company name on the dashboard's login prompt to:

[Alteeve's Niche!]

Shall I proceed? (1 -> Yes, 2 -> No)

1) Yes

2) No

#? 1

- Beginning now.

<snip>

##############################################################################

# #

# Dashboard install is complete. #

# #

# When you reboot and log in, you should see a file called: #

# [public_keys.txt] on the desktop. Copy the contents of that file and add #

# them to: [/root/.ssh/authorized_keys] on each cluster node you wish this #

# dashboard to access. #

# #

# Once the keys are added, switch to the: [apache] user and use ssh to #

# connect to each node for the first time. This is needed to add the node's #

# SSH fingerprint to the apache user's: [~/.ssh/known_hosts] file. You only #

# need to do this once per node. #

# #

# Please reboot to ensure the latest kernel is being used. #

# #

# Remember to update: [/var/www/home/ricci_pw.txt] and: [/etc/an/an.conf]!! #

# #

##############################################################################

Generally, the script will update the kernel and/or install the graphical desktop, so you will usually want to reboot at this point.

As it says above, remember to edit the /var/www/home/ricci_pw.txt and /etc/an/an.conf files to add the information for the Anvil!s you want this dashboard to have access to.

Also remember to open a terminal as the apache user and SSH to each node. This will ask you to verify each node's fingerprint and then record it in the apache user's /var/www/home/.ssh/known_hosts file. The dashboard will not connect to a node until this is done.

AN! has generally installed AN!CDB on ASUS EeeBox PC-EB1033 1-liter nettop PCs. You should be able to use any computer or appliance that can run the 64-bit version of RHEL or CentOS 6.

Adding an Anvil! to a Dashboard

Adding an Anvil! to a given dashboard involves a few steps:

- Setting up SSH access from the dashboard to your nodes.

- Adding the Anvil!'s details to the dashboard's /etc/an/an.conf file.

- Adding the Anvil!'s ricci user's password to the dashboard's /var/www/home/ricci_pw.txt file.

- Adding each node to the dashboard's Virtual Machine Manager application.

Adding the AN!CDB SSH keys to the Nodes

When the dashboard was installed, a file called public_keys.txt should have been created on the desktop. This file contains the keys needed to provide password-less SSH access for the dashboard's apache, alteeve and root users. Strictly speaking, the only key you need to add to the nodes is the apache user's key. Without it, the dashboard cannot authenticate against a node (at this time... patches welcomed!).

Adding the alteeve user's key allows Virtual Machine Manager to connect without having to enter a password. If you prefer, though, you can leave out the alteeve user's key. Adding the root user's key may come in handy if you use the dashboard machine as a gateway into the cluster. It is the least important of the three and is safe to leave out if you prefer.
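If you only want to add the apache user's key, you can filter it out of public_keys.txt first. This sketch assumes that each line of that file is a standard OpenSSH public key whose comment field is user@host, which is the ssh-keygen default. Check your file before relying on it. The function name keys_for_user is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only the keys whose comment starts with "USER@".
# ASSUMPTION: one OpenSSH key per line in the form "type base64 user@host".
keys_for_user() {
    local user=$1 file=$2
    # The comment is the last whitespace-separated field; print matching lines.
    awk -v u="$user" '$NF ~ ("^" u "@")' "$file"
}
```

For example: `keys_for_user apache /home/alteeve/Desktop/public_keys.txt | ssh root@an-c05n01 "cat >> /root/.ssh/authorized_keys"`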

Open a terminal window as the alteeve user. To do this, log into the dashboard's graphical interface and then open a terminal window by clicking on:

- Applications -> System Tools -> Terminal

At the prompt, type the following:

cat /home/alteeve/Desktop/public_keys.txt | ssh root@an-c05n01 "cat >> /root/.ssh/authorized_keys"

You will be asked to verify the node's SSH fingerprint. If you trust the fingerprint is accurate, type yes.

The authenticity of host '[an-c05n01]:22501 ([216.154.16.237]:22501)' can't be established.

RSA key fingerprint is 44:01:06:85:00:6f:b2:56:51:00:e1:16:af:4c:01:ee.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[an-c05n01]:22501,[216.154.16.237]:22501' (RSA) to the list of known hosts.

The node's fingerprint will be added to the alteeve user's ~/.ssh/known_hosts file. You should not be asked to verify the fingerprint again.

Next, you will need to enter the node's root password.

root@an-c05n01's password:

When you type the password, you will see no feedback at all. If you entered the correct password, the command will simply return you to the prompt. You can verify that this worked by trying to log into the node. You should not be asked for a password.

ssh root@an-c05n01

Last login: Sun Jul 28 20:17:14 2013 from alteeve.ca

an-c05n01:~#

Now exit out to return to the dashboard's terminal.

an-c05n01:~# exit

logout

Connection to an-c05n01 closed.

Now switch to the apache user.

su - apache

Enter the apache user's password. This was set when you ran the an-cdb-install.sh script. There is no default password.

Password:

If the password was correct, you will get the apache user's shell.

-bash-4.1$

Now try using ssh to connect to the node.

ssh root@an-c05n01

As this is a different user, you will again be asked to verify that the SSH fingerprint is accurate. If you trust it, type yes.

The authenticity of host '[an-c05n01]:22501 ([216.154.16.237]:22501)' can't be established.

RSA key fingerprint is 44:01:06:85:00:6f:b2:56:51:00:e1:16:af:4c:01:ee.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[an-c05n01]:22501,[216.154.16.237]:22501' (RSA) to the list of known hosts.

Last login: Sun Jul 28 20:43:46 2013 from alteeve.ca

an-c05n01:~#

Now type exit to return to the dashboard.

exit

logout

Connection to an-c05n01 closed.

That's it!

Repeat this process for your other node, an-c05n02 in this case.
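The `cat ... >> /root/.ssh/authorized_keys` command above appends the keys every time it is run, so repeating it leaves duplicate lines on the node. Duplicates are harmless. If you would like to avoid them, here is a minimal sketch of an append-only-if-missing helper. It assumes you run it on the node itself, after copying public_keys.txt over (with scp, for example). The name append_missing_keys is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append each key from KEYFILE to AUTHFILE only if that
# exact line is not already present, so re-running it adds no duplicates.
append_missing_keys() {
    local keyfile=$1 authfile=$2 key
    touch "$authfile"
    # "|| [ -n ... ]" also handles a last line without a trailing newline.
    while IFS= read -r key || [ -n "$key" ]; do
        [ -z "$key" ] && continue            # skip blank lines
        # Whole-line, fixed-string match; append only when missing.
        grep -qxF -- "$key" "$authfile" || printf '%s\n' "$key" >> "$authfile"
    done < "$keyfile"
}
```

On the node, you would run something like `append_missing_keys /root/public_keys.txt /root/.ssh/authorized_keys`.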

Configuring 'an.conf'

The an.conf file is where you tell AN!CDB which Anvil!s your dashboard will be able to manage.

By default, four sample Anvil!s are pre-loaded in the config. Each Anvil! has four variables that need to be set:

- name; This is the Anvil!'s cluster name. If you don't know the cluster name, you can find it on either node at the top of the cluster.conf file, in the <cluster ... name="..."> element. If you have console access to one of the nodes, you can run this slightly odd-looking bash command:

grep "<cluster " /etc/cluster/cluster.conf | sed 's/.*<cluster .*name="\([^"]*\)".*/\1/'

an-cluster-05

- nodes; This is a comma-separated list of the node names. These are the names (or IPs) that the dashboard will call when connecting to the nodes. So please be sure that the names resolve to the proper IPs. Usually, the names should resolve to the cluster's BCN. In most Anvil!s, the short host name can be used to resolve each node's BCN network IP address. If this is true for you as well, then you can get the host names this way;

cat /etc/cluster/cluster.conf |grep "<clusternode " |perl -pe 's/^.*name="(.*?)[\."].*/\1/' | sed ':a;N;$!ba;s/\n/, /g'

an-c05n01, an-c05n02

- company; This is a free-form descriptive field that you can fill out however you wish. The value here is used in the second column of the Anvil! selection screen. Generally, it is the company, institution or organization name that owns the Anvil!.

- description; This is also a free-form descriptive field that you can fill out however you wish. The value here is used in the third column of the Anvil! selection screen. Generally this is some descriptive field used for the informal name of the Anvil!, where it physically is located, etc.
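The name and nodes lookups above can be combined into one helper that prints a ready-to-paste an.conf stanza. This is a sketch and not part of AN!CDB; the function names are hypothetical. It assumes the XML layout from the tutorial, with each <cluster ...> and <clusternode ...> element on its own line. The quote-bounded `[^"]*` capture stops at the closing quote, so the match cannot run past the name when other attributes follow name=. You supply the entry number, company and description yourself.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical names): build an.conf lines from a node's cluster.conf.
# ASSUMPTION: each <cluster ...> and <clusternode ...> element is on one line.
cluster_name() {
    # [^"]* stops at the closing quote, so later attributes are not captured.
    grep '<cluster ' "$1" | sed 's/.*<cluster .*name="\([^"]*\)".*/\1/'
}
node_short_names() {
    # Keep each node name up to the first '.' or '"', then join with ", ".
    grep '<clusternode ' "$1" | sed 's/.*name="\([^".]*\)[."].*/\1/' | paste -sd, - | sed 's/,/, /g'
}
anconf_stanza() {
    local conf=$1 idx=$2 company=$3 description=$4
    printf 'cluster::%s::name = %s\n' "$idx" "$(cluster_name "$conf")"
    printf 'cluster::%s::nodes = %s\n' "$idx" "$(node_short_names "$conf")"
    printf 'cluster::%s::company = %s\n' "$idx" "$company"
    printf 'cluster::%s::description = %s\n' "$idx" "$description"
}
```

For example, on a node: `anconf_stanza /etc/cluster/cluster.conf 5 "Alteeve's Niche!" "Cluster 05"`. Remember that the nodes value only works if those short names resolve from the dashboard.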

The format of the an.conf configuration file is cluster::X::variable, where X is some unique number. The order of the numbers does not matter at all; it simply provides a way of distinguishing the values of one Anvil! from another.

So, by default, the first two sample Anvil! configurations are;

cluster::1::name = an-cluster-01

cluster::1::nodes = an-c01n01, an-c01n02

cluster::1::company = Alteeve's Niche!

cluster::1::description = Cluster 01

cluster::2::name = cc-cluster-01

cluster::2::nodes = cc-c01n01.remote, cc-c01n02.remote

cluster::2::company = Another Company

cluster::2::description = Cluster 01 (in DC)

If you have only one Anvil!, you can simply delete the three other sample entries, then replace the values in the remaining one with the details of your Anvil!.

There is no (practical) upper limit to the number of Anvil!s that a given dashboard can support.
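To see how the cluster::X::variable keys group together, here is a minimal sketch that reads an an.conf-style file and prints one line per Anvil!. It only illustrates the format and is not the parser AN!CDB uses. It assumes one entry per line and treats lines beginning with # as comments, which is also an assumption. list_anvils is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: list each Anvil! in an an.conf-style file as "X: name (nodes)".
# ASSUMPTIONS: one "cluster::X::variable = value" entry per line, and lines
# starting with '#' are comments. This is not AN!CDB's own parser.
list_anvils() {
    awk '
        /^[ \t]*#/ { next }                       # skip comment lines
        /^cluster::[^:]+::[A-Za-z_]+[ \t]*=/ {
            eq = index($0, "=")
            key = substr($0, 1, eq - 1)            # e.g. "cluster::1::name "
            sub(/[ \t]+$/, "", key)
            value = substr($0, eq + 1)             # everything after the first =
            sub(/^[ \t]+/, "", value)
            sub(/[ \t]+$/, "", value)
            split(key, part, "::")                 # part[2] = X, part[3] = variable
            if (!(part[2] in seen)) { seen[part[2]] = 1; order[++n] = part[2] }
            conf[part[2], part[3]] = value
        }
        END {
            # X is only a grouping key; entries print in the order first seen.
            for (i = 1; i <= n; i++) {
                x = order[i]
                printf "%s: %s (%s)\n", x, conf[x, "name"], conf[x, "nodes"]
            }
        }' "$1"
}
```

Running `list_anvils /etc/an/an.conf` is a quick way to spot an entry with a missing name or nodes value.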

Configuring 'ricci_pw.txt'

In order for AN!CDB to add or remove servers, it must be able to authenticate against the cluster stack. The cluster stack has its own password for this purpose, which is set by assigning a password to each node's ricci system account.

The dashboard keeps these passwords separate from the general Anvil! configuration found in an.conf. Instead, these passwords are saved in /var/www/home/ricci_pw.txt.

This configuration file is much simpler to configure. Set the variable name to the cluster name that you set in an.conf's cluster::X::name value. The password for that Anvil! goes on the right, separated by an = sign.

So continuing our example above, the Anvil!'s cluster name was an-cluster-05. The ricci password on this Anvil! is secret. So the ricci_pw.txt entry would be:

an-cluster-05 = "secret"

Note that simple passwords do not need to be in double-quotes. However, if your password is complex and has special characters or spaces, the double-quotes are needed to ensure that the password is parsed properly.

You can put as many Anvil! entries in this file as you wish. Simply ensure that each entry is on its own line.
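The quoting rule above can be illustrated with a small lookup function. This is a sketch of how such a file could be parsed, not AN!CDB's own code. It assumes name = password lines, ignores whitespace around the =, and strips one pair of surrounding double quotes. ricci_password is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print the ricci password for one cluster name from a
# ricci_pw.txt-style file. ASSUMPTIONS: "name = password" per line,
# whitespace around '=' ignored, one pair of surrounding quotes removed.
ricci_password() {
    local file=$1 want=$2 line name value
    while IFS= read -r line || [ -n "$line" ]; do
        case $line in *=*) ;; *) continue ;; esac   # skip lines without '='
        name=${line%%=*}                            # text before the first '='
        value=${line#*=}                            # text after the first '='
        # Trim leading and trailing whitespace from both parts.
        name=$(printf '%s' "$name" | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
        value=$(printf '%s' "$value" | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
        # Remove one pair of surrounding double quotes, if present.
        case $value in \"*\") value=${value#\"}; value=${value%\"} ;; esac
        if [ "$name" = "$want" ]; then printf '%s\n' "$value"; return 0; fi
    done < "$file"
    return 1                                        # cluster name not found
}
```

The password is taken from everything after the first =, so a quoted password may itself contain spaces or = signs.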

Configuring Virtual Machine Manager

The Virtual Machine Manager application is a separate tool that is available on the AN!CDB appliances. You can think of it as a kind of KVM switch for the servers running on your Anvil!s.

It gives you a way to directly access your servers, just as if you were sitting at a real keyboard, mouse and monitor plugged into a physical server. With it, you can watch your servers boot up and shut down, and you can work on your servers even when they have no network connection at all.

Normal remote management tools, like RDP for Windows servers and SSH for Linux and UNIX, require that the target server be up and running and have a working network connection. Most of the time, these are perfectly fine. Sometimes, though, network settings are configured improperly, bad firewall rules lock out remote access and so on. In these cases you need direct access, and that is where Virtual Machine Manager comes in very handy.

(Screenshots: Virtual Machine Manager)

The steps to add the nodes are (in the same order as the images above):

1. On the dashboard's desktop is an icon called "Virtual Machine Manager".

2. Double-click on it to start the program.

3. Click on "File" -> "Add Connection".

4. Fill in the details for the first node in your Anvil!:

  - Click to check "Connect to remote host".

  - Enter the host name of the node (the same one you put in an.conf earlier).

  - Click to check "Autoconnect".

  - Click on "Connect".

5. The first node should appear in the main window. If you already have servers running on that node, they will appear. Otherwise, they will appear when they are booted or created.

6. Repeat steps #3 and #4 to add the second node.
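Virtual Machine Manager is a front end to libvirt, and a remote connection like the one above corresponds to a libvirt connection URI. The sketch below builds that URI for a node. It assumes the SSH transport, the root user and the system instance, which are typical for a remote connection; check what your Add Connection dialog actually shows. vmm_uri is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: build a libvirt connection URI for a node reached over SSH.
# ASSUMPTION: SSH transport, "system" libvirt instance and the root user by
# default; compare with the URI your Virtual Machine Manager connection uses.
vmm_uri() {
    local host=$1 user=${2:-root}
    printf 'qemu+ssh://%s@%s/system\n' "$user" "$host"
}

# Example (needs virsh on the dashboard and SSH access to the node):
#   virsh -c "$(vmm_uri an-c05n01)" list --all
```

If a node does not show up in Virtual Machine Manager, try the same URI with virsh from the alteeve user's terminal. That can help tell an SSH access problem from a libvirt one.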

That's it! You now have direct access to your servers. Simply run "Virtual Machine Manager" whenever you want. Its use is totally independent of the AN!CDB dashboard proper.

Using AN!CDB

Feel free to jump to the section you are interested in. The order below was compiled as a "story line" of how someone might use AN!CDB to "cold boot" a new Anvil!, create some servers, take a node out for maintenance, manage existing servers, boot a Windows server off of the install disk for recovery, "cold stop" an Anvil! and delete servers.

All screen shots shown here were taken in late July 2013. AN!CDB is always evolving so what you see today may differ somewhat from what you see here.

Connecting to the Dashboard

(Screenshot: Connecting to the Dashboard)

Open a web browser and browse to the dashboard. Here we will use the dashboard's own desktop, so we will browse to http://localhost. If you connect from another computer, browse to the IP address or host name you set up for the dashboard.

When you first connect, you will be asked to log into the dashboard. The default user name is admin. There is no default password, but it is usually set to the same password used when logging into the dashboard's desktop as the alteeve user.

If you do not know what the password is, please contact support. We cannot recover your password, but we can help you reset it.

Choosing the Anvil! to Work On

(Screenshot: Choosing an Anvil!)

If you have configured your dashboard to work on two or more Anvil!s, the first screen you see will ask you to select the one you wish to work on. This list is created by reading your /etc/an/an.conf file on the dashboard. By default, four sample entries are created. You will need to edit the an.conf configuration file to point to your Anvil!(s) before you can use the dashboard to control them.

If the configuration file lists only one Anvil!, you will not see this screen; that Anvil! is selected automatically.

The Loading Screen

(Screenshot: The Loading Screen)

The dashboard does not cache or record an Anvil!'s state. Each time the dashboard needs to display information about the Anvil! and before performing an action on an Anvil!, the dashboard calls the nodes directly and checks their current state.

What this means is that you will frequently see the dashboard loading screen. This is an animated pinwheel asking you to wait while it gathers information.

When you see this screen, please be patient. The dashboard will wait up to 1 hour and 40 minutes, by default, for the cluster to return information. This is far longer than any action should take, of course. If you wait several minutes and the loading screen is still displayed, feel free to click on the "reload" icon to the right of the top logo.

"Cold Start" the Anvil!

If your Anvil!'s nodes are both powered off, you will see the "Cold Start" screen. If your dashboard is connected to the Anvil!'s "Back-Channel Network" (BCN), you will be given the option to turn on both nodes or just one node.

In almost all cases, you will want to start both nodes at the same time.

To start both nodes, simply click on the "Power On Both" button. Should you ever want to turn on just one of the nodes, click on the "Power On" button that is on the same line as the name of the node you wish to boot.

Once you click on "Power On Both", or "Power On" for a single node, you will be asked to confirm the action. Click on the "Confirm" button and the dashboard will connect to each node's IPMI interface and ask it to power on the node.

After confirming the action, the dashboard will do an initial scan to make sure that the nodes can still be turned on. If someone else has already turned them on, the dashboard will abort and tell you why. These checks are done because multiple people can use the dashboard at the same time. In most cases, though, your action will complete successfully.

If you go back to the main page before the nodes have finished booting, you will see one of two screens:

- "No Access"; This tells you that the dashboard can see that the node is powered up, but it was unable to connect via ssh to the node. This means that the node is still powering up, a process that can take several minutes on most modern hardware servers.

- "Connection Refused"; This tells you that the node's operating system is booting up, but not quite ready to accept ssh connections. The node should become accessible very soon. Click on the "refresh" icon at the top-right and you should be able to connect to the Anvil! in just a moment.
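You may prefer to watch for a node to come up from a terminal instead of refreshing the dashboard. A polling loop like the sketch below can help. It assumes bash's /dev/tcp redirection and the coreutils timeout command are available, and it defaults to port 22. The SSH output earlier shows port 22501, so pass whatever port your nodes actually use. wait_for_ssh is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: wait until a node's SSH port accepts TCP connections.
# ASSUMPTIONS: bash (for its /dev/tcp redirection) and coreutils "timeout"
# exist; the port defaults to 22, so pass your own if your nodes use another.
wait_for_ssh() {
    local host=$1 port=${2:-22} tries=${3:-60} interval=${4:-10} i=1
    while [ "$i" -le "$tries" ]; do
        # The redirection opens a TCP connection; a refused or timed-out
        # connection makes the inner bash exit non-zero.
        if timeout 5 bash -c ': > "/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
            echo "$host:$port is accepting connections"
            return 0
        fi
        i=$((i + 1))
        sleep "$interval"
    done
    echo "$host:$port did not accept connections after $tries tries" >&2
    return 1
}
```

For example: `wait_for_ssh an-c05n01 22501`. An open port only means sshd is listening, so the "Connection Refused" state has passed. It does not prove that the dashboard's keys will be accepted.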

Start the Cluster Stack

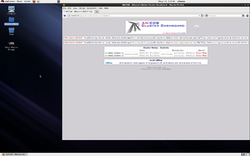

(Screenshots: Starting the Cluster Stack)

To prevent a possible condition called a "Fence Loop", the cluster nodes are not allowed to automatically start the cluster stack when they boot.

After a cold start of the cluster, your Anvil! will not yet be able to run servers. The next step is to start the cluster stack by pressing the "Start Cluster" button. You will be asked to confirm the action; press "Confirm".

The two nodes in the cluster must have their stacks started at the same time (as opposed to one after the other). For this reason, the data coming back from the nodes can be mixed together. The main effect of this is that, sometimes, the formatting of the output can be skewed, as seen in the third screen shot above. This is harmless and safe to ignore.

When you click on the "back" button, you will see that the cluster stack is either starting or fully started. The fourth screen shot above shows the cluster after it has fully started. If you see anything that isn't green, please wait a moment and then click on the "refresh" button. Within a minute or two, everything should become green and you will be ready to proceed.

Building Servers

The KVM/QEMU project maintains a list of tested guest operating systems: http://www.linux-kvm.org/page/Guest_Support_Status

Windows 7

Windows 7 Professional has a limit of two sockets. The hypervisor presents each core as a socket, so Windows 7 will only use up to two cores.

Solaris 11

There is a harmless but verbose bug when using Solaris 11. You will see errors like:

WARNING: /pci@0,0/pci1af4,1100@1,2 (uhci0): No SOF interrupts have been received, this USB UHCI host controller is unusable

Ignore this until the install is complete. Once the OS is installed, run:

rem_drv uhci

The errors will no longer appear.

Solution found here.

Any questions, feedback, advice, complaints or meanderings are welcome.

Alteeve's Niche! | Enterprise Support: Alteeve Support | Community Support

© Alteeve's Niche! Inc. 1997-2024 | Anvil! "Intelligent Availability®" Platform

legal stuff: All info is provided "As-Is". Do not use anything here unless you are willing and able to take responsibility for your own actions.