Red Hat Cluster Service 2 Tutorial - Archive: Difference between revisions


m (Digimer moved page Red Hat Cluster Service 2 Tutorial to Red Hat Cluster Service 2 Tutorial - Archive: Moving to rename to archival.) |


| (5 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

{{howto_header}} | {{howto_header}} | ||

{{ | {{warning|1=This tutorial is officially deprecated and has been replaced by [[AN!Cluster Tutorial 2]]. Please do not follow this tutorial any more.}} | ||

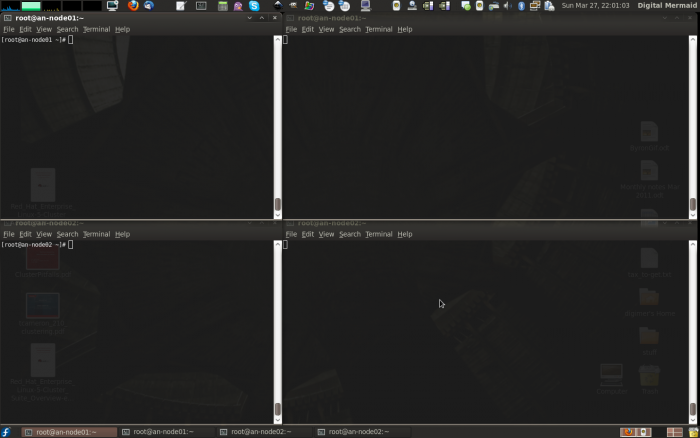

This paper has one goal; | This paper has one goal; | ||

* Creating a 2-node, high-availability cluster hosting [[ | * Creating a 2-node, high-availability cluster hosting [[Xen]] virtual machines using [[RHCS]] "stable 2" with [[DRBD]] and clustered [[LVM]] for synchronizing storage data. | ||

Grab a coffee, put on some nice music and settle in for some geekly fun. | We'll create a dedicated firewall VM to isolate and protect the VM network, discuss provisioning and maintaining Xen VMs, explore some basics of daily administration of a VM cluster and test various failures and how to recover from them. | ||

Grab a coffee, a comfy chair, put on some nice music and settle in for some geekly fun. | |||

= The Task Ahead = | = The Task Ahead = | ||

| Line 15: | Line 17: | ||

== Technologies We Will Use == | == Technologies We Will Use == | ||

* '' | * ''Enterprise Linux 5''; specifically we will be using [[CentOS]] v5.6. | ||

* ''Red Hat Cluster Services'' "Stable" version | * ''Red Hat Cluster Services'' "Stable" version 2. This describes the following core components: | ||

** '' | ** ''OpenAIS''; Provides cluster communications using the [[totem]] protocol. | ||

** ''Cluster Manager'' (<span class="code">[[cman]]</span>); Manages the starting, stopping and managing of the cluster. | ** ''Cluster Manager'' (<span class="code">[[cman]]</span>); Manages the starting, stopping and managing of the cluster. | ||

** ''Resource Manager'' (<span class="code">[[rgmanager]]</span>); Manages cluster resources and services. Handles service recovery during failures. | ** ''Resource Manager'' (<span class="code">[[rgmanager]]</span>); Manages cluster resources and services. Handles service recovery during failures. | ||

** '' | ** ''Cluster Logical Volume Manager'' (<span class="code">[[clvm]]</span>); Cluster-aware (disk) volume manager. Backs [[GFS2]] [[filesystem]]s and [[Xen]] virtual machines. | ||

** ''Global File System'' version 2 (<span class="code">[[gfs2]]</span>); Cluster-aware, concurrently mountable file system. | ** ''Global File System'' version 2 (<span class="code">[[gfs2]]</span>); Cluster-aware, concurrently mountable file system. | ||

* ''Distributed Replicated Block Device'' ([[DRBD]]); Keeps shared data synchronized across cluster nodes. | * ''Distributed Replicated Block Device'' ([[DRBD]]); Keeps shared data synchronized across cluster nodes. | ||

* '' | * ''Xen''; [[Hypervisor]] that controls and supports virtual machines. | ||

== A Note on Patience == | == A Note on Patience == | ||

| Line 31: | Line 33: | ||

You '''must''' have patience. Lots of it. | You '''must''' have patience. Lots of it. | ||

Many technologies can be learned by creating a very simple base and then building on it. The classic "Hello, World!" script created when first learning a programming language is an example of this. Unfortunately, there is no real | Many technologies can be learned by creating a very simple base and then building on it. The classic "Hello, World!" script created when first learning a programming language is an example of this. Unfortunately, there is no real analog to this in clustering. Even the most basic cluster requires several pieces be in place and working together. If you try to rush by ignoring pieces you think are not important, you will almost certainly waste time. A good example is setting aside [[fencing]], thinking that your test cluster's data isn't important. The cluster software has no concept of "test". It treats everything as critical all the time and ''will'' shut down if anything goes wrong. | ||

Take your time, work through these steps, and you will have the foundation cluster sooner than you realize. Clustering is fun '''because''' it is a challenge. | Take your time, work through these steps, and you will have the foundation cluster sooner than you realize. Clustering is fun '''because''' it is a challenge. | ||

| Line 37: | Line 39: | ||

== Prerequisites == | == Prerequisites == | ||

It is assumed that you are familiar with Linux systems administration, specifically [[Red Hat]] [[Enterprise Linux]] and its derivatives. You will need to have somewhat advanced networking experience as well. You should be comfortable working in a terminal (directly or over <span class="code">[[ssh]]</span>). Familiarity with [[XML]] will help, but is not strictly required, as its use here is pretty self-evident | It is assumed that you are familiar with Linux systems administration, specifically [[Red Hat]] [[EL|Enterprise Linux]] and its derivatives. You will need to have somewhat advanced networking experience as well. You should be comfortable working in a terminal (directly or over <span class="code">[[ssh]]</span>). Familiarity with [[XML]] will help, but is not strictly required, as its use here is pretty self-evident. | ||

Patience is vastly more important than any pre-existing skill. | If you feel a little out of your depth at times, don't hesitate to set this tutorial aside. Branch over to the components you feel the need to study more, then return and continue on. Finally, and perhaps most importantly, you '''must''' have patience! If you have a manager asking you to "go live" with a cluster in a month, tell him or her that it simply won't happen. If you rush, you will skip important points and '''you will fail'''. Patience is vastly more important than any pre-existing skill. | ||

== Focus and Goal == | == Focus and Goal == | ||

| Line 47: | Line 47: | ||

There is a different cluster for every problem. Generally speaking though, there are two main problems that clusters try to resolve; Performance and High Availability. Performance clusters are generally tailored to the application requiring the performance increase. There are some general tools for performance clustering, like [[Red Hat]]'s [[LVS]] (Linux Virtual Server) for load-balancing common applications like the [[Apache]] web-server. | There is a different cluster for every problem. Generally speaking though, there are two main problems that clusters try to resolve; Performance and High Availability. Performance clusters are generally tailored to the application requiring the performance increase. There are some general tools for performance clustering, like [[Red Hat]]'s [[LVS]] (Linux Virtual Server) for load-balancing common applications like the [[Apache]] web-server. | ||

This tutorial will focus on High Availability clustering, often shortened to simply '''HA''' and not to be confused with the [[Linux-HA]] "heartbeat" cluster suite, which we will not be using here. The cluster will provide a shared file system and high availability for [[ | This tutorial will focus on High Availability clustering, often shortened to simply '''HA''' and not to be confused with the [[Linux-HA]] "heartbeat" cluster suite, which we will not be using here. The cluster will provide a shared file system and high availability for [[Xen]]-based virtual servers. The goal will be to have the virtual servers live-migrate during planned node outages and automatically restart on a surviving node when the original host node fails. | ||

Below is a ''very'' brief overview; | Below is a ''very'' brief overview; | ||

| Line 59: | Line 59: | ||

== Platform == | == Platform == | ||

This tutorial was written using [[ | This tutorial was written using [[CentOS]] version 5.6, [[x86_64]]. No attempt was made to test on [[i686]] or other [[EL5]] derivatives. That said, there is no reason to believe that this tutorial will not apply to any variant. As much as possible, the language will be distro-agnostic. For reasons of memory constraints, it is advised that you use an [[x86_64]] (64-[[bit]]) platform if at all possible. | ||

Do note that as of [[EL5]].4 and above, significant changes were made to how [[RHCS]] handles virtual machines. It is strongly advised that you use at least version 5.4 or newer while working with this tutorial. | |||

== A Word On Complexity == | == A Word On Complexity == | ||

Introducing the <span class="code"> | Introducing the <span class="code">Fabbione Principle</span> (aka: <span class="code">fabimer theory</span>): | ||

Clustering is not inherently hard, but it is inherently complex. Consider; | Clustering is not inherently hard, but it is inherently complex. Consider; | ||

* Any given program has <span class="code">N</span> bugs. | * Any given program has <span class="code">N</span> bugs. | ||

** [[RHCS]] uses; <span class="code">cman</span>, <span class="code"> | ** [[RHCS]] uses; <span class="code">cman</span>, <span class="code">openais</span>, <span class="code">totem</span>, <span class="code">fenced</span>, <span class="code">rgmanager</span> and <span class="code">dlm</span>. | ||

** We will be adding <span class="code">DRBD</span>, <span class="code">GFS2</span>, <span class="code">CLVM</span> and <span class="code">Xen</span>. | |||

** Right there, we have <span class="code">N^10</span> possible bugs. We'll call this <span class="code">A</span>. | ** Right there, we have <span class="code">N^10</span> possible bugs. We'll call this <span class="code">A</span>. | ||

* A cluster has <span class="code">Y</span> nodes. | * A cluster has <span class="code">Y</span> nodes. | ||

** In our case, <span class="code">2</span> nodes, each with <span class="code">3</span> networks | ** In our case, <span class="code">2</span> nodes, each with <span class="code">3</span> networks. | ||

** The network infrastructure (Switches, routers, etc). | ** The network infrastructure (Switches, routers, etc). If you use managed switches, add another layer of complexity. | ||

** This gives us another <span class="code">Y^(2* | ** This gives us another <span class="code">Y^(2*3)</span>, and then <span class="code">^2</span> again for managed switches. We'll call this <span class="code">B</span>. | ||

* Let's add the human factor. Let's say that a person needs roughly 5 years of cluster experience to be considered an | * Let's add the human factor. Let's say that a person needs roughly 5 years of cluster experience to be considered an expert. For each year less than this, add a <span class="code">Z</span> "oops" factor, <span class="code">(5-Z)^2</span>. We'll call this <span class="code">C</span>. | ||

* So, finally, add up the complexity, using this tutorial's layout, 0-years of experience and managed switches. | * So, finally, add up the complexity, using this tutorial's layout, 0-years of experience and managed switches. | ||

** <span class="code">(N^10) * (Y^(2* | ** <span class="code">(N^10) * (Y^(2*3)^2) * ((5-0)^2) == (A * B * C)</span> == an-unknown-but-big-number. | ||

This isn't meant to scare you away, but it is meant to be a sobering statement. Obviously, those numbers are somewhat artificial, but the point remains. | This isn't meant to scare you away, but it is meant to be a sobering statement. Obviously, those numbers are somewhat artificial, but the point remains. | ||

| Line 101: | Line 103: | ||

== Component; cman == | == Component; cman == | ||

This was, traditionally, the <span class="code">c</span>luster <span class="code">man</span>ager. In the 3.0 series | This was, traditionally, the <span class="code">c</span>luster <span class="code">man</span>ager. In the 3.0 series, <span class="code">cman</span> acts mainly as a [[quorum]] provider, tallying votes and deciding on a critical property of the cluster: quorum. In the 3.1 series, <span class="code">cman</span> will be removed entirely. | ||

== Component; openais / corosync == | |||

OpenAIS is the heart of the cluster. All other components operate through it, and no cluster component can work without it. Further, it is shared between both Pacemaker and RHCS clusters. | |||

In Red Hat clusters, <span class="code">openais</span> is configured via the central <span class="code">cluster.conf</span> file. In Pacemaker clusters, it is configured directly in <span class="code">openais.conf</span>. As we will be building an RHCS, we will only use <span class="code">cluster.conf</span>. That said, (almost?) all <span class="code">openais.conf</span> options are available in <span class="code">cluster.conf</span>. This is important to note as you will see references to both configuration files when searching the Internet. | |||

=== A Little History === | === A Little History === | ||

| Line 117: | Line 115: | ||

There were significant changes between [[RHCS]] version 2, which we are using, and version 3 available on [[EL6]] and recent [[Fedora]]s. | There were significant changes between [[RHCS]] version 2, which we are using, and version 3 available on [[EL6]] and recent [[Fedora]]s. | ||

In the RHCS version 2, there was a component called <span class="code">openais</span> which | In the RHCS version 2, there was a component called <span class="code">openais</span> which handled <span class="code">totem</span>. The OpenAIS project was designed to be the heart of the cluster and was based around the [http://www.saforum.org/ Service Availability Forum]'s [http://www.saforum.org/Application-Interface-Specification~217404~16627.htm Application Interface Specification]. AIS is an open [[API]] designed to provide inter-operable high availability services. | ||

In 2008, it was decided that the AIS specification was overkill for most clustered applications being developed in the open source community. At that point, OpenAIS was split into two projects: Corosync and OpenAIS. The former, Corosync, provides | In 2008, it was decided that the AIS specification was overkill for most clustered applications being developed in the open source community. At that point, OpenAIS was split into two projects: Corosync and OpenAIS. The former, Corosync, provides cluster membership, messaging, and basic APIs for use by clustered applications, while the OpenAIS project is specifically designed to act as an optional add-on to corosync for users who want AIS functionality. | ||

You will see a lot of references to OpenAIS while searching the web for information on clustering. Understanding its evolution will hopefully help you avoid confusion. | You will see a lot of references to OpenAIS while searching the web for information on clustering. Understanding its evolution will hopefully help you avoid confusion. | ||

| Line 125: | Line 123: | ||

== Concept; quorum == | == Concept; quorum == | ||

[[Quorum]] is defined as the minimum set of hosts required in order to provide | [[Quorum]] is defined as the minimum set of hosts required in order to provide service and is used to prevent split-brain situations. | ||

The quorum algorithm used by the RHCS cluster is called "simple majority quorum", which means that more than half of the hosts must be online and communicating in order to provide service. While simple majority quorum is a very common quorum algorithm, other quorum algorithms exist ([[grid quorum]], [[YKD Dyanamic Linear Voting|YKD Dynamic Linear Voting]], etc.). | The quorum algorithm used by the RHCS cluster is called "simple majority quorum", which means that more than half of the hosts must be online and communicating in order to provide service. While simple majority quorum is a very common quorum algorithm, other quorum algorithms exist ([[grid quorum]], [[YKD Dyanamic Linear Voting|YKD Dynamic Linear Voting]], etc.). | ||

The idea behind quorum is that | The idea behind quorum is that whichever group of machines has it can safely start clustered services even when defined members are not accessible. | ||

Take this scenario; | Take this scenario; | ||

| Line 141: | Line 139: | ||

** The partition without quorum will withdraw from the cluster and shut down all cluster services. | ** The partition without quorum will withdraw from the cluster and shut down all cluster services. | ||

When the cluster reconfigures and the partition wins quorum, it will fence the node(s) in the partition without quorum. Once the fencing | When the cluster reconfigures and the partition wins quorum, it will fence the node(s) in the partition without quorum. Once the fencing has been confirmed successful, the partition with quorum will begin accessing clustered resources, like shared filesystems, thus guaranteeing the safety of those shared resources. | ||

This also helps explain why an even <span class="code">50%</span> is not enough to have quorum, a common question for people new to clustering. Using the above scenario, imagine if the split were 2 and 2 nodes. Because neither side can be sure what the other would do, neither can safely proceed. If we allowed an even 50% to have quorum, both partitions might try to take over the clustered services and disaster would soon follow. | This also helps explain why an even <span class="code">50%</span> is not enough to have quorum, a common question for people new to clustering. Using the above scenario, imagine if the split were 2 and 2 nodes. Because neither side can be sure what the other would do, neither can safely proceed. If we allowed an even 50% to have quorum, both partitions might try to take over the clustered services and disaster would soon follow. | ||
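The "more than half" rule can be written down in a few lines. This is only a minimal sketch of simple majority quorum as described above; the function names are invented for illustration and are not part of <span class="code">cman</span> or any other cluster tool.

```python
def quorum_threshold(expected_votes: int) -> int:
    """Smallest number of votes that is strictly more than half of expected_votes."""
    return expected_votes // 2 + 1


def has_quorum(votes_present: int, expected_votes: int) -> bool:
    """True only when the partition holds a strict majority of the votes."""
    return votes_present >= quorum_threshold(expected_votes)


if __name__ == "__main__":
    # A 4-node cluster split 3/1 and then 2/2, one vote per node (assumed).
    for present in (3, 2, 1):
        print(f"{present} of 4 votes -> quorum: {has_quorum(present, 4)}")
```

With four votes the threshold is three, so in a 2 and 2 split neither side qualifies, which is the behaviour the paragraph above describes.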

| Line 153: | Line 151: | ||

== Concept; Virtual Synchrony == | == Concept; Virtual Synchrony == | ||

All cluster operations, like fencing, distributed locking and so on, have to occur in the same order across all nodes. This concept is called "virtual synchrony". | |||

This is provided by <span class="code"> | This is provided by <span class="code">openais</span> using "closed process groups", <span class="code">[[CPG]]</span>. A closed process group is simply a private group of processes in a cluster. Within this closed group, all messages are ordered and consistent. | ||

Let's look at | Let's look at how locks are handled on clustered file systems as an example. | ||

* | * As various nodes want to work on files, they send a lock request to the cluster. When they are done, they send a lock release to the cluster. | ||

** Lock and unlock messages must arrive in the same order to all nodes, regardless of the real chronological order that they were issued. | |||

* | * Let's say one node sends out messages "<span class="code">a1 a2 a3 a4</span>". Meanwhile, the other node sends out "<span class="code">b1 b2 b3 b4</span>". | ||

* | ** All of these messages go to <span class="code">openais</span> which gathers them up, puts them into an order and then sends them out in that order. | ||

** It is totally possible that <span class="code">openais</span> will get the messages as "<span class="code">a2 b1 b2 a1 b3 a3 a4 b4</span>". What order is used is not important, only that the order is consistent across all nodes. | |||

** | ** The <span class="code">openais</span> application will then ensure that all nodes get the messages in the above order, one at a time. All nodes must confirm that they got a given message before the next message is sent to any node. | ||


All of this ordering, within the closed process group, is "virtual synchrony". | |||
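To make the ordering guarantee concrete, here is a toy model of it. It is a sketch only: the <span class="code">Sequencer</span> class and the node names are hypothetical stand-ins for the role the text assigns to <span class="code">openais</span>, and nothing here reflects how the real daemon is implemented.

```python
from typing import Dict, List


class Sequencer:
    """Toy stand-in for the component that imposes one global message order."""

    def __init__(self, node_names: List[str]) -> None:
        # One delivery log per node in the (hypothetical) closed process group.
        self.delivered: Dict[str, List[str]] = {name: [] for name in node_names}

    def broadcast(self, arrival_order: List[str]) -> None:
        # Deliver one message at a time: every node receives message i
        # before any node receives message i + 1.
        for message in arrival_order:
            for log in self.delivered.values():
                log.append(message)


if __name__ == "__main__":
    seq = Sequencer(["node1", "node2"])
    # An arbitrary interleaving of the two senders' messages.
    seq.broadcast(["b1", "a1", "a2", "b2", "a3", "b3", "b4", "a4"])
    for name, log in seq.delivered.items():
        print(name, " ".join(log))
```

Whatever interleaving the sequencer happens to pick, every node ends up with an identical log, which is the property that matters.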

This will tie into fencing and <span class="code">totem</span>, as we'll see in the next sections. | |||

== Concept; Fencing == | == Concept; Fencing == | ||

| Line 190: | Line 180: | ||

So then, let's discuss fencing. | So then, let's discuss fencing. | ||

When a node stops responding, an internal timeout and counter start ticking away. During this time, no | When a node stops responding, an internal timeout and counter start ticking away. During this time, no messages are moving through the cluster because virtual synchrony is no longer possible and the cluster is, essentially, hung. If the node responds in time, the timeout and counter reset and the cluster begins operating properly again. | ||

If, on the other hand, the node does not respond in time, the node will be declared dead and the process of ejecting it from the cluster begins. | |||

The cluster will take a "head count" to see which nodes it still has contact with and will then determine if there are enough votes from those nodes to have quorum. If you are using <span class="code">[[qdisk]]</span>, its heuristics will run and then its votes will be added. If there are sufficient votes for quorum, the cluster will issue a "fence" against the lost node. A fence action is a call sent to <span class="code">fenced</span>, the fence daemon. | |||

Which physical node sends the fence call is somewhat random and irrelevant. What matters is that the call comes from the [[CPG]] which has quorum. | |||

The fence daemon will look at the cluster configuration and get the fence devices configured for the dead node. Then, one at a time and in the order that they appear in the configuration, the fence daemon will call those fence devices, via their fence agents, passing to the fence agent any configured arguments like username, password, port number and so on. If the first fence agent returns a failure, the next fence agent will be called. If the second fails, the third will be called, then the fourth and so on. Once the last (or perhaps only) fence device fails, the fence daemon will retry again, starting back at the start of the list. It will do this indefinitely until one of the fence devices succeeds. | The fence daemon will look at the cluster configuration and get the fence devices configured for the dead node. Then, one at a time and in the order that they appear in the configuration, the fence daemon will call those fence devices, via their fence agents, passing to the fence agent any configured arguments like username, password, port number and so on. If the first fence agent returns a failure, the next fence agent will be called. If the second fails, the third will be called, then the fourth and so on. Once the last (or perhaps only) fence device fails, the fence daemon will retry again, starting back at the start of the list. It will do this indefinitely until one of the fence devices succeeds. | ||
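The retry behaviour described above can be sketched as a simple loop. This is an illustration under assumptions, not the logic of <span class="code">fenced</span> itself: each fence agent is modelled as a plain callable returning <span class="code">True</span> on success, and the <span class="code">max_passes</span> argument is a hypothetical safety valve added only so the example can terminate.

```python
from typing import Callable, List, Optional

# A fence agent is modelled as a callable that returns True on success.
FenceAgent = Callable[[], bool]


def fence_node(agents: List[FenceAgent], max_passes: Optional[int] = None) -> int:
    """Try each agent in configured order, restarting from the first after a
    full pass of failures. Returns the index of the agent that succeeded.

    max_passes is hypothetical; per the text, the daemon retries indefinitely.
    """
    if not agents:
        raise ValueError("at least one fence agent must be configured")
    passes = 0
    while max_passes is None or passes < max_passes:
        for index, agent in enumerate(agents):
            if agent():
                return index  # first success ends the fence operation
        passes += 1
    raise RuntimeError("no fence agent succeeded within max_passes")
```

Leaving <span class="code">max_passes</span> at its default mirrors the "loop forever" behaviour, which is exactly why an untested fence device can hang a cluster.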

| Line 196: | Line 192: | ||

Here's the flow, in point form: | Here's the flow, in point form: | ||

* The | * The <span class="code">openais</span> program collects messages and sends them off, one at a time, to all nodes. | ||

* | * All nodes respond, and the next message is sent. Repeat continuously during normal operation. | ||

* Suddenly, one node stops responding. | * Suddenly, one node stops responding. | ||

** A timeout starts (~<span class="code">238</span>ms by default), and each time the timeout is hit, an error counter increments | ** Communication freezes while the cluster waits for the silent node. | ||

** The silent node responds before the | ** A timeout starts (~<span class="code">238</span>ms by default), and each time the timeout is hit, an error counter increments. | ||

*** The | ** The silent node responds before the counter reaches the limit. | ||

*** The counter is reset to <span class="code">0</span> | |||

*** The cluster operates normally again. | *** The cluster operates normally again. | ||

* Again, one node stops responding. | * Again, one node stops responding. | ||

** Again, the timeout begins. As each totem | ** Again, the timeout begins. As each totem packet times out, a new packet is sent and the error count increments. | ||

** The error counts exceed the limit (<span class="code">4</span> errors is the default); Roughly one second has passed (<span class="code">238ms * 4</span> plus some overhead). | ** The error counts exceed the limit (<span class="code">4</span> errors is the default); Roughly one second has passed (<span class="code">238ms * 4</span> plus some overhead). | ||

** The node is declared dead. | ** The node is declared dead. | ||

| Line 210: | Line 207: | ||

*** If there are too few votes for quorum, the cluster software freezes and the node(s) withdraw from the cluster. | *** If there are too few votes for quorum, the cluster software freezes and the node(s) withdraw from the cluster. | ||

*** If there are enough votes for quorum, the silent node is declared dead. | *** If there are enough votes for quorum, the silent node is declared dead. | ||

**** <span class="code"> | **** <span class="code">openais</span> calls <span class="code">fenced</span>, telling it to fence the node. | ||

**** Which fence device(s) to use, that is, what <span class="code">fence_agent</span> to call and what arguments to pass, is gathered. | **** Which fence device(s) to use, that is, what <span class="code">fence_agent</span> to call and what arguments to pass, is gathered. | ||

**** For each configured fence device: | **** For each configured fence device: | ||

| Line 217: | Line 213: | ||

***** The <span class="code">fence_agent</span>'s exit code is examined. If it's a success, recovery starts. If it failed, the next configured fence agent is called. | ***** The <span class="code">fence_agent</span>'s exit code is examined. If it's a success, recovery starts. If it failed, the next configured fence agent is called. | ||

**** If all (or the only) configured fence fails, <span class="code">fenced</span> will start over. | **** If all (or the only) configured fence fails, <span class="code">fenced</span> will start over. | ||

**** <span class="code">fenced</span> will wait and loop forever until a fence agent succeeds. During this time, '''the cluster is | **** <span class="code">fenced</span> will wait and loop forever until a fence agent succeeds. During this time, '''the cluster is hung'''. | ||

** Once a <span class="code">fence_agent</span> succeeds, the cluster is reconfigured. | |||

*** | *** A new closed process group (<span class="code">cpg</span>) is formed. | ||

*** A new fence domain is formed. | |||

* Normal cluster operation is restored | *** Lost cluster resources are recovered as per <span class="code">rgmanager</span>'s configuration (including file system recovery as needed). | ||

*** Normal cluster operation is restored. | |||

This skipped a few key things, but the general flow of logic should be there. | This skipped a few key things, but the general flow of logic should be there. | ||

This is why fencing is so important. Without a properly configured and tested fence device or devices, the cluster will never successfully fence and the cluster will | This is why fencing is so important. Without a properly configured and tested fence device or devices, the cluster will never successfully fence and the cluster will stay hung forever. | ||

== Component; totem == | == Component; totem == | ||

The <span class="code">[[totem]]</span> protocol defines message passing within the cluster and it is used by <span class="code"> | The <span class="code">[[totem]]</span> protocol defines message passing within the cluster and it is used by <span class="code">openais</span>. A token is passed around all the nodes in the cluster, and the timeout discussed in [[Red_Hat_Cluster_Service_3_Tutorial#Concept;_Fencing|fencing]] above is actually a token timeout. The counter, then, is the number of lost tokens that are allowed before a node is considered dead. | ||
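The interplay of the token timeout and the lost-token counter can be sketched as follows. The defaults of 238 ms and 4 are the figures quoted in the fencing section; the function names are invented for this example, the "plus some overhead" is not modelled, and treating "reaching the limit" as the trigger is an assumption.

```python
from typing import Iterable


def time_to_declare_dead_ms(token_timeout_ms: float = 238.0,
                            lost_token_limit: int = 4) -> float:
    """Lower bound on the time before a silent node is declared dead."""
    return token_timeout_ms * lost_token_limit


def node_declared_dead(token_arrived: Iterable[bool],
                       lost_token_limit: int = 4) -> bool:
    """Walk a sequence of token results (True = token came back in time).

    Consecutive losses are counted; any successful token resets the counter.
    """
    lost = 0
    for arrived in token_arrived:
        if arrived:
            lost = 0  # the node responded, so the counter resets
        else:
            lost += 1
            if lost >= lost_token_limit:  # assumption: reaching the limit is fatal
                return True
    return False


if __name__ == "__main__":
    print(time_to_declare_dead_ms(), "ms")
    print(node_declared_dead([False, False, True, False]))
    print(node_declared_dead([False, False, False, False]))
```

The first sequence recovers before the limit and the counter resets; the second loses four tokens in a row, roughly one second at the quoted defaults.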

The <span class="code">totem</span> protocol supports something called '<span class="code">rrp</span>', '''R'''edundant '''R'''ing '''P'''rotocol. Through <span class="code">rrp</span>, you can add a second backup ring on a separate network to take over in the event of a failure in the first ring. In RHCS, these rings are known as "<span class="code">ring 0</span>" and "<span class="code">ring 1</span>" | The <span class="code">totem</span> protocol supports something called '<span class="code">rrp</span>', '''R'''edundant '''R'''ing '''P'''rotocol. Through <span class="code">rrp</span>, you can add a second backup ring on a separate network to take over in the event of a failure in the first ring. In RHCS, these rings are known as "<span class="code">ring 0</span>" and "<span class="code">ring 1</span>". | ||

== Component; rgmanager == | == Component; rgmanager == | ||

When the cluster membership changes, <span class="code"> | When the cluster membership changes, <span class="code">openais</span> tells the cluster that it needs to recheck its resources. This causes <span class="code">rgmanager</span>, the resource group manager, to run. It will examine what changed and then will start, stop, migrate or recover cluster resources as needed. | ||

Within <span class="code">rgmanager</span>, one or more ''resources'' are brought together as a ''service''. This service is then optionally assigned to a ''failover domain'', a subset of nodes that can have preferential ordering. | Within <span class="code">rgmanager</span>, one or more ''resources'' are brought together as a ''service''. This service is then optionally assigned to a ''failover domain'', a subset of nodes that can have preferential ordering. | ||
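As a rough illustration of "preferential ordering", the sketch below picks the first online node from an ordered list. It is a simplification under assumptions: <span class="code">pick_node</span> is a hypothetical helper, and real failover domains have further options that are not modelled here.

```python
from typing import List, Optional, Set


def pick_node(ordered_domain: List[str], online: Set[str]) -> Optional[str]:
    """Return the most-preferred domain member that is currently online."""
    for node in ordered_domain:
        if node in online:
            return node
    return None  # no member of the domain is available


if __name__ == "__main__":
    domain = ["an-node04", "an-node05"]  # most preferred first
    print(pick_node(domain, {"an-node04", "an-node05"}))
    print(pick_node(domain, {"an-node05"}))
```

When the preferred node is lost, the service lands on the next member in the list; when no member is online, there is nowhere to run it.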

== Component; qdisk == | == Component; qdisk == | ||

| Line 251: | Line 246: | ||

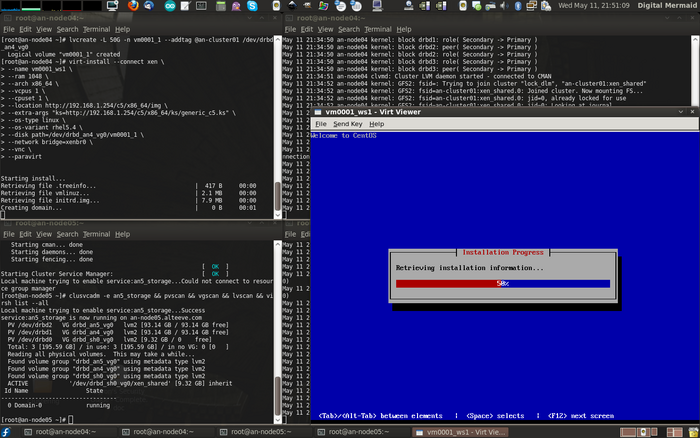

== Component; DRBD == | == Component; DRBD == | ||

[[DRBD]], the Distributed Replicated Block Device, is a technology that takes raw storage from two or more nodes and keeps their data synchronized in real time. It is sometimes described as "RAID 1 over | [[DRBD]], the Distributed Replicated Block Device, is a technology that takes raw storage from two or more nodes and keeps their data synchronized in real time. It is sometimes described as "RAID 1 over Nodes", and that is conceptually accurate. In this tutorial's cluster, DRBD will be used to provide that back-end storage as a cost-effective alternative to a traditional [[SAN]] or [[iSCSI]] device. | ||

Don't worry if this seems illogical at this stage. The main things to look at are the <span class="code">drbdX</span> devices and how they each tie back to a corresponding <span class="code">sdaY</span> device on either node. | To help visualize DRBD's use and role, take a look at how we will implement our [[Red Hat Cluster Service 2 Tutorial#Visualizing Storage|cluster's storage]]. Don't worry if this seems illogical at this stage. The main things to look at are the <span class="code">drbdX</span> devices and how they each tie back to a corresponding <span class="code">sdaY</span> device on either node. | ||

<source lang="text"> | <source lang="text"> | ||

[ Diagram: storage layout on an-node04 and an-node05. Each sdaY partition on both nodes backs the matching drbdX device. ]

sda1 -> /boot
sda2 -> /
sda3 -> <swap>
sda4 (container for sda5 through sda8)
  sda5 -> drbd0 -> drbd_sh0_vg0 -> /dev/drbd_sh0_vg0/xen_shared
  sda6 -> drbd1 -> drbd_an4_vg0 -> /dev/drbd_an4_vg0/vm0001_1
  sda7 -> drbd2 -> drbd_an5_vg0 -> /dev/drbd_an4_vg0/vm0002_1
  sda8 -> drbd3 -> Spare PV for future expansion

Also shown: Example LV for 2nd VM, Example LV for 3rd VM.

</source> | </source> | ||

== Component; CLVM ==

With [[DRBD]] providing the raw storage for the cluster, we must next consider partitions. This is where Clustered [[LVM]], known as [[CLVM]], comes into play.

CLVM is ideal in that by using [[DLM]], the distributed lock manager, it won't allow access to cluster members outside of <span class="code">openais</span>'s closed process group, which, in turn, requires quorum.

It is ideal because it can take one or more raw devices, known as "physical volumes", or simply as [[PV]]s, and combine their raw space into one or more "volume groups", known as [[VG]]s. These volume groups then act just like a typical hard drive and can be "partitioned" into one or more "logical volumes", known as [[LV]]s. These LVs are where [[Xen]]'s [[domU]] virtual machines will exist and where we will create our [[GFS2]] clustered file system.

LVM is particularly attractive because of how incredibly flexible it is. We can easily add new physical volumes later, and then grow an existing volume group to use the new space. This new space can then be given to existing logical volumes, or entirely new logical volumes can be created. This can all be done while the cluster is online, offering an upgrade path with no down time.
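As a rough illustration of that growth path, the sketch below prints the LVM commands that would add a new physical volume, extend a volume group and then grow a logical volume. The device and volume names are borrowed from the storage diagram purely as placeholders, and the <span class="code">+10G</span> size is an arbitrary assumption. The commands are only echoed, not executed.

```bash
#!/bin/bash
# Dry-run sketch: prints the LVM commands rather than running them.
# All names and the size below are placeholders; adjust to your cluster.
NEW_PV="/dev/drbd3"        # assumed spare DRBD device
VG="drbd_an4_vg0"          # assumed existing clustered volume group
LV="vm0001_1"              # assumed existing logical volume
GROW_BY="+10G"             # arbitrary example size

grow_plan() {
    echo "pvcreate ${NEW_PV}"                       # initialize the new PV
    echo "vgextend ${VG} ${NEW_PV}"                 # add the PV to the VG
    echo "lvextend -L ${GROW_BY} /dev/${VG}/${LV}"  # hand the space to the LV
}

grow_plan
```

Printing the plan first makes it easy to review the exact devices involved before anything touches the shared storage.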

== Component; GFS2 ==

With [[DRBD]] providing the cluster's raw storage space, and [[CLVM|Clustered LVM]] providing the logical partitions, we can now look at the clustered file system. This is the role of the Global File System version 2, known simply as [[GFS2]].

It works much like a standard filesystem, with user-land tools like <span class="code">mkfs.gfs2</span>, <span class="code">fsck.gfs2</span> and so on. The major difference is that it and <span class="code">clvmd</span> use the cluster's [[DLM|distributed locking mechanism]] provided by the <span class="code">dlm_controld</span> daemon. Once formatted, the GFS2-formatted partition can be mounted and used by any node in the cluster's [[CPG|closed process group]]. All nodes can then safely read from and write to the data on the partition simultaneously.
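To make the formatting step a little more concrete, here is a hedged dry-run sketch that prints what a <span class="code">mkfs.gfs2</span> call and the matching mount might look like. The cluster name <span class="code">an-cluster</span>, the file system name and the mount point are assumptions made up for this example; the cluster name would need to match whatever your cluster is actually called, and one journal is needed for each node that will mount the file system.

```bash
#!/bin/bash
# Dry-run sketch: echoes the commands instead of formatting anything.
CLUSTER_NAME="an-cluster"                 # hypothetical cluster name
FS_NAME="xen_shared"                      # hypothetical lock table name
JOURNALS=2                                # one journal per node in a 2-node cluster
DEVICE="/dev/drbd_sh0_vg0/xen_shared"     # LV name taken from the storage diagram
MOUNT_POINT="/xen_shared"                 # hypothetical mount point

gfs2_plan() {
    # -p selects the locking protocol, -t sets <cluster>:<fsname>, -j the journal count
    echo "mkfs.gfs2 -p lock_dlm -j ${JOURNALS} -t ${CLUSTER_NAME}:${FS_NAME} ${DEVICE}"
    echo "mount -t gfs2 ${DEVICE} ${MOUNT_POINT}"
}

gfs2_plan
```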


== Component; DLM ==

One of the major roles of a cluster is to provide [[DLM|distributed locking]] on clustered storage. In fact, storage software can not be clustered without using [[DLM]], as provided by the <span class="code">dlm_controld</span> daemon and using <span class="code">openais</span>'s virtual synchrony via [[CPG]].

Through DLM, all nodes accessing clustered storage are guaranteed to get [[POSIX]] locks, called <span class="code">plock</span>s, in the same order across all nodes. Both [[CLVM]] and [[GFS2]] rely on DLM, though other clustered storage, like OCFS2, uses it as well.

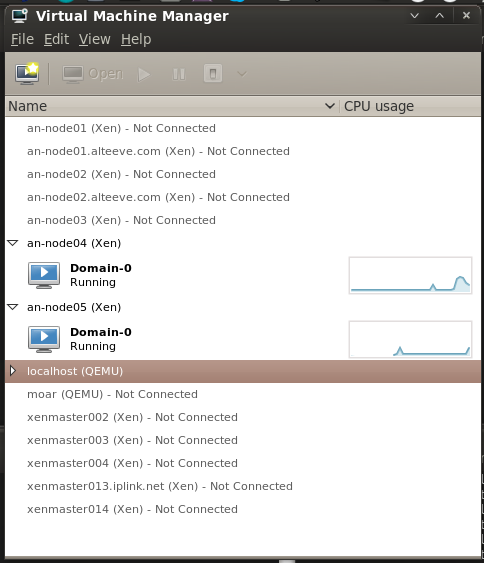

== Component; Xen ==

Two of the most popular open-source virtualization platforms available in the Linux world today are [[Xen]] and [[KVM]]. The former is maintained by [http://www.citrix.com/xenserver Citrix] and the latter by [http://www.redhat.com/solutions/virtualization/ Red Hat]. It would be difficult to say which is "better", as they're both very good. Xen can be argued to be more mature, while KVM is the "official" solution supported by Red Hat in [[EL6]].

We will be using the Xen [[hypervisor]] and a "host" virtual server called [[dom0]]. In Xen, every machine is a virtual server, including the system you installed when you built the server. This is possible thanks to a small Xen micro-operating system that initially boots, then starts up your original installed operating system as a virtual server with special access to the underlying hardware and hypervisor management tools.

The rest of the virtual servers in a Xen environment are collectively called "[[domU]]" virtual servers. These will be the highly-available resource that will migrate between nodes during failure events in our cluster. | |||

= Base Setup = | |||

Before we can look at the cluster, we must first build two cluster nodes and then install the operating system. | |||

== Hardware Requirements == | |||

The bare minimum requirements are; | |||

* All hardware must be supported by [[EL5]]. It is strongly recommended that you check compatibility before making any purchases. | |||

* A dual-core [[CPU]] with hardware virtualization support. | |||

* Three network cards; At least one should be gigabit or faster. | |||

* One hard drive. | |||

* 2 [[GiB]] of [[RAM]] | |||

* A [[fence|fence device]]. This can be an [[IPMI]]-enabled server, a [http://nodeassassin.org Node Assassin], a [http://www.apc.com/products/resource/include/techspec_index.cfm?base_sku=AP7900 switched PDU] or similar. | |||

This tutorial was written using the following hardware: | |||

* AMD Athlon [http://products.amd.com/en-us/DesktopCPUDetail.aspx?id=610 II X4 600e Processor] | |||

* ASUS [http://www.asus.com/product.aspx?P_ID=LVmksAnszmVimOOp M4A785T-M/CSM] | |||

* 4GB Kingston [http://www.ec.kingston.com/ecom/configurator_new/partsinfo.asp?root=&LinkBack=&ktcpartno=KVR1333D3N9K2/4G KVR1333D3N9K2/4G], 4GB (2x2GB) DDR3-1333, Non-ECC | |||

* Seagate [http://www.seagate.com/ww/v/index.jsp?vgnextoid=70f4bfafecadd110VgnVCM100000f5ee0a0aRCRD ST9500420AS] 2.5" SATA HDD | |||

* 2x Intel [http://www.intel.com/products/desktop/adapters/gigabit-ct/gigabit-ct-overview.htm Pro/1000CT EXPI9301CT] PCIe NICs | |||

* [[Node Assassin v1.1.4]] | |||

This is not an endorsement of the above hardware. I put a heavy emphasis on minimizing power consumption and bought what was within my budget. This hardware was never meant to be put into production, but instead was chosen to serve the purpose of my own study and for creating this tutorial. What you ultimately choose to use, provided it meets the minimum requirements, is entirely up to you and your requirements. | |||

{{note|1=I use three physical [[NIC]]s, but you can get away with two by merging the storage and back-channel networks, which we will discuss shortly. If you are really in a pinch, you could create three aliases on one interface and isolate them using [[VLAN]]s. If you go this route, please ensure that your VLANs are configured and working before beginning this tutorial. Pay close attention to multicast traffic.}}

== Pre-Assembly ==

Before you assemble your nodes, take a moment to record the [[MAC]] addresses of each network interface and then note where each interface is physically installed. This will help you later when configuring the networks. I generally create a simple text file with the MAC addresses, the interface I intend to assign to it and where it physically is located. | |||

<source lang="text">

-=] an-node04 | |||

48:5B:39:3C:53:14 # eth0 - onboard interface

00:1B:21:72:9B:5A # eth1 - right-most PCIe interface | |||

00:1B:21:72:96:EA # eth2 - left-most PCIe interface | |||

-=] an-node05 | |||

48:5B:39:3C:53:13 # eth0 - onboard interface | |||

00:1B:21:72:99:AB # eth1 - right-most PCIe interface | |||

00:1B:21:72:96:A6 # eth2 - left-most PCIe interface | |||

</source> | |||
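If the nodes are already booted into some Linux environment, a small helper like the one below can produce a first draft of that text file. This is only a sketch: it assumes the kernel exposes each interface's address under <span class="code">/sys/class/net</span>, and <span class="code">list_macs</span> is a name invented for this example. You will still need to note the physical location of each card by hand.

```bash
#!/bin/bash
# Print "MAC # interface" for every non-loopback interface.
# The optional first argument overrides the sysfs directory (handy for testing).
list_macs() {
    local sysfs_root="${1:-/sys/class/net}"
    local dev name
    for dev in "${sysfs_root}"/*; do
        [ -r "${dev}/address" ] || continue        # skip entries without a MAC
        name="${dev##*/}"
        [ "${name}" = "lo" ] && continue           # ignore the loopback device
        printf '%s # %s\n' "$(tr 'a-f' 'A-F' < "${dev}/address")" "${name}"
    done
}

list_macs
```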

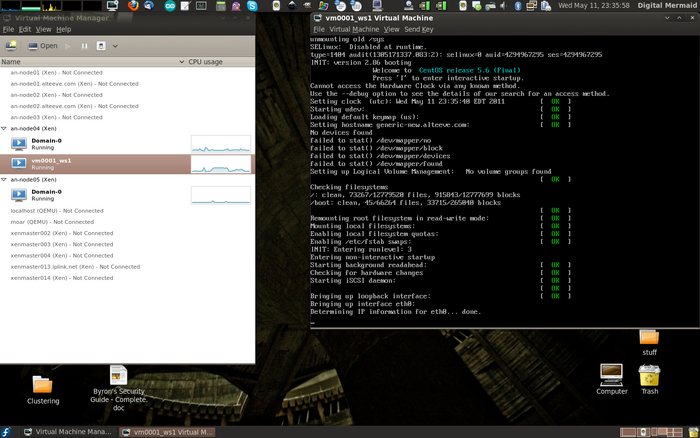

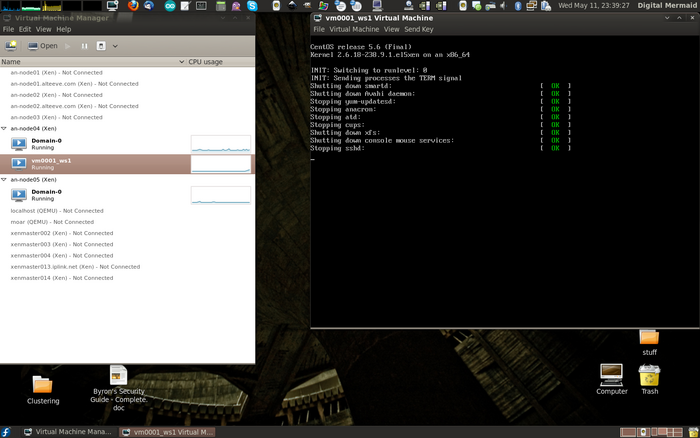

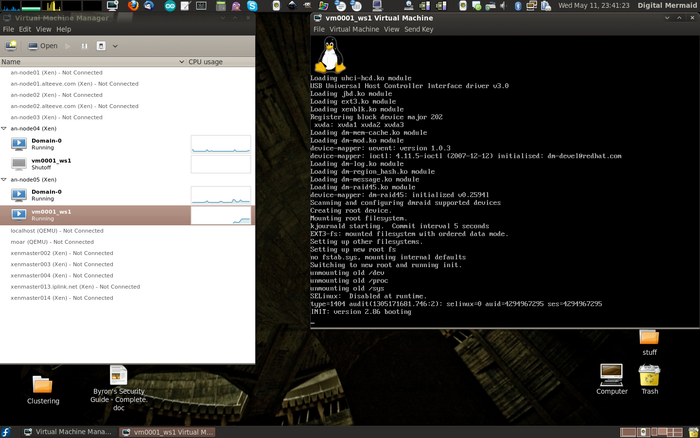

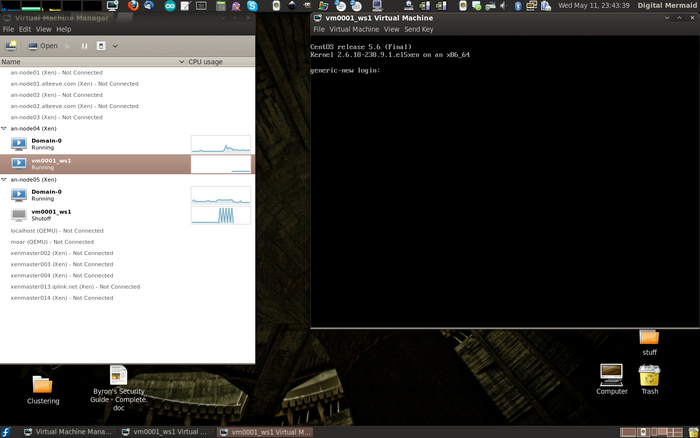

== OS Install == | |||

Later steps will include packages to install, so the initial OS install can be minimal. I like to change the default run-level to <span class="code">3</span>, remove <span class="code">rhgb quiet</span> from the [[grub]] menu, disable the firewall and disable [[SELinux]]. In a production cluster, you will want to use firewalling and <span class="code">selinux</span>, but until you finish studying, leave it off to keep things simple. | |||

{{note|1=Before [[EL5]].4, you could not use SELinux. It is now possible to use it, and it is recommended that you do so in any production cluster.}} | |||

{{note|1=Ports and protocols to open in a firewall will be discussed later in the networking section.}} | |||

I like to minimize and automate my installs as much as possible. To that end, I run a little [[Setting Up a PXE Server in Fedora|PXE]] server on my network and use a [[kickstart]] script to automate the install. Here is a simple one for use on a single-drive node: | |||

* [[generic_el5_node.ks]] | |||

If you decide to manually install [[EL5]] on your nodes, please try to keep the installation as small as possible. The fewer packages installed, the fewer sources of problems and vectors for attack. | |||

== Post Install OS Changes == | |||

This section discusses changes that I recommend but that are not required. If you wish to adapt any of the steps below, please do so, but be sure to keep the changes consistent throughout the implementation of this tutorial.

=== Network Planning === | |||

The most important change that is recommended is to get your nodes into a consistent networking configuration. This will prove very handy when trying to keep track of your networks and where they're physically connected. This becomes exponentially more helpful as your cluster grows. | |||

The first step is to understand the three networks we will be creating. Once you understand their role, you will need to decide which interface on the nodes will be used for each network. | |||

==== Cluster Networks ====

The three networks are; | |||

{|class="wikitable" | |||

!Network | |||

!Acronym | |||

!Use | |||

|- | |||

|Back-Channel Network | |||

|'''BCN''' | |||

|Private cluster communications, virtual machine migrations, fence devices | |||

|- | |||

|Storage Network | |||

|'''SN''' | |||

|Used exclusively for storage communications. Possible to use as totem's redundant ring. | |||

|- | |||

|Internet-Facing Network | |||

|'''IFN''' | |||

|Internet-polluted network. No cluster, storage or cluster device communication. | |||

|} | |||

==== Things To Consider ==== | |||

When planning which interfaces to connect to each network, consider the following, in order of importance: | |||

* If your nodes have [[IPMI]] and an interface sharing a physical [[RJ-45]] connector, this must be on the '''Back-Channel Network'''. The reasoning is that having your fence device accessible on the '''Internet-Facing Network''' poses a ''major'' security risk. Having the IPMI interface on the '''Storage Network''' can cause problems if a fence is fired and the network is saturated with storage traffic. | |||

* The lowest-latency network interface should be used as the '''Back-Channel Network'''. The cluster is maintained by [[multicast]] messaging between the nodes using something called the [[totem]] protocol. Any delay in the delivery of these messages can risk causing a failure and ejection of affected nodes when no actual failure existed. This will be discussed in greater detail later.

* The network with the most raw bandwidth should be used for the '''Storage Network'''. All disk writes must be sent across the network and committed to the remote nodes before the write is declared complete. This causes the network to become the disk I/O bottleneck. Using a network with jumbo frames and high raw throughput will help minimize this bottleneck.

* During the live migration of virtual machines, the VM's RAM is copied to the other node using the '''BCN'''. For this reason, the second fastest network should be used for back-channel communication. However, these copies can saturate the network, so care must be taken to ensure that cluster communications get higher priority. This can be done using a managed switch. If you can not ensure priority for totem multicast, then be sure to configure Xen later to use the storage network for migrations. | |||

* The remaining, slowest interface should be used for the '''IFN'''.

==== Planning the Networks ==== | |||

This paper will use the following setup. Feel free to alter the interface to network mapping and the [[IP]] [[subnet]]s used to best suit your needs. For reasons completely my own, I like to start the final [[octet]] of my cluster IPs at <span class="code">71</span> for node 1 and then increment up from there. This is entirely arbitrary, so please use whatever makes sense to you. The remainder of this tutorial will follow the convention below:

{|class="wikitable"
!Network
!Interface
!Subnet
|-
|'''IFN'''
|<span class="code">eth0</span>
|<span class="code">192.168.1.0/24</span>
|-
|'''SN'''
|<span class="code">eth1</span>
|<span class="code">192.168.2.0/24</span>
|-
|'''BCN'''
|<span class="code">eth2</span>
|<span class="code">192.168.3.0/24</span>
|}

This translates to the following per-node configuration: | |||

{|class="wikitable" | |||

!colspan="2"| | |||

!colspan="2"|an-node04 | |||

!colspan="2"|an-node05 | |||

|- | |||

! | |||

!Interface | |||

!IP Address | |||

!Host Name(s) | |||

!IP Address | |||

!Host Name(s) | |||

|- | |||

!IFN | |||

|align="center"|<span class="code">eth0</span> | |||

|<span class="code">192.168.1.74</span> | |||

|<span class="code">an-node04.ifn</span> | |||

|<span class="code">192.168.1.75</span> | |||

|<span class="code">an-node05.ifn</span> | |||

|- | |||

!SN | |||

|align="center"|<span class="code">eth1</span> | |||

|<span class="code">192.168.2.74</span> | |||

|<span class="code">an-node04.sn</span> | |||

|<span class="code">192.168.2.75</span> | |||

|<span class="code">an-node05.sn</span> | |||

|- | |||

!BCN | |||

|align="center"|<span class="code">eth2</span> | |||

|<span class="code">192.168.3.74</span> | |||

|<span class="code">an-node04 an-node04.alteeve.ca an-node04.bcn</span> | |||

|<span class="code">192.168.3.75</span> | |||

|<span class="code">an-node05 an-node05.alteeve.ca an-node05.bcn</span> | |||

|} | |||
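The addressing convention in the table above can be expressed as a tiny helper. This is just a sketch of the arithmetic; <span class="code">node_ip</span> is a hypothetical function name, and it assumes the third octet selects the network (<span class="code">1</span> for the IFN, <span class="code">2</span> for the SN, <span class="code">3</span> for the BCN) while the final octet is <span class="code">70</span> plus the node number.

```bash
#!/bin/bash
# node_ip <network octet> <node number>
#   network octet: 1 = IFN, 2 = SN, 3 = BCN
#   node number:   4 for an-node04, 5 for an-node05, and so on
node_ip() {
    local net_octet="$1"
    local node_num="$2"
    echo "192.168.${net_octet}.$((70 + node_num))"
}

# Print the three addresses planned for an-node04.
for net in 1 2 3; do
    echo "an-node04, network ${net}: $(node_ip "${net}" 4)"
done
```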

=== Network Configuration === | |||

Now that we've planned the network, it is time to implement it.

==== Warning About Managed Switches ==== | |||

{{warning|1=The vast majority of cluster problems end up being network related. The hardest ones to diagnose are usually [[multicast]] issues.}} | |||

If you use a managed switch, be careful about enabling and configuring [[Multicast IGMP Snooping]] or [[Spanning Tree Protocol]]. They have been known to cause problems by not allowing multicast packets to reach all nodes fast enough or at all. This can cause somewhat random break-downs in communication between your nodes, leading to seemingly random fences and DLM lock timeouts. If your switches support [[PIM Routing]], be sure to use it! | |||

If you have problems with your cluster not forming, or seemingly random fencing, try using a cheap [http://dlink.ca/products/?pid=230 unmanaged] switch. If the problem goes away, you are most likely dealing with a managed switch configuration problem.

==== Disable Firewalling ==== | |||

To "keep things simple", we will disable all firewalling on the cluster nodes. This is not recommended in production environments, obviously, so below will be a table of ports and protocols to open when you do get into production. Until then, we will simply use <span class="code">chkconfig</span> to disable <span class="code">iptables</span> and <span class="code">ip6tables</span>. | |||

{{note|1=Cluster 2 does not support [[IPv6]], so you can skip or ignore it if you wish. I like to disable it just to be certain that it can't cause issues though.}} | |||

<source lang="bash"> | <source lang="bash"> | ||

chkconfig iptables off | |||

chkconfig ip6tables off | |||

/etc/init.d/iptables stop | |||

/etc/init.d/ip6tables stop | |||

</source> | </source> | ||

Now confirm that they are off by having <span class="code">iptables</span> and <span class="code">ip6tables</span> list their rules. | |||

<source lang="bash"> | <source lang="bash"> | ||

iptables -L | |||

</source> | </source> | ||

<source lang="text"> | |||

Chain INPUT (policy ACCEPT) | |||

target prot opt source destination | |||

Chain FORWARD (policy ACCEPT) | |||

target prot opt source destination | |||

Chain OUTPUT (policy ACCEPT) | |||

target prot opt source destination | |||

</source> | </source> | ||

<source lang="bash"> | <source lang="bash"> | ||

ip6tables -L | |||

</source> | </source> | ||

<source lang="text"> | |||

Chain INPUT (policy ACCEPT) | |||

target prot opt source destination | |||

Chain FORWARD (policy ACCEPT) | |||

target prot opt source destination | |||

Chain OUTPUT (policy ACCEPT) | |||

target prot opt source destination | |||

</source> | |||

When you do prepare to go into production, these are the protocols and ports you need to open between cluster nodes. Remember to allow multicast communications as well! | |||

{|class="wikitable" | |||

!Port | |||

!Protocol | |||

!Component | |||

|- | |||

|<span class="code">5404</span>, <span class="code">5405</span> | |||

|[[UDP]] | |||

|<span class="code">[[cman]]</span> | |||

|- | |||

|<span class="code">8084</span>

|[[TCP]] | |||

|<span class="code">[[luci]]</span> | |||

|- | |||

|<span class="code">11111</span> | |||

|[[TCP]] | |||

|<span class="code">[[ricci]]</span> | |||

|- | |||

|<span class="code">14567</span> | |||

|[[TCP]] | |||

|<span class="code">[[gnbd]]</span> | |||

|- | |||

|<span class="code">16851</span> | |||

|[[TCP]] | |||

|<span class="code">[[modclusterd]]</span> | |||

|- | |||

|<span class="code">21064</span> | |||

|[[TCP]] | |||

|<span class="code">[[dlm]]</span> | |||

|- | |||

|<span class="code">50006</span>, <span class="code">50008</span>, <span class="code">50009</span> | |||

|[[TCP]] | |||

|<span class="code">[[ccsd]]</span> | |||

|- | |||

|<span class="code">50007</span> | |||

|[[UDP]] | |||

|<span class="code">[[ccsd]]</span> | |||

|} | |||
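When the time comes to re-enable the firewall, rules along the following lines could be a starting point. This is a dry-run sketch that only prints <span class="code">iptables</span> commands for a subset of the table above (<span class="code">cman</span>, <span class="code">ricci</span>, <span class="code">modclusterd</span> and <span class="code">dlm</span>). Restricting the source to the BCN subnet and matching multicast by the <span class="code">224.0.0.0/4</span> destination range are assumptions of this example, not requirements of the cluster software.

```bash
#!/bin/bash
# Dry-run sketch: prints candidate iptables rules, does not apply them.
BCN_SUBNET="192.168.3.0/24"   # assumed to be the back-channel network

firewall_plan() {
    echo "iptables -A INPUT -s ${BCN_SUBNET} -p udp -m multiport --dports 5404,5405 -j ACCEPT"  # cman
    echo "iptables -A INPUT -s ${BCN_SUBNET} -p tcp --dport 11111 -j ACCEPT"                    # ricci
    echo "iptables -A INPUT -s ${BCN_SUBNET} -p tcp --dport 16851 -j ACCEPT"                    # modclusterd
    echo "iptables -A INPUT -s ${BCN_SUBNET} -p tcp --dport 21064 -j ACCEPT"                    # dlm
    echo "iptables -A INPUT -s ${BCN_SUBNET} -d 224.0.0.0/4 -j ACCEPT"                          # multicast
}

firewall_plan
```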

==== Disable NetworkManager, Enable network ==== | |||

The <span class="code">NetworkManager</span> daemon is an excellent daemon in environments where a system connects to a variety of networks. The <span class="code">NetworkManager</span> daemon handles changing the networking configuration whenever it senses a change in the network state, like when a cable is unplugged or a wireless network comes or goes. As useful as this is on laptops and workstations, it can be detrimental in a cluster. | |||

To prevent the networking from changing once we've got it setup, we want to replace <span class="code">NetworkManager</span> daemon with the <span class="code">network</span> initialization script. The <span class="code">network</span> script will start and stop networking, but otherwise it will leave the configuration alone. This is ideal in servers, and doubly-so in clusters given their sensitivity to transient network issues. | |||

Start by removing <span class="code">NetworkManager</span>: | |||

<source lang="bash"> | <source lang="bash"> | ||

yum remove NetworkManager NetworkManager-glib NetworkManager-gnome NetworkManager-devel NetworkManager-glib-devel | |||

</source> | </source> | ||

Now you want to ensure that <span class="code">network</span> starts with the system. | |||

<source lang="bash"> | <source lang="bash"> | ||

chkconfig network on | |||

</source> | </source> | ||

==== Setup /etc/hosts ====

The <span class="code">/etc/hosts</span> file, by default, will resolve the hostname to the <span class="code">lo</span> (<span class="code">127.0.0.1</span>) interface. The cluster, however, uses this name to decide which interface to use for the [[totem]] protocol (and thus all cluster communications). To this end, we will remove the hostname from <span class="code">127.0.0.1</span> and instead put it on the IP of our '''BCN''' interface. We will also add entries for all other networks for both nodes in the cluster along with entries for the fence device(s).

Once done, the edited <span class="code">/etc/hosts</span> file should be suitable for copying to both nodes in the cluster. | |||

<source lang="bash"> | <source lang="bash"> | ||

vim /etc/hosts

</source> | </source> | ||

<source lang="text">

# Do not remove the following line, or various programs

# that require network functionality will fail. | |||

127.0.0.1 localhost.localdomain localhost | |||

::1 localhost6.localdomain6 localhost6 | |||

192.168.1.74 an-node04.ifn | |||

192.168.2.74 an-node04.sn | |||

192.168.3.74 an-node04 an-node04.bcn an-node04.alteeve.ca | |||

192.168.1.75 an-node05.ifn | |||

192.168.2.75 an-node05.sn | |||

192.168.3.75 an-node05 an-node05.bcn an-node05.alteeve.ca | |||

192.168.3.61 fence_na01.alteeve.ca # Node Assassin | |||

</source> | </source> | ||
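A quick sanity check after editing the file is to confirm that the node's name no longer maps to the loopback address. The helpers below are a minimal sketch of that check; <span class="code">host_ip</span> and <span class="code">check_not_loopback</span> are names invented for this example, and they only parse the hosts file directly rather than going through the system resolver.

```bash
#!/bin/bash
# host_ip <name> [hosts file]: print the first IP whose entry lists <name>.
host_ip() {
    local name="$1"
    local hosts_file="${2:-/etc/hosts}"
    awk -v n="${name}" '
        {
            sub(/#.*/, "")                       # strip comments
            for (i = 2; i <= NF; i++)
                if ($i == n) { print $1; exit }  # first match wins
        }
    ' "${hosts_file}"
}

# check_not_loopback <name> [hosts file]: succeed only if <name> is found
# and does not map to a loopback address.
check_not_loopback() {
    local ip
    ip="$(host_ip "$1" "${2:-/etc/hosts}")"
    case "${ip}" in
        ""|127.*|::1) return 1 ;;
        *)            return 0 ;;
    esac
}

if check_not_loopback "$(uname -n)"; then
    echo "hostname maps to a non-loopback address"
else
    echo "hostname is missing from /etc/hosts or maps to loopback"
fi
```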

==== Mapping Interfaces to ethX Names ==== | |||


Chances are good that the assignment of <span class="code">ethX</span> interface names to your physical network cards is not ideal. There is no strict technical reason to change the mapping, but it will make your life a lot easier if all nodes use the same <span class="code">ethX</span> names for the same subnets. | |||


The actual process of changing the mapping is a little involved. For this reason, there is a dedicated mini-tutorial which you can find below. Please jump to it and then return once your mapping is as you like it. | |||

* [[Changing the ethX to Ethernet Device Mapping in EL5]] | |||

==== Set IP Addresses ==== | |||

The last step in setting up the network interfaces is to manually assign the IP addresses and define the subnets for the interfaces. This involves directly editing the <span class="code">/etc/sysconfig/network-scripts/ifcfg-ethX</span> files. There are a large set of options that can be set in these configuration files, but most are outside the scope of this tutorial. To get a better understanding of the available options, please see: | |||

* [http://docs.redhat.com/docs/en-US/Red_Hat_Enterprise_Linux/5/html/Deployment_Guide/s1-networkscripts-interfaces.html Red Hat's Interface Configuration Guide]

{{note|1=Later on, we will be creating two bridges, <span class="code">xenbr0</span> and <span class="code">xenbr2</span>, to which we will then connect [[dom0]]'s <span class="code">eth0</span> and <span class="code">eth2</span>. These bridges then become available to [[Xen]]'s [[domU]] VMs. Bridge options and arguments can be found in the link above.}}

Here are the three configuration files from <span class="code">an-node04</span> which you can use as guides. Please '''do not''' copy these over your files! Doing so will cause your interfaces to fail outright as every interface's [[MAC]] address is unique. Adapt these to suit your needs.

<source lang="bash">
vim /etc/sysconfig/network-scripts/ifcfg-eth0
</source>

<source lang="text">
# Realtek Semiconductor Co., Ltd. RTL8111/8168B PCI Express Gigabit Ethernet controller
HWADDR=48:5B:39:3C:53:14
DEVICE=eth0
BOOTPROTO=static
ONBOOT=yes
IPADDR=192.168.1.74
NETMASK=255.255.255.0
GATEWAY=192.168.1.254
DNS1=192.139.81.117
DNS2=192.139.81.1
</source>

<source lang="bash">
vim /etc/sysconfig/network-scripts/ifcfg-eth1
</source>

<source lang="text">
# Intel Corporation 82574L Gigabit Network Connection
HWADDR=00:1B:21:72:9B:5A
DEVICE=eth1
BOOTPROTO=static
ONBOOT=yes
IPADDR=192.168.2.74
NETMASK=255.255.255.0
</source>

<source lang="bash">
vim /etc/sysconfig/network-scripts/ifcfg-eth2
</source>

<source lang="text">
# Intel Corporation 82574L Gigabit Network Connection
HWADDR=00:1B:21:72:96:EA
DEVICE=eth2
BOOTPROTO=static
ONBOOT=yes
IPADDR=192.168.3.74
NETMASK=255.255.255.0
</source>

If you do not want to use the <span class="code">DNSx=</span> options, you will need to set up the <span class="code">/etc/resolv.conf</span> file for [[DNS]] resolution. You can learn more about this file's purpose by reading its [[man]] page; <span class="code">man resolv.conf</span>.

Finally, restart <span class="code">network</span> and you should have your interfaces set up properly.

<source lang="bash"> | <source lang="bash"> | ||

/etc/init.d/network restart | |||

</source> | </source> | ||

<source lang="text">

Shutting down interface eth0: [ OK ] | |||

Shutting down interface eth1: [ OK ] | |||

Shutting down interface eth2: [ OK ] | |||

Shutting down loopback interface: [ OK ] | |||

Bringing up loopback interface: [ OK ] | |||

Bringing up interface eth0: [ OK ] | |||

Bringing up interface eth1: [ OK ] | |||

Bringing up interface eth2: [ OK ] | |||

</source> | </source> | ||

You can verify your configuration using the <span class="code">ifconfig</span> tool. The output below is from <span class="code">an-node04</span>. | |||

<source lang="bash"> | <source lang="bash"> | ||

ifconfig | |||

</source> | </source> | ||

<source lang="text">

eth0 Link encap:Ethernet HWaddr 48:5B:39:3C:53:14 | |||

inet addr:192.168.1.74 Bcast:192.168.1.255 Mask:255.255.255.0 | |||

inet6 addr: fe80::4a5b:39ff:fe3c:5314/64 Scope:Link | |||

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 | |||

RX packets:3974 errors:0 dropped:0 overruns:0 frame:0 | |||

TX packets:1810 errors:0 dropped:0 overruns:0 carrier:0 | |||

collisions:0 txqueuelen:1000 | |||

RX bytes:1452567 (1.3 MiB) TX bytes:237057 (231.5 KiB) | |||

Interrupt:246 Base address:0xe000 | |||

eth1 Link encap:Ethernet HWaddr 00:1B:21:72:9B:5A | |||

inet addr:192.168.2.74 Bcast:192.168.2.255 Mask:255.255.255.0 | |||

inet6 addr: fe80::21b:21ff:fe72:9b5a/64 Scope:Link | |||

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 | |||

RX packets:117 errors:0 dropped:0 overruns:0 frame:0 | |||

TX packets:62 errors:0 dropped:0 overruns:0 carrier:0 | |||

collisions:0 txqueuelen:1000 | |||

RX bytes:30766 (30.0 KiB) TX bytes:16018 (15.6 KiB) | |||

Interrupt:17 Memory:feae0000-feb00000 | |||

eth2 Link encap:Ethernet HWaddr 00:1B:21:72:96:EA | |||

inet addr:192.168.3.74 Bcast:192.168.3.255 Mask:255.255.255.0 | |||

inet6 addr: fe80::21b:21ff:fe72:96ea/64 Scope:Link | |||

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 | |||

RX packets:54 errors:0 dropped:0 overruns:0 frame:0 | |||

TX packets:60 errors:0 dropped:0 overruns:0 carrier:0 | |||

collisions:0 txqueuelen:1000 | |||

RX bytes:11492 (11.2 KiB) TX bytes:15638 (15.2 KiB) | |||

Interrupt:16 Memory:fe9e0000-fea00000 | |||

lo Link encap:Local Loopback | |||

inet addr:127.0.0.1 Mask:255.0.0.0 | |||

inet6 addr: ::1/128 Scope:Host | |||

UP LOOPBACK RUNNING MTU:16436 Metric:1 | |||

RX packets:34 errors:0 dropped:0 overruns:0 frame:0 | |||

TX packets:34 errors:0 dropped:0 overruns:0 carrier:0 | |||

collisions:0 txqueuelen:0 | |||

RX bytes:9268 (9.0 KiB) TX bytes:9268 (9.0 KiB) | |||

</source> | </source> | ||

{{note|1=You may see a <span class="code">virbr0</span> interface. You can safely ignore it, we will remove it later.}} | |||

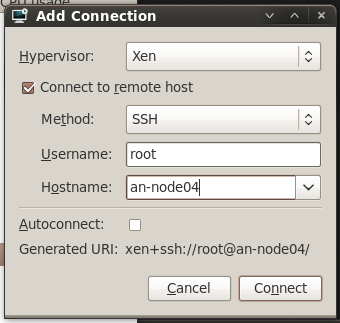

=== Setting up SSH === | |||

Setting up [[SSH]] shared keys will allow your nodes to pass files between one another and execute commands remotely without needing to enter a password. This will be needed later when we want to enable applications like <span class="code">libvirtd</span> and <span class="code">virt-manager</span>. | |||

SSH is, on its own, a very big topic. If you are not familiar with SSH, please take some time to learn about it before proceeding. A great first step is the [http://en.wikipedia.org/wiki/Secure_Shell Wikipedia] entry on SSH, as well as the SSH [[man]] page; <span class="code">man ssh</span>.

Keeping [[SSH]] connections straight in your head can be a bit confusing. When you connect to a remote machine, you start the connection on your machine as the user you are logged in as. This is the source user. When you call the remote machine, you tell the machine what user you want to log in as. This is the remote user.

You will need to create an SSH key for each source user on each node, and then you will need to copy the newly generated public key to each remote machine's user directory that you want to connect to. In this example, we want to connect to either node, from either node, as the <span class="code">root</span> user. So we will create a key for each node's <span class="code">root</span> user and then copy the generated public key to the ''other'' node's <span class="code">root</span> user's directory. | |||

For each user, on each machine you want to connect '''from''', run: | |||

<source lang="bash">
# The '2047' is just to screw with brute-forces a bit. :)
ssh-keygen -t rsa -N "" -b 2047 -f ~/.ssh/id_rsa
</source>

<source lang="text">
Generating public/private rsa key pair.
Created directory '/root/.ssh'.
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
a1:65:a9:50:bb:15:ae:b1:6e:06:12:4a:29:d1:68:f3 root@an-node04.alteeve.ca
</source>

This will create two files: the private key called <span class="code">~/.ssh/id_rsa</span> and the public key called <span class="code">~/.ssh/id_rsa.pub</span>. The private key '''''must never''''' be group or world readable! That is, it should be set to mode <span class="code">0600</span>.
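As a quick sanity check, the mode of the private key can be inspected and tightened from a script. The following is only a sketch: the function name <span class="code">check_key_mode</span> is made up for this example, and it assumes the GNU <span class="code">stat</span> shipped with CentOS.

<source lang="bash">
# Hypothetical helper (not part of OpenSSH): make sure a private key is
# readable and writable by its owner only.
# Assumes GNU coreutils 'stat' (the '-c' option), as found on CentOS.
check_key_mode() {
    local key="$1"
    local mode

    mode=$(stat -c '%a' "$key") || return 1
    if [ "$mode" != "600" ]; then
        echo "Fixing mode on $key (was $mode)"
        chmod 600 "$key" || return 1
    fi

    # Print the final mode so the caller can see the result.
    stat -c '%a' "$key"
}
</source>

It would be called as <span class="code">check_key_mode ~/.ssh/id_rsa</span>.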

The two files should look like: | |||

'''Private key''': | |||

<source lang="bash">
cat ~/.ssh/id_rsa
</source>

<source lang="text"> | |||

-----BEGIN RSA PRIVATE KEY----- | |||

MIIEnwIBAAKCAQBTNg6FZyDKm4GAm7c+F2enpLWy+t8ZZjm4Z3Q7EhX09ukqk/Qm | |||

MqprtI9OsiRVjce+wGx4nZ8+Z0NHduCVuwAxG0XG7FpKkUJC3Qb8KhyeIpKEcfYA | |||

tsDUFnWddVF8Tsz6dDOhb61tAke77d9E01NfyHp88QBxjJ7w+ZgB2eLPBFm6j1t+ | |||

K50JHwdcFfxrZFywKnAQIdH0NCs8VaW91fQZBupg4OGOMpSBnVzoaz2ybI9bQtbZ | |||

4GwhCghzKx7Qjz20WiqhfPMfFqAZJwn0WXfjALoioMDWavTbx+J2HM8KJ8/YkSSK | |||

dDEgZCItg0Q2fC35TDX+aJGu3xNfoaAe3lL1AgEjAoIBABVlq/Zq+c2y9Wo2q3Zd | |||

yjJsLrj+rmWd8ZXRdajKIuc4LVQXaqq8kjjz6lYQjQAOg9H291I3KPLKGJ1ZFS3R | |||

AAygnOoCQxp9H6rLHw2kbcJDZ4Eknlf0eroxqTceKuVzWUe3ev2gX8uS3z70BjZE | |||

+C6SoydxK//w9aut5UJN+H5f42p95IsUIs0oy3/3KGPHYrC2Zgc2TIhe25huie/O | |||

psKhHATBzf+M7tHLGia3q682JqxXru8zhtPOpEAmU4XDtNdL+Bjv+/Q2HMRstJXe | |||

2PU3IpVBkirEIE5HlyOV1T802KRsSBelxPV5Y6y5TRq+cEwn0G2le1GiFBjd0xQd | |||

0csCgYEA2BWkxSXhqmeb8dzcZnnuBZbpebuPYeMtWK/MMLxvJ50UCUfVZmA+yUUX | |||

K9fAUvkMLd7V8/MP7GrdmYq2XiLv6IZPUwyS8yboovwWMb+72vb5QSnN6LAfpUEk | |||

NRd5JkWgqRstGaUzxeCRfwfIHuAHikP2KeiLM4TfBkXzhm+VWjECgYBilQEBHvuk | |||

LlY2/1v43zYQMSZNHBSbxc7R5mnOXNFgapzJeFKvaJbVKRsEQTX5uqo83jRXC7LI | |||

t14pC23tpW1dBTi9bNLzQnf/BL9vQx6KFfgrXwy8KqXuajfv1ECH6ytqdttkUGZt | |||

TE/monjAmR5EVElvwMubCPuGDk9zC7iQBQKBgG8hEukMKunsJFCANtWdyt5NnKUB | |||

X66vWSZLyBkQc635Av11Zm8qLusq2Ld2RacDvR7noTuhkykhBEBV92Oc8Gj0ndLw | |||

hhamS8GI9Xirv7JwYu5QA377ff03cbTngCJPsbYN+e/uj6eYEE/1X5rZnXpO1l6y | |||

G7QYcrLE46Q5YsCrAoGAL+H5LG4idFEFTem+9Tk3hDUhO2VpGHYFXqMdctygNiUn | |||

lQ6Oj7Z1JbThPJSz0RGF4wzXl/5eJvn6iPbsQDpoUcC1KM51FxGn/4X2lSCZzgqr | |||

vUtslejUQJn96YRZ254cZulF/YYjHyUQ3byhDRcr9U2CwUBi5OcbFTomlvcQgHcC | |||

gYEAtIpaEWt+Akz9GDJpKM7Ojpk8wTtlz2a+S5fx3WH/IVURoAzZiXzvonVIclrH | |||

5RXFiwfoXlMzIulZcrBJZfTgRO9A2v9rE/ZRm6qaDrGe9RcYfCtxGGyptMKLdbwP | |||

UW1emRl5celU9ZEZRBpIVTES5ZVWqD2RkkkNNJbPf5F/x+w= | |||

-----END RSA PRIVATE KEY----- | |||

</source>

'''Public key''' (wrapped to make it more readable): | |||

<source lang="bash">
cat ~/.ssh/id_rsa.pub
</source>

<source lang="text">

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQBTNg6FZyDKm4GAm7c+F2enpLWy+t8Z | |||

Zjm4Z3Q7EhX09ukqk/QmMqprtI9OsiRVjce+wGx4nZ8+Z0NHduCVuwAxG0XG7FpK | |||

kUJC3Qb8KhyeIpKEcfYAtsDUFnWddVF8Tsz6dDOhb61tAke77d9E01NfyHp88QBx | |||

jJ7w+ZgB2eLPBFm6j1t+K50JHwdcFfxrZFywKnAQIdH0NCs8VaW91fQZBupg4OGO | |||

MpSBnVzoaz2ybI9bQtbZ4GwhCghzKx7Qjz20WiqhfPMfFqAZJwn0WXfjALoioMDW | |||

avTbx+J2HM8KJ8/YkSSKdDEgZCItg0Q2fC35TDX+aJGu3xNfoaAe3lL1 root@an | |||

-node04.alteeve.ca | |||

</source> | |||

Copy the public key and then <span class="code">ssh</span> normally into the remote machine as the <span class="code">root</span> user. Create a file called <span class="code">~/.ssh/authorized_keys</span> and paste in the key. | |||

From '''an-node04''', type: | |||

<source lang="bash">
ssh root@an-node05
</source>

<source lang="text"> | |||

The authenticity of host 'an-node05 (192.168.3.75)' can't be established. | |||

RSA key fingerprint is 55:58:c3:32:e4:e6:5e:32:c1:db:5c:f1:36:e2:da:4b. | |||

Are you sure you want to continue connecting (yes/no)? yes | |||

Warning: Permanently added 'an-node05,192.168.3.75' (RSA) to the list of known hosts. | |||

Last login: Fri Mar 11 20:45:58 2011 from 192.168.1.202 | |||

</source>

You will now be logged into <span class="code">an-node05</span> as the <span class="code">root</span> user. Create the <span class="code">~/.ssh/authorized_keys</span> file and paste into it the public key from <span class="code">an-node04</span>. If the remote machine's user hasn't used <span class="code">ssh</span> yet, their <span class="code">~/.ssh</span> directory will not exist and you will need to create it first.

(Wrapped to make it more readable) | |||

<source lang="bash">
cat ~/.ssh/authorized_keys
</source>

<source lang="text"> | |||

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQBTNg6FZyDKm4GAm7c+F2enpLWy+t8Z | |||

Zjm4Z3Q7EhX09ukqk/QmMqprtI9OsiRVjce+wGx4nZ8+Z0NHduCVuwAxG0XG7FpK | |||

kUJC3Qb8KhyeIpKEcfYAtsDUFnWddVF8Tsz6dDOhb61tAke77d9E01NfyHp88QBx | |||

jJ7w+ZgB2eLPBFm6j1t+K50JHwdcFfxrZFywKnAQIdH0NCs8VaW91fQZBupg4OGO | |||

MpSBnVzoaz2ybI9bQtbZ4GwhCghzKx7Qjz20WiqhfPMfFqAZJwn0WXfjALoioMDW | |||

avTbx+J2HM8KJ8/YkSSKdDEgZCItg0Q2fC35TDX+aJGu3xNfoaAe3lL1 root@an | |||

-node04.alteeve.ca | |||

</source> | |||
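Pasting by hand works, but the same result can be had from a script. The sketch below is meant to be run on the remote node once the public key file has been copied there. The function name <span class="code">install_pubkey</span> and its two arguments are invented for this example; the <span class="code">700</span> and <span class="code">600</span> modes are the conventional restrictive permissions for these paths.

<source lang="bash">
# Hypothetical helper: append a public key to a user's authorized_keys.
#   $1 = file holding the public key (one line)
#   $2 = home directory of the user who will accept the key
install_pubkey() {
    local pubkey_file="$1"
    local ssh_dir="$2/.ssh"
    local auth="$ssh_dir/authorized_keys"

    # Create the directory and file if missing, with restrictive modes.
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
    touch "$auth" && chmod 600 "$auth" || return 1

    # Append the key only if that exact line is not already present,
    # so running this twice does not duplicate the entry.
    if ! grep -qxF -f "$pubkey_file" "$auth"; then
        cat "$pubkey_file" >> "$auth"
    fi
}
</source>

For the <span class="code">root</span> user this would be called as <span class="code">install_pubkey /path/to/id_rsa.pub /root</span>, where the path to the copied key is whatever you chose.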

Now log out and then log back into the remote machine. This time, the connection should succeed without prompting for a password!

Various applications will connect to the other node using different methods and networks. Each connection, when first established, will prompt you to confirm that you trust the remote host's key, as we saw above. Many programs can't handle this prompt and will simply fail to connect. So to get around this, I will <span class="code">ssh</span> into both nodes using all hostnames. This will populate a file called <span class="code">~/.ssh/known_hosts</span>. Once you do this on one node, you can simply copy the <span class="code">known_hosts</span> file to the <span class="code">~/.ssh/</span> directories of the other node and of any other users.

I simply paste this into a terminal, answering <span class="code">yes</span> and then immediately exiting from the <span class="code">ssh</span> session. This is a bit tedious, I admit. Take the time to check the fingerprints as they are displayed to you. It is a bad habit to blindly type <span class="code">yes</span>. | |||

Alter this to suit your host names. | |||

<source lang="bash">
ssh root@an-node04 && \
ssh root@an-node04.alteeve.ca && \
ssh root@an-node04.bcn && \
ssh root@an-node04.sn && \
ssh root@an-node04.ifn && \
ssh root@an-node05 && \
ssh root@an-node05.alteeve.ca && \
ssh root@an-node05.bcn && \
ssh root@an-node05.sn && \
ssh root@an-node05.ifn
</source>
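If typing the chain out by hand proves error-prone, the list of names can be generated with a loop instead. This is just a sketch that prints the names; the function name <span class="code">list_node_names</span> is made up, and the nodes and suffixes are the ones used in this tutorial.

<source lang="bash">
# Print every name each node is reachable by. The node names and
# suffixes below are the ones used in this tutorial; alter them to
# match your own host names.
list_node_names() {
    local node suffix
    for node in an-node04 an-node05; do
        for suffix in "" .alteeve.ca .bcn .sn .ifn; do
            echo "${node}${suffix}"
        done
    done
}
</source>

The output can then drive the connections, for example <span class="code">for name in $(list_node_names); do ssh root@"$name" exit; done</span>. You will still be shown each fingerprint and asked to confirm it, which is the point.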

Once you've done this on one node, you can simply copy the <span class="code">~/.ssh/known_hosts</span> file to the other node. In my case, I ran the above commands on <span class="code">an-node04</span>, so I will copy the file to <span class="code">an-node05</span>.

<source lang="bash">
rsync -av root@192.168.1.74:/root/.ssh/known_hosts ~/.ssh/
</source>

<source lang="text"> | |||

receiving file list ... done | |||

known_hosts | |||

sent 96 bytes received 2165 bytes 4522.00 bytes/sec | |||

total size is 7629 speedup is 3.37 | |||

</source> | |||

== Installing Packages We Will Use == | |||

There are several packages we will need. They can all be installed in one go with the following command. | |||

If you have a slow or metered Internet connection, you may want to alter <span class="code">/etc/yum.conf</span> and change <span class="code">keepcache=0</span> to <span class="code">keepcache=1</span> before installing packages. This way, you can then run your updates and installs on one node and then <span class="code">rsync</span> the downloaded files from the first node to the second node. Once done, when you run the updates and installs on that second node, nothing more will be downloaded. To copy the cached [[RPM]]s, simply run <span class="code">rsync -av /var/cache/yum root@an-node05:/var/cache/</span> (assuming you did the initial downloads from <span class="code">an-node04</span>). | |||
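The <span class="code">keepcache</span> change can also be made from the shell. The following is a minimal sketch: the function name <span class="code">enable_keepcache</span> is invented for this example, and it assumes the file contains a plain <span class="code">keepcache=0</span> line as described above.

<source lang="bash">
# Hypothetical helper: turn on yum's package cache in the given config
# file (defaults to /etc/yum.conf). A backup copy is kept alongside it
# with a '.orig' suffix.
enable_keepcache() {
    local conf="${1:-/etc/yum.conf}"

    cp -p "$conf" "$conf.orig" || return 1
    sed -i 's/^keepcache=0$/keepcache=1/' "$conf" || return 1

    # Show the resulting setting so the change can be confirmed.
    grep '^keepcache=' "$conf"
}
</source>

Running <span class="code">enable_keepcache</span> with no argument edits <span class="code">/etc/yum.conf</span>; pass a different path to try it on a copy first.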

{{note|1=If you are using [[RHEL]] 5.x proper, you will need to manually download and install the [[DRBD]] RPMs from [http://www.linbit.com/support/ Linbit].}}

<source lang="bash">
yum install cman openais rgmanager lvm2-cluster gfs2-utils xen xen-libs kmod-xenpv \
            drbd83 kmod-drbd83-xen virt-manager virt-viewer libvirt libvirt-python \
            python-virtinst luci ricci ntp bridge-utils system-config-cluster
</source>

This will drag in a good number of dependencies, which is fine. | |||

== Keeping Time In Sync == | |||

It is very important that time on both nodes be kept in sync. The way to do this is to set up [[NTP]], the network time protocol. I like to use the <span class="code">tick.redhat.com</span> time server, though you are free to substitute your preferred time source.

First, add the time server to the NTP configuration file, <span class="code">/etc/ntp.conf</span>, by appending the following lines to the end of it.

<source lang="bash">
echo server tick.redhat.com$'\n'restrict tick.redhat.com mask 255.255.255.255 nomodify notrap noquery >> /etc/ntp.conf
tail -n 4 /etc/ntp.conf
</source>

<source lang="text">
# Specify the key identifier to use with the ntpq utility.
#controlkey 8
server tick.redhat.com
restrict tick.redhat.com mask 255.255.255.255 nomodify notrap noquery
</source>
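Running the <span class="code">echo</span> above a second time would append the same two lines again. If that is a concern, a guarded version might look like the sketch below. The function name <span class="code">add_ntp_server</span> is made up for this example; the lines it writes are the same ones shown above.

<source lang="bash">
# Hypothetical helper: add a time server to an ntp.conf-style file, but
# only if it is not already listed.
#   $1 = time server host name
#   $2 = config file (defaults to /etc/ntp.conf)
add_ntp_server() {
    local server="$1"
    local conf="${2:-/etc/ntp.conf}"

    # -x matches the whole line, -F treats the dots literally.
    if grep -qxF "server ${server}" "$conf"; then
        echo "${server} is already configured in ${conf}"
        return 0
    fi

    printf 'server %s\nrestrict %s mask 255.255.255.255 nomodify notrap noquery\n' \
        "$server" "$server" >> "$conf"
}
</source>

It would be called as <span class="code">add_ntp_server tick.redhat.com</span>.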

Now make sure that the <span class="code">ntpd</span> service starts on boot, then start it manually. | |||

<source lang="bash">
chkconfig ntpd on
/etc/init.d/ntpd start
</source>

<source lang="text"> | |||

Starting ntpd: [ OK ] | |||

</source> | |||

== Altering Boot Up == | |||

{{note|1=The next two steps are optional.}}

There are two changes I like to make on my nodes. These are not required, but I find it helps to keep things as simple as possible, particularly in the earlier learning and testing stages.

=== Changing the Default Run-Level === | |||

If you choose not to implement it, please change any references to <span class="code">/etc/rc3.d</span> to <span class="code">/etc/rc5.d</span> later in this tutorial.

I prefer to minimize the running daemons and apps on my nodes for two reasons: performance and security. One of the simplest ways to minimize the number of running programs is to change the run-level to <span class="code">3</span> by editing <span class="code">/etc/inittab</span>. This tells the node when it boots not to start the graphical interface and instead simply boot to a <span class="code">[[bash]]</span> shell.

This change is actually quite simple. Simply edit <span class="code">/etc/inittab</span> and change the line <span class="code">id:5:initdefault:</span> to <span class="code">id:3:initdefault:</span>. | |||

<source lang="bash">
cp /etc/inittab /etc/inittab.orig
sed -i 's/id:5:initdefault/id:3:initdefault/g' /etc/inittab
diff -u /etc/inittab.orig /etc/inittab
</source>

<source lang="diff"> | |||

--- /etc/inittab.orig 2011-05-01 20:54:35.000000000 -0400 | |||

+++ /etc/inittab 2011-05-01 20:56:43.000000000 -0400 | |||

@@ -15,7 +15,7 @@ | |||

# 5 - X11 | |||

# 6 - reboot (Do NOT set initdefault to this) | |||

# | |||

-id:5:initdefault: | |||

+id:3:initdefault: | |||

# System initialization. | |||

si::sysinit:/etc/rc.d/rc.sysinit | |||

</source>
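To confirm which run-level a node will boot into without reading the whole file, the <span class="code">initdefault</span> entry can be pulled out with <span class="code">awk</span>. This is only a sketch, and the function name <span class="code">default_runlevel</span> is invented for this example.

<source lang="bash">
# Hypothetical helper: print the default run-level from an inittab file
# (defaults to /etc/inittab). Entries have the colon-separated form
# id:runlevels:action:process, so the run-level is the second field of
# the line whose action is 'initdefault'. Commented lines are skipped.
default_runlevel() {
    local inittab="${1:-/etc/inittab}"
    awk -F: '$3 == "initdefault" && $1 !~ /^#/ { print $2 }' "$inittab"
}
</source>

After the edit above, calling <span class="code">default_runlevel</span> should print <span class="code">3</span>.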