What is an Anvil! and why do I care?

The most basic answer to this question is;

- An Anvil! is one of the most resilient platforms for running servers on. If you have a server that, if it failed, would really hurt, you probably want to put it on an Anvil! system.

Now, the longer answer.

Overview

The Anvil! platform is an Intelligent Availability platform. This means it has the following core features;

- It proactively protects hosted servers. It predicts failures where possible and accounts for changing threats.

- Full stack redundancy. All components can be completely removed and replaced without a maintenance window.

- Availability is prioritized before all else. Thin provisioning is not supported and compartmentalization is prioritized.

- Performance is kept consistent in a degraded state. It's not enough to be up if your performance falls below what is needed.

- Focus on automation. Even the best people make mistakes, so automate as much as possible.

It's natural to compare the Anvil! platform to a cloud solution.

- Both host virtual servers.

- Both simplify the creation and management of servers.

This is not valid, though.

- Cloud solutions generally focus on resource utilization efficiency.

- Cloud solutions generally integrate with web-based services.

- Cloud solutions often run "agents" or custom kernels on the guest servers.

On the other hand;

- The Anvil! puts nothing before stability and availability. Period.

- The Anvil! is designed to be self-contained and is fully functional without any external network connection.

- The Anvil! emulates traditional hardware as completely as possible, requiring no special software inside the guest servers.

Before we proceed, let's cover some terminology;

- Fault Tolerant; This is the gold-standard of availability. A fault-tolerant system is one that can suffer an unexpected failure without causing any interruption.

- High Availability; HA systems are ones that can detect a fault and recover automatically and rapidly, but the fault still causes an interruption.

- Intelligent Availability™; IA systems use intelligent software to detect changing threats and autonomously take action to mitigate them.

In short; Intelligent Availability tries to bridge the gap, taking action so that traditionally HA systems behave in a more FT manner.

| Note: Caveat: "Intelligent Availability" is a trademarked term created by Alteeve in order to define the next generation of availability. Any system can be made IA and describe itself as such. Alteeve's purpose in trademarking the term was to avoid the diluting of the definition. |

Let's get into detail now!

How Does The Anvil! Work?

Many projects and products promise you the world. It's made us cynical, too.

Important Note!

Nothing is perfect.

Period.

The Anvil! is no different. We know we're not perfect and that is a strength.

Once you think you are perfect, that you have it all figured out, you will stop looking for ways to improve. You will start to make assumptions. You will eat humble pie and it will taste bitter.

Full Stack Redundancy - An Overview

In traditional "High Availability", full-stack redundancy is not required. Many "HA" solutions are simply a SAN and a few nodes, which leaves several single points of failure.

- A bad SAN firmware update, pulling the wrong drive from a degraded array and so on could take the storage down.

- A tripped UPS or PDU breaker can sever all power.

- A failed ethernet switch could sever network communication.

The Anvil! has been designed to have no single point of failure. In fact, a guiding ethos of "Intelligent Availability" says that it is not enough to survive a failure; you must also survive the recovery.

"So does this rule out blade chassis? SANs, too?"

Yup.

Consider this;

- Your blade/SAN chassis has redundant power backplanes. A fault causes a short and physically damages one of the power rails. You survive this fault successfully.

How do you repair it?

You would have to cut all power to safely open that chassis to remove and replace the shorted circuit board. This means you need to schedule a maintenance window and hope that, once you open the chassis, you in fact have all the parts you need to effect repair.

The Anvil! solves this problem by sticking to old fashioned, mechanically and electrically separated components.

- Standard rack mount machines for the nodes.

- Standard rack mount ethernet switches.

It's not sexy, but if there was an electrical short like above, we simply pull the dead part out and replace it. There is no need to take the backup component offline, so you can repair or replace without a maintenance window.

- "What about DC-sized UPSes? Can I use them instead of a couple smaller UPSes?"

No.

Or rather, yes, sure, but you will still need the two small UPSes.

We've seen DC-sized UPSes fail numerous times. Causes include human error, like forgetting to put the power into bypass before maintenance, firmware faults and other failures. In all cases, all power was lost and all systems went offline.

First, a pair of UPSes will insulate you against a failure in one UPS, as the second UPS is fully independent and sufficient to power the full Anvil!. Second, the Anvil! uses the data from the UPSes in the ScanCore decision engine, which we will talk about later.

- "What's wrong with a SAN? $Vendor tells me they are totally redundant!"

Well, maybe. Consider the issues discussed above; Can the vendor's SAN solve those problems? We've not seen it. Further, SANs generally use fiber-channel or iSCSI network connections to access the storage. This is avoidable complexity, and complexity is the enemy of availability.

Now, if you really want to use a SAN for capacity or performance reasons that local (direct-attached) storage can't provide, then fine. In this case, to be compatible with the Anvil!, use two SANs and attach each SAN's LUN to either node and treat them as DAS after that.

Storage Replication

This part of the Anvil! doesn't have hardware, per-se, but it needs to be discussed right off the top.

We discussed in the previous section how most SANs have a fundamental flaw, from a pure availability perspective. So then, how do we solve this problem? Replicated Storage!

In the Anvil!, we have a special partition on each node, and both nodes in the node pair have the same sized partition. We use DRBD, an open-source software package, to "mirror" the data on these partitions. If you have ever built a RAID level 1 array before, then you are already familiar with how data can be written to two locations at the same time. Later, if a drive failed, you would be protected because the data is still available on the remaining good drive.

DRBD works the same way, just over the network.

So imagine this traditional software mdadm array;

- /dev/sda5 + /dev/sdb5 -> /dev/md0

In this case, once the md0 device is created, that is what you use from then on. The mdadm software works behind the scenes to copy the data to both locations, deal with failed drives and rebuild onto a replacement drive.

In the DRBD world, it works like this:

- node1:/dev/sda5 + node2:/dev/sda5 -> /dev/drbd0

Both nodes would be able to see the new /dev/drbd0 device, and writes to either node will be synchronously replicated to the peer node.

In our world, if we had a server running on a node's 'subnode 1', then each write it makes will be committed to the backing device on both subnodes before the write is considered complete. This way, if 'subnode 1' were destroyed, the server would boot up on 'subnode 2' right where it left off. File systems would replay their journals, databases would replay their write-ahead logs, etc. The recovery would be the same as a traditional bare iron server having lost power and then turned back on.
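To make the write path concrete, here is a toy model in Python. It is only a sketch under loud assumptions: `BackingDevice` and `ReplicatedDevice` are hypothetical names, the "network" is a plain method call, and none of this is how DRBD is actually implemented. It only shows the rule that a write is not reported complete until both copies have it.

```python
class BackingDevice:
    """Toy stand-in for a local partition such as /dev/sda5 (hypothetical model)."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def commit(self, block, data):
        self.blocks[block] = data
        return True


class ReplicatedDevice:
    """Toy stand-in for /dev/drbd0: a write completes only once both copies have it."""

    def __init__(self, local, peer):
        self.local = local
        self.peer = peer

    def write(self, block, data):
        # Synchronous replication: both commits must succeed before the
        # caller is told that the write is complete.
        local_ok = self.local.commit(block, data)
        peer_ok = self.peer.commit(block, data)
        return local_ok and peer_ok


subnode1 = BackingDevice("subnode1:/dev/sda5")
subnode2 = BackingDevice("subnode2:/dev/sda5")
drbd0 = ReplicatedDevice(subnode1, subnode2)

drbd0.write(0, b"journal entry")

# If subnode 1 is destroyed now, subnode 2 already holds every completed write.
print(subnode2.blocks[0])
```

Because the peer's copy is never behind a completed write, recovery on the surviving subnode looks like a normal power-loss recovery.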

The Anvil! Dissected

To understand how the Anvil! achieves full-stack redundancy, we need to look at a few parts.

Physical Components

The Anvil! is based on three main component groups;

- Foundation Pack

- Node Pairs

- Striker Dashboards

Briefly;

- A foundation pack consists of the foundation equipment; Two UPSes, two switched PDUs and two ethernet switches.

- Node Pairs are where the hosted servers live. Servers can migrate back and forth between nodes without interruption. Servers can be cold-migrated between pairs.

- Striker Dashboards host the web interface and store the ScanCore database data.

Now let's look at these parts in more detail.

Foundation Pack

Let's look closer at the foundation on top of which the Anvil! node pairs and Striker dashboards will live.

Networked UPSes

Each UPS is connected to a dedicated mains circuit that provides enough power (voltage and amperage) to run the entire Anvil!.

When a building is serviced by two power companies, a circuit from one provider powers the first UPS and a circuit from the other provider powers the second UPS. When only one provider is available, two separate circuits are still required. When possible, take the two circuits from different panels.

The goal is to minimize the chance that both UPSes will lose power at the same time.

| Warning: It is critical that the power feeding the UPS, and the UPS itself, is of sufficient capacity that it can provide the required minimum hold-up time when the peer UPS has failed! |

The network connection is used by ScanCore scan agents to monitor the quality of the input power, the health of the UPSes, the estimated hold-up time and the charge rate. We will cover this in more detail later.

Switched PDUs

The switched PDUs each plug into their respective UPSes. These are, at their heart, network-connected power strips that can turn each outlet on and off, independent of one another.

This provides a critical safety capability as a backup fence device. We will go into more detail on this later.

All other devices with redundant power supplies will plug one supply into the first PDU and the backup supply into the second PDU. When devices without redundant power supplies are used, such as most Striker dashboard machines, the first device will draw power from the first PDU and the second, backup device will draw power from the second PDU.

Given that each PDU connects to a different UPS, and each UPS comes from a different circuit, power feeding the Anvil! is made as redundant as possible.
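The claim above can be checked mechanically. The sketch below is a hypothetical wiring map, not a real Anvil! tool: the device names are borrowed from this document, while `circuit-a` and `circuit-b` are made-up labels for the two mains circuits. It walks each power supply's path from PDU to circuit and reports any component whose loss would leave a device with no power.

```python
# Hypothetical wiring map: PDU -> UPS -> mains circuit.
FEEDS = {
    "an-pdu01": "an-ups01",
    "an-pdu02": "an-ups02",
    "an-ups01": "circuit-a",
    "an-ups02": "circuit-b",
}

# Each device lists the PDU that each of its power supplies is plugged into.
DEVICES = {
    "an-a01n01": ["an-pdu01", "an-pdu02"],
    "an-a01n02": ["an-pdu01", "an-pdu02"],
}


def power_path(pdu):
    """Return every component a power supply depends on, from PDU to circuit."""
    path = [pdu]
    while path[-1] in FEEDS:
        path.append(FEEDS[path[-1]])
    return path


def survives(device, failed):
    """True if at least one power supply has a path that avoids `failed`."""
    return any(failed not in power_path(pdu) for pdu in DEVICES[device])


def single_points_of_failure():
    """List (component, device) pairs where losing the component kills the device."""
    components = set(FEEDS) | set(FEEDS.values())
    return sorted(
        (component, device)
        for component in components
        for device in DEVICES
        if not survives(device, component)
    )


print(single_points_of_failure())
```

With both supplies of every device on different PDUs, the list comes back empty. Plug both supplies of one device into the same PDU in the map and that PDU, its UPS and its circuit all show up as single points of failure.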

Ethernet Switches

The Anvil! uses three separate, VLAN-isolated networks. Each network is designed to provide isolation from the others, for both security and performance stability reasons.

They are:

- Back-Channel Network - Used for all internal Anvil! communication.

- Storage Network - Used for synchronous storage replication.

- Internet-Facing Network - Linkage between hosted servers and the outside network.

The Anvil! node pairs use all three networks. Connections to each network are over two active-passive bonded network interfaces. The two links in each bond must be on two different PCIe network controllers (we'll explore this more later).

[Diagram: network bonding on an-a01n01. The node has two PCIe network cards. PCIe 1 carries bcn1_link1, ifn1_link1, sn1_link1 and mn1_link1; PCIe 2 carries bcn1_link2, ifn1_link2, sn1_link2 and mn1_link2. Each link1/link2 pair forms one bond: bcn1_bond1 and ifn1_bond1 connect to the switches, sn1_bond1 connects to a switch or directly to the peer, and mn1_bond1 connects directly to the peer.]

With this configuration, the networks on the node pairs are made fully redundant. Let's look at a few common failure scenarios;

- If the primary switch or the first PCIe controller is lost, all bonded interfaces will fail over to the backup links.

- If the backup switch or the second PCIe controller is lost, the bonds will degrade but no cut-over will be required.
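The two scenarios above can be sketched with a toy active-passive bond. This is an illustration only: the `Bond` class is hypothetical and is not the Linux bonding driver, though the link names follow the naming used in this document.

```python
class Bond:
    """Toy active-passive bond: the first healthy link in priority order carries traffic."""

    def __init__(self, name, links):
        self.name = name
        self.links = list(links)  # priority order, primary link first
        self.up = {link: True for link in self.links}

    def fail(self, link):
        self.up[link] = False

    def active_link(self):
        for link in self.links:
            if self.up[link]:
                return link
        return None  # every link is down, so the bond is down

    def degraded(self):
        return not all(self.up.values())


# Scenario 1: the primary switch (or first PCIe controller) is lost.
bond_a = Bond("bcn1_bond1", ["bcn1_link1", "bcn1_link2"])
bond_a.fail("bcn1_link1")
print(bond_a.active_link(), bond_a.degraded())

# Scenario 2: the backup switch (or second PCIe controller) is lost.
bond_b = Bond("bcn1_bond1", ["bcn1_link1", "bcn1_link2"])
bond_b.fail("bcn1_link2")
print(bond_b.active_link(), bond_b.degraded())
```

In the first case traffic moves to the backup link. In the second, the active link never changes and the bond is simply marked as degraded.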

As we will see later, a node sometimes needs to be ejected from the Anvil! system. The IPMI interfaces are connected to the primary switch and the switched PDUs are connected to the backup switch. These two devices provide alternate mechanisms for ejecting a failed node, so the loss of either switch will not remove the ability to eject a failed node.

Even the Anvil! platform's safety systems have backups!

Anvil! Nodes

An Anvil! node consists of two physical subnodes, each capable on its own of running all hosted servers fast enough to meet operational performance requirements.

The nodes themselves can vary in capacity and performance a great deal from one pair to the next. Despite this, there are architectural requirements that must always be met. They are:

- CPUs that support hardware virtualization (Intel VT-x or AMD-V). Almost all modern CPUs provide this, though it is often disabled in the BIOS by default.

- Sufficient RAM for all hosted servers, plus at least 4 GiB for the host.

- Six network interfaces.

- IPMI (out-of-band) support, known as 'iRMC' on Fujitsu/Siemens, 'RSA' on IBM/Lenovo, 'DRAC' on Dell, 'iLO' on HP, and so on.

- Redundant power supplies.

This configuration is the minimum configuration needed to provide no single point of failure. Additional features, like RAID for storage, mirrored RAM, 3x dual-port network cards and similar are highly recommended as they increase the fault tolerance of each node.
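The RAM requirement is simple arithmetic, and worth checking before you provision a new server. A minimal sketch, assuming a hypothetical helper name and example server sizes; the only figure taken from this document is the 4 GiB host reserve.

```python
HOST_RESERVE_GIB = 4  # minimum RAM kept back for the host, per the list above


def ram_is_sufficient(node_ram_gib, server_ram_gib):
    """True if one node can hold every hosted server plus the host reserve."""
    return node_ram_gib >= sum(server_ram_gib) + HOST_RESERVE_GIB


# Example sizes are made up for illustration.
servers = [16, 16, 8, 8]                    # 48 GiB of hosted servers
print(ram_is_sufficient(64, servers))       # 48 + 4 = 52 GiB needed
print(ram_is_sufficient(64, servers + [16]))  # 64 + 4 = 68 GiB needed
```

The check is done against a single node on purpose. After a failure, one node has to carry every server by itself.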

Wait, Why Pairs?

Buckle up, we're going on a tangent.

A frequently asked question is "why does the Anvil! only support pairs of nodes? How do you scale?".

The answer is, quite simply, "simplicity".

In availability, there is nothing more important than continuous operation. Every single proposed feature must be looked at through this lens.

A 2-node setup, much like RAID level 1, trades efficiency for simplicity. In a two-node configuration, you are able to gain access to all the protection you require to protect the hosted servers.

Adding a third or fourth node would increase complexity in exchange for better resource utilization. The idea, and it is a sensible one, is that one spare node could protect two or more active server hosts. This is true, but then you need to introduce external, fully redundant storage which will be accessed over less reliable network or fiber-channel links. You also have much more complex cluster resource management configurations. You also increase the network traffic needed to keep all the nodes in sync, and so on.

Availability is not an efficiency game. Cloud technologies are.

But I Was Told That 2-Nodes Can't Be Highly Available?

Here's the longer answer;

This is a common myth in the availability industry, and it comes from people and vendors misunderstanding the role of "quorum" and "fencing" (also called "stonith").

In short; Fencing is mandatory, quorum is optional.

People who say that 2-node clusters can't work say so because they get this backwards.

To answer this, we must first explain fencing.

Problem; Split-Brains

In a fully redundant system like the Anvil!, it is theoretically possible that both nodes could partition. That is to say, they can each think that their peer is dead and thus try to operate alone. This condition is called a "split-brain" and it is one of the most dangerous conditions for an availability platform.

- At the very least, you have a very troublesome and drawn out recovery process.

- At worst, you corrupt your data.

Consider this scenario;

A fault in the network stack is such that inter-node communication is lost. This could be something as simple as an errant firewall rule, or it could be a weird fault in the switches. The cause itself doesn't matter. The result, though, is that both nodes lose contact with their peer, but are otherwise functional.

If an assumption was made that "Hey, I can't talk to my peer, so it must be dead", then this is what would happen.

- The node that was hosting the servers goes back to operating normally, as though nothing happened.

- The peer node, thinking the old host is dead, now boots all of the servers in an attempt to restore service.

How bad is this?

That depends, how lucky were you?

If the replicated storage split at the same time, then it means that each copy of the identical server is now operating independently and your data is diverging. Setting aside concerns of two identical servers claiming the same hostname, IP and MAC address, you now have a problem of deciding which copy's data is "more" better.

To recover, you have to tell one node to shut down its copy and then discard all of the changes that happened after the break. If you are super lucky, this might be an easy decision to make. In most cases though, you are going to have to lose data.

You could take one node's servers, back up the data to an external source, discard the changes and then manually restore just the data you need to merge back. How hard this is depends on your application and data structure. It certainly won't be a fast recovery.

You can't automate this. Do you discard the side with the smallest changes? Perhaps the side with the oldest changes? What if some users connected to one server and recorded financial information, patient data or similarly small but important data, and later, other users who accessed the other node uploaded a DVD disc image? If you discard the oldest or smallest changes, you wipe the obviously more important data.

In the worst case scenario, you might still have storage replicating normally. The replicated storage subsystem looks at byte streams, not data structures. If data comes in from both nodes, as happens normally with cluster-aware filesystems, the replicated storage simply ensures that the changes from either node make it to the peer.

If you boot the same server on the second node, it will start to write data to the same blocks. Congratulations, you just corrupted your server's storage.

Q. So how do you prevent this?

A. Fencing.

Fencing - Ejecting Nodes For Great Success!

In the above horror scenario, the root of the problem was that the nodes were allowed to make assumptions. Sure, in a demo to a client this is safe because you actually kill a node. Great.

That's not how it goes in the real world, though.

When a node stops responding, the only thing we know is that we don't know what it is doing...

- Maybe it's running fine but we can't talk to it.

- Maybe it's hung and might recover later.

- Maybe it's a smouldering pile of iron and sadness.

Fencing is, simply, a tool for blocking the cluster until the peer can be put into a known state. The most common way of doing this is to turn the node off.

In the Anvil!, this is done by first connecting to the lost node's IPMI baseboard management controller. The BMC is basically an independent computer that lives on the host's mainboard. It has its own CPU, RAM, MAC and IP address and it can function whether its host is powered on or not. It can read the host's sensors and press its power and reset buttons.

We saw above that each node's IPMI interface is plugged into ethernet switch 1 on the BCN. The good node will log into the peer's IPMI and ask the BMC "hey, please power off your host". The BMC does this by pressing and holding the power switch until the host shuts down. Once off, the good node is informed that the peer is dead and recovery (if needed) can begin safely.

There's a flaw with this, however.

The BMC draws its power from the same source as the host itself. So in the case of a catastrophic failure, the BMC will die along with its host. In this case, the good node will try to talk to the BMC, but it will get no response.

So what then?

If this was the only fence device, then the good node would stay blocked. The logic is that, as bad as it is to hang the cluster, it is better than risking data loss.

Thankfully, we have a backup!

In the Anvil!, the good node would time-out waiting for a reply from the BMC and give up. It would then move on to the backup fence method; The switched PDUs. Note that the PDUs are plugged into the second switch.

Node 1 knows which outlets node 2 draws its power from. Likewise, Node 2 knows which outlets node 1 is plugged into. So if the lost peer can't be reached over IPMI, the good node logs into both PDUs and cuts the power to both power supplies feeding the peer.

Now, we can be sure that the peer is dead! Recovery will proceed, safe in the knowledge that the peer is not running the servers.
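The ordering of fence methods can be sketched as below. This is a simulation under stated assumptions: `fence_peer`, `ipmi_power_off` and `pdu_power_off` are hypothetical names, and the two device functions only pretend to talk to hardware. Real fence agents are considerably more involved.

```python
def fence_peer(methods):
    """Try each (name, method) in order; return the name of the one that confirmed power-off."""
    for name, method in methods:
        try:
            if method():
                return name
        except TimeoutError:
            pass  # no answer from this device, so move on to the next method
    # Nothing confirmed the peer is off. Hanging is safer than guessing.
    raise RuntimeError("no fence method succeeded; the cluster stays blocked")


def ipmi_power_off():
    # Simulates a BMC that lost power along with its host.
    raise TimeoutError("no reply from the peer's BMC")


def pdu_power_off():
    # Simulates cutting both outlets that feed the peer.
    return True


used = fence_peer([("ipmi", ipmi_power_off), ("pdu", pdu_power_off)])
print(used)
```

Note what happens when every method fails: the function raises instead of returning. That mirrors the rule above that a blocked cluster is better than a cluster that assumes its peer is dead.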

It's brutal, but it works.

Quorum - When All Is Well

The basic principle of quorum is that you have a "tie-breaker" node.

The idea is that a majority of nodes need to be communicating with each other in order to be allowed to host and run any cluster resources, like virtual servers.

The mistake people make is that they think that this tie-breaker vote can prevent a split-brain.

It can not.

The problem is that quorum only works when all nodes are behaving in a predictable manner.

In an ideal world, if a node lost access to its peers, it would shut down any hosted servers because it would be inquorate, having only a minority of votes. If it really did this, then yes, quorum would prevent a split-brain. Unfortunately, the real world of failures is too messy to ever make this assumption.

Consider this ideal 3-node, 3-vote scenario;

Your server is running on Node 1. Someone throws up an errant firewall rule and blocks communication with nodes 2 and 3. Node 1 says "well, I am by myself, so I have 1 vote. I'm inquorate, so I am going to kill my hosted servers". Meanwhile, nodes 2 and 3 declare node 1 lost, see that they have 2 votes and are therefore quorate. They assume that node 1 must be shutting down its servers, so they decide to go ahead and boot the servers on node 2.

Excellent. Wonderful. Too bad that doesn't always happen.

Consider this realistic 3-node, 3-vote scenario;

Node 1 is again hosting a server. That server is in the middle of a write to disk, and for some reason the host node hangs hard. Nodes 2 and 3 re-vote, declare they have quorum and recover the server by booting it on node 2.

Now here is where it all goes sideways;

At some point later, Node 1 recovers. It has no concept of time passing, so it has no reason in that moment to check if it is still quorate or not. The server completes its write and voila, you've corrupted your storage. Sure, node 1 will realize it has lost quorum and try to shut down, but by then the damage has been done.

Quorum can be useful, but it is not required. It is certainly NOT a substitute for fencing.
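The vote counting itself is trivial, which is part of why it is tempting to lean on it. A minimal sketch of the majority rule, assuming one vote per node and a hypothetical function name:

```python
def is_quorate(votes_seen, total_votes):
    """A partition is quorate when it holds a strict majority of all votes."""
    return votes_seen > total_votes // 2


# The 3-node, 3-vote scenarios above.
print(is_quorate(1, 3))  # node 1, cut off and alone
print(is_quorate(2, 3))  # nodes 2 and 3 together

# A 2-node, 2-vote cluster: a lone node can never hold a majority.
print(is_quorate(1, 2))
```

The arithmetic says nothing about what a hung node will do when it wakes up, which is exactly the gap the realistic scenario exposes. It also shows why a 2-node cluster cannot rely on a majority vote and must rely on fencing instead.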

Can Both Nodes Fence Each Other?

Short answer; Yes.

Long answer; Almost never.

Consider again the scenario where both nodes in a 2-node cluster are alive, but communication is severed. Both nodes will try to shoot the other.

This is often portrayed as a "wild-west" style shoot-out. The fastest node to pull the trigger lives and the slower node dies.

This is, basically, what happens in real life. However, we can bias fencing to ensure that one node will live in a case like this.

In the Anvil!, the node hosting the servers is given a 15 second head start in fencing the peer. In a case where both nodes are alive, the node without servers is told to wait 15 seconds, while the node with the servers pulls the trigger immediately. So in this case, the node with the servers will always win, avoiding the need to recover the servers at all.

If, however, node 1 had truly failed, then node 2 would exit the wait and shoot node 1. Once node 1 was shot, node 2 would boot the servers, no different than if a bare-iron server lost power and was restarted.
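Here is the delay bias as a small simulation. It is a sketch only: the function names and the dictionary layout are hypothetical, and the only value taken from this document is the 15 second delay. It does not model the case where neither node hosts servers.

```python
FENCE_DELAY_SECONDS = 15  # head start given to the node hosting the servers


def fence_delay(hosting_servers):
    """Seconds a node waits before it is allowed to shoot its peer."""
    return 0 if hosting_servers else FENCE_DELAY_SECONDS


def shootout_winner(nodes):
    """Return the living node that gets to fire first.

    `nodes` maps a node name to {"alive": bool, "hosting": bool}.
    """
    shooters = {
        name: fence_delay(state["hosting"])
        for name, state in nodes.items()
        if state["alive"]
    }
    return min(shooters, key=shooters.get)


# Both nodes are alive but cannot talk; node 1 hosts the servers.
print(shootout_winner({
    "node1": {"alive": True, "hosting": True},
    "node2": {"alive": True, "hosting": False},
}))

# Node 1 has truly failed; node 2 waits out its delay, then fires.
print(shootout_winner({
    "node1": {"alive": False, "hosting": True},
    "node2": {"alive": True, "hosting": False},
}))
```

In the first case the node with the servers survives and nothing needs to be recovered. In the second, the only cost of the bias is a 15 second wait before recovery starts.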

Fencing vs. Quorum - In Summary

This provides, I hope, a complete answer to why the myth that you can't do availability on 2-node platforms is not just wrong, but backwards.

There is a saying in the availability world;

- "A cluster is not beautiful when there is nothing left to add. It is beautiful when there is nothing left to take away."

A 2-node platform is significantly simpler and totally safe, thanks to fencing.

Scaling The Anvil! - Compartmentalization

The Anvil! scales by adding node pairs.

Each pair is an autonomous unit that operates independently of the other pairs. The servers on one pair can live-migrate between the nodes without interruption. Servers can, when needed, be cold-migrated to a new node pair.

A single foundation pack can host two or three node pairs. Once a foundation pack is filled, a new foundation pack is deployed and then additional node pairs can be deployed. In this way, you can run as many pairs as you like.

Wait, isn't that really wasteful?

Yes, but recall that availability is the goal, not resource utilization efficiency.

The benefit of keeping the number of node pairs per foundation pack low is that it provides healthy compartmentalization. A multi-component disaster on one foundation pack will affect only its hosted node pairs. In a large deployment, this could mean that a subset of systems is lost, which is certainly a very bad thing, but the damage will be limited.

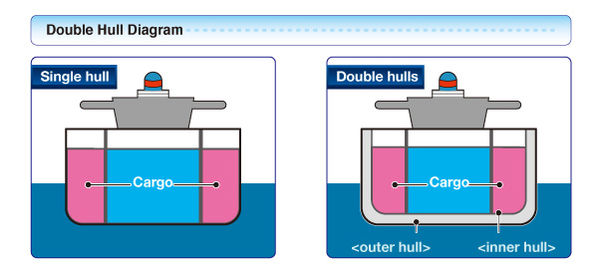

Think of it like floodable compartments on a ship (an imperfect analogy, but work with me on this).

In a normal double-hull ship, you can think of the outer and inner hulls as your traditional redundancy. If you hit an iceberg, you will tear the outer hull, but not the inner hull. Excellent.

What if your ship is a military vessel and is hit by a torpedo? Both hulls will be breached and water will start to ingress, and now you need to focus on damage control. Whatever was on the other side of the hull is lost, but it doesn't mean your ship is going to sink. If your ship has water tight compartments, you can seal off the destroyed portion and limit the amount of water taken on. Your ship is crippled, but not sunk.

That is the idea behind compartmentalization in the Anvil! system.
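The same idea in numbers, as a rough sketch. The helper names are hypothetical, the seven pair names are made up in the `an-anvil-NN` style, and three pairs per pack is just one of the "two or three" figures given above.

```python
def assign_pairs(node_pairs, pairs_per_pack=3):
    """Group node pairs into foundation packs, filling each pack before starting the next."""
    return [
        node_pairs[start:start + pairs_per_pack]
        for start in range(0, len(node_pairs), pairs_per_pack)
    ]


def lost_if_pack_fails(packs, index):
    """Node pairs taken down by a disaster that destroys one foundation pack."""
    return packs[index]


pairs = [f"an-anvil-{number:02d}" for number in range(1, 8)]  # seven node pairs
packs = assign_pairs(pairs)

print(len(packs))                    # foundation packs needed
print(lost_if_pack_fails(packs, 0))  # the damage is limited to this compartment
```

Losing one pack costs you the pairs on that pack and nothing else. Put all seven pairs on one giant foundation and the same disaster takes everything.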

Striker Dashboards

Striker dashboard systems play three roles;

- They provide a web interface designed to be simple while covering 90% of day-to-day use of the Anvil! systems.

- They host the ScanCore database where all sensor data from all connected Anvil! node pairs is stored.

- They determine when it is safe to recover an Anvil! node or node pair after an emergency shut down or unexpected power loss.

A single pair of Striker dashboards can, theoretically, manage any number of Anvil! node pairs.

In practice, the number of functionally supported node pairs is limited by the dashboard's performance. The incoming ScanCore sensor and state data is auto-archived, but there is still a functional limit to what the dashboard hardware can support. The more powerful the dashboard hardware, the more Anvil! pairs it can manage.

Striker dashboards are rarely inherently redundant. Redundancy is achieved by there being two of them.

- The first dashboard draws its power from the first power rail and connects into the first ethernet switch.

- The second dashboard draws its power from the second power rail and connects into the second ethernet switch.

In this way, no matter what fails, one of the dashboards will be up and running, providing full redundancy.

The dashboards themselves do not have a concept of "primary" or "backup". Each one is fully independent, but they are able to merge in data that was collected by another dashboard while they were offline. The resynchronization can happen in both directions at the same time, and the synchronization is not limited to two dashboards. There is no hard limit on how many dashboards can work together and maintain data sync. Of course, the same limitations on load versus hardware capacity remain.
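One way to picture a merge with no primary is a "newest copy of each record wins" rule. To be clear, this is an assumption made for illustration: the record layout (a key mapped to a timestamp and a value) and the `resync` function are hypothetical and are not ScanCore's actual schema or algorithm.

```python
def resync(*dashboards):
    """Bring every dashboard up to date with the newest copy of each record.

    Each dashboard is a dict mapping a record key to (timestamp, value).
    """
    merged = {}
    for records in dashboards:
        for key, (stamp, value) in records.items():
            if key not in merged or stamp > merged[key][0]:
                merged[key] = (stamp, value)
    # Push the merged view back to everyone; no dashboard is "primary".
    for records in dashboards:
        records.update(merged)
    return merged


# Each dashboard collected something while the other was offline.
striker01 = {"temp:an-a01n01": (1, 31.0), "ups:an-ups01": (5, "on battery")}
striker02 = {"temp:an-a01n01": (1, 31.0), "temp:an-a01n02": (3, 29.5)}

resync(striker01, striker02)
print(striker01 == striker02)
```

Because the function takes any number of dashboards and treats them all the same way, the sketch also covers the "not limited to two" case.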

We will explore ScanCore later, as it is the intelligence in "Intelligent Availability" and requires a section of its own.

Rack Configuration

Below is a very typical Anvil! load-out. Note that this is a half-height rack and a single foundation pack hosting two node pairs. The Striker dashboards are typically Intel NUCs and sit on the shelf.

| U | NetShelter SX 24U | U |
| --- | --- | --- |
| 24 | -- | 24 |
| 23 | -- | 23 |
| 22 | -- | 22 |
| 21 | -- | 21 |
| 20 | Shelf | 20 |
| 19 | an-a01n01 | 19 |
| 18 | an-a01n01 (continued) | 18 |
| 17 | an-a01n02 | 17 |
| 16 | an-a01n02 (continued) | 16 |
| 15 | an-a02n01 | 15 |
| 14 | an-a02n01 (continued) | 14 |
| 13 | an-a02n02 | 13 |
| 12 | an-a02n02 (continued) | 12 |
| 11 | an-switch01 | 11 |
| 10 | an-switch02 | 10 |
| 9 | <network cables> | 9 |
| 8 | an-pdu01 | 8 |
| 7 | <power cables> | 7 |
| 6 | an-pdu02 | 6 |
| 5 | <power cables> | 5 |
| 4 | an-ups01 | 4 |
| 3 | an-ups01 (continued) | 3 |
| 2 | an-ups02 | 2 |
| 1 | an-ups02 (continued) | 1 |

Physical Configuration - Consistency

Recall how we said that nothing is perfect? We said this is a strength, and now we will explain why.

When you accept that everything can fail, you start to ask yourself, "If there is a failure, what can I do now to minimize the mean time to recover?".

One of the strongest tools you have is consistency.

If you make sure that every installation is as identical as possible, then you won't have to reference documentation to find out what ports were used, how devices were racked, etc. Building on this, you will want to label everything. You want to be able to walk up to any system and immediately confirm that a given cable is, in fact, the cable you expect it to be.

A few hours of work pre-deployment can significantly reduce your recovery time in a disaster. This can not be emphasized enough.

In the Anvil! system, this means that the ports used on the ethernet switches, switched PDUs and position of devices in the rack are always the same. You can walk up to an Anvil! that was installed years ago that you have never seen before and how it is racked and assembled will be familiar.

This extends into the system as well.

Network interface names, node names, resource names... It is all consistent install after install.
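Consistent names are easy to generate and easy to check in software. The sketch below infers its patterns from the names used in this document (`an-a01n01`, `bcn1_link1`, `bcn1_bond1`); treat the patterns and the helper names as assumptions, not as the Anvil!'s official naming rules.

```python
import re

# Assumed pattern: an-a<anvil number>n<node number>, both zero-padded to two digits.
NODE_PATTERN = re.compile(r"^an-a(\d{2})n(\d{2})$")


def node_name(anvil, node):
    """Build a node name such as an-a01n02 from its two numbers."""
    return f"an-a{anvil:02d}n{node:02d}"


def parse_node_name(name):
    """Return (anvil, node) for a well-formed name, or raise ValueError."""
    match = NODE_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unexpected node name: {name}")
    return int(match.group(1)), int(match.group(2))


def bond_layout(network, number=1):
    """Name a bond and its two links for a network prefix like 'bcn' or 'ifn'."""
    prefix = f"{network}{number}"
    return {f"{prefix}_bond1": [f"{prefix}_link1", f"{prefix}_link2"]}


print(node_name(2, 1))
print(parse_node_name("an-a01n02"))
print(bond_layout("bcn"))
```

When every install follows the same pattern, software can reject a name that doesn't fit instead of asking a tired human whether it looks right.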

A good Anvil! system is one that you will pretty much forget that you have. In the early days, we would provide training to clients so that they could learn the guts of the system. In some cases, we would be hired to walk the client's staff through the entire manual build process so that they could fully understand the system. These training sessions had value, but one thing became very clear; People forget.

The problem is that the time span between training and problem could be 6, 12, 18 months or more. No matter how smart the user, it was just too little exposure.

Providing consistency is one of the best tools you have to help mitigate this.

Beneficial Assumptions

One of the biggest benefits of consistency in design is that it allows you to make a very large number of assumptions in your software.

Humans make mistakes, and that is unavoidable.

Accepting this means that you will design the software to minimize the surface area where users can break things. If you ask the user to tell you if this given system has this or that setup, they will eventually get it wrong. By building on top of an extremely consistent system, you can remove most of the guess work and dramatically simplify your user interface.

Striker has taken advantage of this fact. It is far from the most powerful UI, but that's by design. Recall; Nothing is more important than availability.

The purpose of the Striker UI is not to help the user. It is to protect the system.

When you start looking at the user interface this way, your design decisions change fairly dramatically. You are not competing against the feature set of cloud platforms anymore.

A Logical Breakdown

Thus far, we've talked about the Anvil! at a fairly high level.

Let's break it down.

Logical Block Diagram

Below is a full block-diagram map of the Anvil! cluster, with two nodes. The first node also shows the Striker dashboards.

Consider what we covered above, and this should start to make sense.

-=] Strikers and an-anvil-01

\_ IFN 1 _/

Striker Dashboards \_ LAN _/

_______________________________ \___/ _______________________________

| an-striker01.alteeve.com | | | an-striker02.alteeve.com |

| __________________| | |___________________ |

| | ifn1_link1 =---------------------------------\ /-------------------------------------------------------------------------= ifn1_link1 | |

| | 10.255.4.1 || | | | || 10.255.4.2 | |

| |__________________|| | | | ||__________________| |

| ___________________| | | | |___________________ |

| | bcn1_link1 =---------------------------------|-|-----------------------------------\ /-----------------------------------= bcn1_link1 | |

| | 10.201.4.1 || | | | | | || 10.201.4.2 | |

| |__________________|| | | | | | ||__________________| |

| | | | | | | | |

| _______| | | | | | |_______ |

| | PSU 1 | ____________________ | | | | | ____________________ | PSU 1 | |

|_______________________|_______|==={_to_an-pdu01_port-8_} | | | | | {_to_an-pdu02_port-8_}===|_______|_______________________|

| | | | |

-=] an-anvil-01 (Node 1) | | | | |

____________________________________________________________________________ | | _____|____ | | ____________________________________________________________________________

| an-a01n01.alteeve.com | | | /---------{_intranet_}----------\ | | | an-a01n02.alteeve.com |

| Network: _________________| | | | | | | |_________________ Network: |

| _________________ | ifn1_bond1 | | | | _________________________ | | | | ifn1_bond1 | _________________ |

| Servers: | ifn1_bridge1 |----| <ip on bridge> | | | | | an-switch01 | | | | | <ip on bridge> |----| ifn1_bridge1 | Servers: |

| __________________________ | 10.255.10.1 | | _____________| | | | |____ Internet-Facing ____| | | | |____________ | | 10.255.10.2 | ............................ |

| | [ srv01-sql ] | |_________________| | | ifn1_link1 =---------=_01_] Network [_02_=---------= ifn_link1 | | |_________________| : [ srv01-sql ] : |

| | __________________| | | | | | | |____________|| \------=_09_]_______________[_24_=--/ | | ||___________| | : : : : : :__________________ : |

| | | NIC 1 =----/ | | | | | | | | | an-switch02 | | | | | : : : : -----= NIC 1 | : |

| | | 10.255.1.1 || | | | | | _____________| | | |____ ____| | | |____________ | : : : : :| 10.255.1.1 | : |

| | |_________________|| | | | | | | ifn1_link2 =---------=_01_] VLAN ID 300 [_02_=---------= ifn_link2 | | : : : : :|_________________| : |

| | | | | | | | |____________|| \-|--=_09_]_______________[_24_=--\ | | ||___________| | : : : : : : |

| | ____ | | | | | |_________________| | | | | |_________________| : : : : : ____ : |

| /--=--[_c:_] | | | | | | \-------------------------------/ | | | : : : : : [_c:_]--=--\ |

| | |__________________________| | | | | _________________| | | |_________________ : : : : :..........................: | |

| | | | | | | sn1_bond1 | | | | sn1_bond1 | : : : : | |

| | __________________________ | | | | | 10.101.10.1 | | | | 10.101.10.2 | : : : : ............................ | |

| | | [ srv02-app1 ] | | | | | | ____________| | | |____________ | : : : : : [ srv02-app1 ] : | |

| | | __________________| | | | | | | sn1_link1 =---------------------------------------------= sn1_link1 | | : : : : :__________________ : | |

| | | | NIC 1 =-----/ | | | | |___________|| | | ||___________| | : : : ------= NIC 1 | : | |

| | | | 10.255.1.2 || | | | | | | | | | : : : :| 10.255.1.2 | : | |

| | | |_________________|| | | | | ____________| | | |____________ | : : : :|_________________| : | |

| | | | | | | | | sn1_link2 =---------------------------------------------= sn1_link2 | | : : : : : | |

| | | ____ | | | | /---------| |___________|| | | ||___________| |---------\ : : : : ____ : | |

| +---=--[_c:_] | | | | | |_________________| | | |_________________| | : : : : [_c:_]--=---+ |

| | |__________________________| | | | | | | | | | : : : :..........................: | |

| | | | | | _________________| | | |_________________ | : : : | |

| | __________________________ | | | | | mn1_bond1 | | | | mn1_bond1 | | : : : ............................ | |

| | | [ srv03-app2 ] | | | | | | 10.199.10.1 | | | | 10.199.10.2 | | : : : : [ srv03-app2 ] : | |

| | | __________________| | | | | | ____________| | | |____________ | | : : : :__________________ : | |

| | | | NIC 1 =-------/ | | | | | mn1_link1 =---------------------------------------------= mn1_link1 | | | : : --------= NIC 1 | : | |

| | | | 10.255.1.3 || | | | | |___________|| | | ||___________| | | : : :| 10.255.1.3 | : | |

| | | |_________________|| | | | | | | | | | | : : :|_________________| : | |

| | | | | | | | ____________| | | |____________ | | : : : : | |

| | | ____ | | | | | | mn1_link2 =---------------------------------------------= mn1_link2 | | | : : : ____ : | |

| +--=--[_c:_] | | | | | |___________|| | | ||___________| | | : : : [_c:_]--=---+ |

| | |__________________________| | | | |_________________| | | |_________________| | : : :..........................: | |

| | | | | | | | | | : : | |

| | __________________________ | | | _________________| | | |_________________ | : : ............................ | |

| | | [ srv04-app3 ] | | | | | bcn1_bond1 | _________________________ | | | bcn1_bond1 | | : : : [ srv04-app3 ] : | |

| | | __________________| | | | | <ip on bridge> | | an-switch01 | | | | <ip on bridge> | | : : :__________________ : | |

| | | | NIC 1 =---------/ | | | _____________| |____ Back-Channel ____| | | |_____________ | | : ----------= NIC 1 | : | |

| | | | 10.255.1.4 || | | | | bcn1_link1 =---------=_13_] Network [_14_=---------= bcn1_link1 | | | : :| 10.255.1.4 | : | |

| | | |_________________|| | | | |____________|| |____________________[_19_=----/ | ||____________| | | : :|_________________| : | |

| | | | | | | | | an-switch02 | | | | | : : : | |

| | | ____ | | | | _____________| |____ ____| | |_____ _______ | | : : ____ : | |

| +--=--[_c:_] | | | | | bcn1_link2 =---------=_13_] VLAN ID 100 [_14_=---------= bcn1_link2 | | | : : [_c:_]--=---+ |

| | |__________________________| | | | |____________|| |____________________[_19_=------/ ||____________| | | : :..........................: | |

| | | | |_________________| |_________________| | : | |

| | __________________________ | | | | | | | : ............................ | |

| | | [ srv05-admin ] | | | _______|_________ | | _________|_______ | : : [ srv05-admin ] : | |

| | | __________________| | | | bcn1_bridge1 | | | | bcn1_bridge1 | | : :__________________ : | |

| | | | NIC 1 =-----------/ | | 10.201.10.1 | | | | 10.201.10.2 | | ------------= NIC 1 | : | |

| | | | 10.255.1.250 || | |_________________| | | |_________________| | :| 10.255.1.250 | : | |

| | | |_________________|| | | | | | | :|_________________| : | |

| | | | | | | | | | : : | |

| | | __________________| | | | | | | :__________________ : | |

| | | | NIC 2 =----------------------/ | | \----------------------= NIC 2 | : | |

| | | | 10.201.1.250 || | | | | :| 10.201.1.250 | : | |

| | | |_________________|| | | | | :|_________________| : | |

| | | _____ | | | | | : _____ : | |

| +---=--[_vda_] | | | | | : [_vda_]--=---+ |

| | |__________________________| | | | | :..........................: | |

| | | | | | | |

| | | | | | | |

| | | | | | | |

| | | | | | | |

| | Storage: | | | | Storage: | |

| | __________ | | | | __________ | |

| | [_/dev/sda_] | | | | [_/dev/sda_] | |

| | | ___________ ___________ | | | | ___________ ___________ | | |

| | +--[_/dev/sda1_]-------[_/boot/efi_] | | | | [_/boot/efi_]-------[_/dev/sda1_]--+ | |

| | | ___________ _______ | | | | _______ ___________ | | |

| | +--[_/dev/sda2_]-------[_/boot_] | | | | [_/boot_]-------[_/dev/sda2_]--+ | |

| | | ___________ ___________ | | | | ___________ ___________ | | |

| | +--[_/dev/sda3_]-------[_<lvm:_pv>_] | | | | [_<lvm:_pv>_]-------[_/dev/sda3_]--+ | |

| | ___________________| \------------------\ | | /------------------/ |___________________ | |

| | _|___________________ | | | | ___________________|_ | |

| | [_<lvm:_vg_an-a01n01>_] | | | | [_<lvm:_vg_an-a01n01>_] | |

| | _|___________________ ________ | | | | ________ ___________________|_ | |

| | [_/dev/an-a01n01/swap_]--[_<swap>_] | | | | [_<swap>_]--[_/dev/an-a01n02/swap_] | |

| | _|___________________ ___ | | | | ___ ___________________|_ | |

| | [_/dev/an-a01n01/root_]--[_/_] | | | | [_/_]--[_/dev/an-a01n02/root_] | |

| | |___________________________ | | | | ___________________________|_ | |

| | [_/dev/an-a01n01/srv01-psql_0_] | | | | [_/dev/an-a01n01/srv01-psql_0_] | |

| | | | _______________________________ | | | | _______________________________ | | | |

| +-----------|--+--[_/dev/drbd/by-res/srv01-psql/0_]--------------+ | | +--------------[_/dev/drbd/by-res/srv01-psql/0_]--+--|-----------+ |

| | _|_______________________________ | | | | _______________________________|_ | |

| | [_/dev/an-a01n01_vg0/srv02-app1_0_] | | | | [_/dev/an-a01n01_vg0/srv02-app1_0_] | |

| | | | _______________________________ | | | | _______________________________ | | | |

| +-----------|--+--[_/dev/drbd/by-res/srv02-app1/0_]--------------+ | | +--------------[_/dev/drbd/by-res/srv02-app1/0_]--+--|-----------+ |

| | _|_____________________________ | | | | _______________________________|_ | |

| | [_/dev/an-a01n01_vg0/srv03-app2_] | | | | [_/dev/an-a01n01_vg0/srv03-app2_0_] | |

| | | | _______________________________ | | | | _______________________________ | | | |

| +-----------|--+--[_/dev/drbd/by-res/srv03-app2/0_]--------------+ | | +--------------[_/dev/drbd/by-res/srv03-app2/0_]--+--|-----------+ |

| | _|_____________________________ | | | | _______________________________|_ | |

| | [_/dev/an-a01n01_vg0/srv04-app3_] | | | | [_/dev/an-a01n01_vg0/srv04-app3_0_] | |

| | | | _______________________________ | | | | _______________________________ | | | |

| +-----------|--+--[_/dev/drbd/by-res/srv04-app3/0_]--------------+ | | +--------------[_/dev/drbd/by-res/srv04-app3/0_]--+--|-----------+ |

| | _|________________________________ | | | | ________________________________|_ | |

| | [_/dev/an-a01n01_vg0/srv05-admin_0_] | | | | [_/dev/an-a01n01_vg0/srv05-admin_0_] | |

| | | ________________________________ | | | | ________________________________ | | |

| \--------------+--[_/dev/drbd/by-res/srv05-admin/0_]-------------/ | | \-------------[_/dev/drbd/by-res/srv05-admin/0_]--+--------------/ |

| | _________________________ | |

| | | an-switch01 | | |

| __________________| |___ BCN ____| |__________________ |

| | IPMI =----------=_03_] VID 100 [_04_=---------= IPMI | |

| _________ _____ | 10.201.11.1 || |_________________________| || 10.201.11.2 | _____ _________ |

| {_sensors_}--[_BMC_]--|_________________|| | an-switch02 | ||_________________|--[_BMC_]--{_sensors_} |

| | | BCN | | |

| ______ ______ | | VID 100 | | ______ ______ |

| | PSU1 | PSU2 | | |____ ____ ____ ____| | | PSU1 | PSU2 | |

|____________________________________________________________|______|______|_| |_03_]_[_07_]_[_08_]_[_04_| |_|______|______|____________________________________________________________|

|| || | | | | || ||

/---------------------------||-||------------------|------/ \-------|------------------||-||---------------------------\

| || || | | || || |

_______________|___ || || __________|________ ________|__________ || || ___|_______________

_______ | an-ups01 | || || | an-pdu01 | | an-pdu02 | || || | an-ups02 | _______

{_Mains_}==| 10.201.3.1 |=======================||=||=======| 10.201.2.1 | | 10.201.2.2 |=======||=||=======================| 10.201.3.2 |=={_Mains_}

|___________________| || || |___________________| |___________________| || || |___________________|

|| || || || || || || ||

|| \\========[ Port 1 ]====// || || \\====[ Port 2 ]========// ||

\\===========[ Port 1 ]=======||=====// ||

\\==============[ Port 2 ]===========//

===========================================================================================================================================================================================================

-=] an-anvil-02 (Node 2)

____________________________________________________________________________ ____________________________________________________________________________

| an-a02n01.alteeve.com | | an-a02n02.alteeve.com |

| Network: _________________| |_________________ Network: |

| _________________ | ifn1_bond1 | _________________________ | ifn_bond1 | _________________ |

| Servers: | ifn_bridge1 |----| <ip on bridge> | | an-switch01 | | <ip on bridge> |----| ifn_bridge1 | Servers: |

| __________________________ | 10.255.12.1 | | _____________| |____ Internet-Facing ____| |____________ | | 10.255.12.2 | ............................ |

| | [ srv06-sql-qa ] | |_________________| | | ifn1_link1 =---------=_01_] Network [_02_=---------= ifn_link1 | | |_________________| : [ srv06-sql-qa ] : |

| | __________________| | | | |____________|| |_________________________| ||___________| | : : :__________________ : |

| | | NIC 1 =----/ | | | | an-switch02 | | | : -----= NIC 1 | : |

| | | 10.255.1.6 || | | _____________| |____ ____| |____________ | : :| 10.255.1.6 | : |

| | |_________________|| | | | ifn1_link2 =---------=_01_] VLAN ID 300 [_02_=---------= ifn_link2 | | : :|_________________| : |

| | | | | |____________|| |_________________________| ||___________| | : : : |

| | ____ | | |_________________| |_________________| : : ____ : |

| /--=--[_c:_] | | | | : : [_c:_]--=--\ |

| | |__________________________| | _________________| |_________________ : :..........................: | |

| | | | sn1_bond1 | | sn1_bond1 | : | |

| | __________________________ | | 10.101.12.1 | | 10.101.12.2 | : ............................ | |

| | | [ srv07-app-qa ] | | | ____________| |____________ | : : [ srv07-app-qa ] : | |

| | | __________________| | | | sn1_link1 =---------------------------------------------= sn1_link1 | | : :__________________ : | |

| | | | NIC 1 =-----/ | |___________|| ||___________| | ------= NIC 1 | : | |

| | | | 10.255.1.7 || | | | | :| 10.255.1.7 | : | |

| | | |_________________|| | ____________| |____________ | :|_________________| : | |

| | | | | | sn1_link2 =---------------------------------------------= sn1_link2 | | : : | |

| | | ____ | /---------| |___________|| ||___________| |---------\ : ____ : | |

| +---=--[_c:_] | | |_________________| |_________________| | : [_c:_]--=---+ |

| | |__________________________| | | | | :..........................: | |

| | | _________________| |_________________ | | |

| | | | mn1_bond1 | | mn1_bond1 | | | |

| | | | 10.199.12.1 | | 10.199.12.2 | | | |

| | | | ____________| |____________ | | | |

| | | | | mn1_link1 =---------------------------------------------= mn1_link1 | | | | |

| | | | |___________|| ||___________| | | | |

| | | | | | | | | |

| | | | ____________| |____________ | | | |

| | | | | mn1_link2 =---------------------------------------------= mn1_link2 | | | | |

| | | | |___________|| ||___________| | | | |

| | | |_________________| |_________________| | | |

| | | | | | | |

| | | _________________| |_________________ | | |

| | | | bcn1_bond1 | _________________________ | bcn1_bond1 | | | |

| | | | 10.201.12.1 | | an-switch01 | | 10.201.12.2 | | | |

| | | | _____________| |____ Back-Channel ____| |_____________ | | | |

| | | | | bcn1_link1 =---------=_13_] Network [_14_=---------= bcn1_link1 | | | | |

| | | | |____________|| |_________________________| ||____________| | | | |

| | | | | | an-switch02 | | | | | |

| | | | _____________| |____ ____| |_____________ | | | |

| | | | | bcn1_link2 =---------=_13_] VLAN ID 100 [_14_=---------= bcn1_link2 | | | | |

| | | | |____________|| |_________________________| ||____________| | | | |

| | | |_________________| |_________________| | | |

| | | | | | | | | |

| | | _______|_________ | | _________|_______ | | |

| | | | bcn1_bridge1 | | | | bcn1_bridge1 | | | |

| | | | 10.201.10.1 | | | | 10.201.10.2 | | | |

| | | |_________________| | | |_________________| | | |

| | | | | | | |

| | | | | | | |

| | Storage: | | | | Storage: | |

| | __________ | | | | __________ | |

| | [_/dev/sda_] | | | | [_/dev/sda_] | |

| | | ___________ ___________ | | | | ___________ ___________ | | |

| | +--[_/dev/sda1_]-------[_/boot/efi_] | | | | [_/boot/efi_]-------[_/dev/sda1_]--+ | |

| | | ___________ _______ | | | | _______ ___________ | | |

| | +--[_/dev/sda2_]-------[_/boot_] | | | | [_/boot_]-------[_/dev/sda2_]--+ | |

| | | ___________ ___________ | | | | ___________ ___________ | | |

| | +--[_/dev/sda3_]-------[_<lvm:_pv>_] | | | | [_<lvm:_pv>_]-------[_/dev/sda3_]--+ | |

| | ___________________| \------------------\ | | /------------------/ |___________________ | |

| | _|___________________ | | | | ___________________|_ | |

| | [_<lvm:_vg_an-a02n01>_] | | | | [_<lvm:_vg_an-a02n01>_] | |

| | _|___________________ ________ | | | | ________ ___________________|_ | |

| | [_/dev/an-a02n01/swap_]--[_<swap>_] | | | | [_<swap>_]--[_/dev/an-a02n02/swap_] | |

| | _|___________________ ___ | | | | ___ ___________________|_ | |

| | [_/dev/an-a02n01/root_]--[_/_] | | | | [_/_]--[_/dev/an-a02n02/root_] | |

| | |_____________________________ | | | | _____________________________|_ | |

| | [_/dev/an-a02n01/srv06-sql-qa_0_] | | | | [_/dev/an-a02n01/srv06-sql-qa_0_] | |

| | | | _________________________________ | | | | _________________________________ | | | |

| +-----------|--+--[_/dev/drbd/by-res/srv06-sql-qa/0_]------------+ | | +------------[_/dev/drbd/by-res/srv06-sql-qa/0_]--+--|-----------+ |

| | _|_________________________________ | | | | _________________________________|_ | |

| | [_/dev/an-a02n01_vg0/srv07-app-qa_0_] | | | | [_/dev/an-a02n01_vg0/srv07-app-qa_0_] | |

| | | _________________________________ | | | | _________________________________ | | |

| \--------------+--[_/dev/drbd/by-res/srv07-app-qa/0_]------------/ | | \------------[_/dev/drbd/by-res/srv07-app-qa/0_]--+--------------/ |

| | _________________________ | |

| | | an-switch01 | | |

| __________________| |___ BCN ____| |__________________ |

| | IPMI =----------=_03_] VID 100 [_04_=---------= IPMI | |

| _________ _____ | 10.201.13.1 || |_________________________| || 10.201.13.2 | _____ _________ |

| {_sensors_}--[_BMC_]--|_________________|| | an-switch02 | ||_________________|--[_BMC_]--{_sensors_} |

| | | BCN | | |

| ______ ______ | | VID 100 | | ______ ______ |

| | PSU1 | PSU2 | | |____ ____ ____ ____| | | PSU1 | PSU2 | |

|____________________________________________________________|______|______|_| |_03_]_[_07_]_[_08_]_[_04_| |_|______|______|____________________________________________________________|

|| || | | | | || ||

/---------------------------||-||------------------|------/ \-------|------------------||-||---------------------------\

| || || | | || || |

_______________|___ || || __________|________ ________|__________ || || ___|_______________

_______ | an-ups01 | || || | an-pdu01 | | an-pdu02 | || || | an-ups02 | _______

{_Mains_}==| 10.201.3.1 |=======================||=||=======| 10.201.2.1 | | 10.201.2.2 |=======||=||=======================| 10.201.3.2 |=={_Mains_}

|___________________| || || |___________________| |___________________| || || |___________________|

|| || || || || || || ||

|| \\========[ Port 3 ]====// || || \\====[ Port 4 ]========// ||

\\===========[ Port 3 ]=======||=====// ||

\\==============[ Port 4 ]===========//

|

In the diagram, we see the two dashboards, two nodes, the hosted servers within the subnodes and the foundation pack equipment. A server drawn with dotted lines is ready to run on that subnode, but was not running there at the time the diagram was made.

The dashboards and the foundation pack equipment have been discussed already, as has the networking. Below is the switch port assignment for a very typical foundation pack hosting two node pairs. Above, the two switches are split into four groups, just to try and make the diagram marginally sane. In truth, there are just the two switches. You can correlate the ports in the diagram above to the map below.

Ethernet Switch Port Assignment

The ethernet switch port assignment plays a central role in the consistency and security of the Anvil! platform. The port assignment below is the same across all installed Anvil! systems. The make, model and performance may change dramatically from install to install, but not the layout.

| Note: Here we show the Storage Network using the switch. If you plan to run the SN back to back between the subnodes, the SN VLAN can be removed. The Migration Network is never run through a switch, and is always back to back. |

an-switch01

| Stack | Back-Channel Network | Storage Network | Internet-Facing Network |
|---|---|---|---|
| Unused | VID 100 | VID 200 | VID 300 |

| Port | Connected To |
|---|---|
| X1 (1/2/1) | To an-switch02 X3 |
| X2 (1/2/2) | -- |
| X3 (1/2/3) | To an-switch02 X1 |
| X4 (1/2/4) | -- |
| 1 (1/1/1) | an-a01n01 BCN - Link 1 |
| 2 (1/1/2) | an-a01n02 BCN - Link 1 |
| 3 (1/1/3) | an-a01n01 IPMI / Fencing |
| 4 (1/1/4) | an-a01n02 IPMI / Fencing |
| 5 (1/1/5) | an-a02n01 BCN - Link 1 |
| 6 (1/1/6) | an-a02n02 BCN - Link 1 |
| 7 (1/1/7) | an-a02n01 IPMI / Fencing |
| 8 (1/1/8) | an-a02n02 BCN - Link 1 |
| 9 (1/1/9) | an-striker01 BCN - Link 2 |
| 10 (1/1/10) | -- |
| 11 (1/1/11) | an-a01n01 SN - Link 1 |
| 12 (1/1/12) | an-a01n02 SN - Link 1 |
| 13 (1/1/13) | an-a02n01 SN - Link 1 |
| 14 (1/1/14) | an-a02n02 SN - Link 1 |
| 15 (1/1/15) | an-a01n01 IFN - Link 1 |
| 16 (1/1/16) | an-a01n02 IFN - Link 1 |
| 17 (1/1/17) | an-a02n01 IFN - Link 1 |
| 18 (1/1/18) | an-a02n02 IFN - Link 1 |
| 19 (1/1/19) | an-striker01 IFN - Link 1 |
| 20 (1/1/20) | -- |
| 21 (1/1/21) | -- |
| 22 (1/1/22) | -- |
| 23 (1/1/23) | -- |
| 24 (1/1/24) | Uplink 1 |

an-switch02

| Stack | Back-Channel Network | Storage Network | Internet-Facing Network |
|---|---|---|---|
| Unused | VID 100 | VID 200 | VID 300 |

| Port | Connected To |
|---|---|
| X1 (2/2/1) | To an-switch01 X3 |
| X2 (2/2/2) | -- |
| X3 (2/2/3) | To an-switch01 X1 |
| X4 (2/2/4) | -- |
| 1 (2/1/1) | an-a01n01 BCN - Link 2 |
| 2 (2/1/2) | an-a01n02 BCN - Link 2 |
| 3 (2/1/3) | an-pdu01 |
| 4 (2/1/4) | an-pdu02 |
| 5 (2/1/5) | an-a02n01 BCN - Link 2 |
| 6 (2/1/6) | an-a02n02 BCN - Link 2 |
| 7 (2/1/7) | an-ups01 |
| 8 (2/1/8) | an-ups02 |
| 9 (2/1/9) | an-striker02 BCN - Link 1 |
| 10 (2/1/10) | -- |
| 11 (2/1/11) | an-a01n01 SN - Link 2 |
| 12 (2/1/12) | an-a01n02 SN - Link 2 |
| 13 (2/1/13) | an-a02n01 SN - Link 2 |
| 14 (2/1/14) | an-a02n02 SN - Link 2 |
| 15 (2/1/15) | an-a01n01 IFN - Link 2 |
| 16 (2/1/16) | an-a01n02 IFN - Link 2 |
| 17 (2/1/17) | an-a02n01 IFN - Link 2 |
| 18 (2/1/18) | an-a02n02 IFN - Link 2 |
| 19 (2/1/19) | an-striker01 IFN - Link 2 |
| 20 (2/1/20) | -- |
| 21 (2/1/21) | -- |
| 22 (2/1/22) | -- |
| 23 (2/1/23) | -- |
| 24 (2/1/24) | Uplink 2 |

Subnets - Giving IP Addresses Meaning

| Note: This example below is specific to this sample install. For a more complete review of Networking in an Anvil! Cluster, please see: Anvil! Networking. |

To further build on consistency, the Anvil! platform uses, everywhere possible, the same subnets for the BCN and SN across all installs. The IFN is often outside of our control, so there is little we can do to enforce consistency there. That doesn't matter much though, because as stated earlier, all inter-Anvil! communication occurs on the BCN, save for the storage replication.

We use the subnets to identify both role and IP.

- The first two octets define the subnet.

- The third octet defines the device role.

- The fourth octet identifies the device.

Let's look at this more closely.

| Purpose | Subnet | Notes |
|---|---|---|
| Back-Channel Network 1 | 10.201.x.y/16 | Each node will use 10.201.50.x where x matches the node ID. |
| Internet-Facing Network 1 | variable | In almost all cases, the IFN exists before you arrive, and you have no influence over the available range of IP addresses. The existing network administrator will need to provide a static IP address for each subnode and dashboard on the network. |
| Storage Network 1 | 10.101.x.y/16 | |
| Migration Network 1 | 10.199.x.y/16 | |

Hostnames - Cattle

Naming machines in an Anvil! definitely falls into the 'cattle' side of "Pets vs. Cattle" (ref: slide #29). The host names used in Anvil! systems serve a purpose.

All host names in Anvil! systems look like this;

- <prefix>-<purpose><sequence>.<domain_or_suffix>

- The prefix is used to identify a group of Anvil! systems. Generally it is a short string used to identify a client, location or division.

- The purpose indicates the role the device plays.

- Nodes use the format 'aXXnYY', where 'XX' is the Anvil! sequence number and 'YY' is the node number.

- All other devices have a descriptive name; 'switch' for ethernet switches, 'pdu' for switched PDUs, 'ups' for UPSes and 'striker' for dashboards.

- The sequence number is a simple integer, zero-padded.

As an example, Alteeve's general use prefix is simply 'an' (Alteeve's Niche! Inc. is our full name) and our domain is 'alteeve.ca'. So with this, the first UPS on the first foundation pack would be 'an-ups01.alteeve.ca'. The first UPS in the second foundation pack would be 'an-ups03.alteeve.ca' and so on. The second node of the first node pair would be 'an-a01n02.alteeve.ca' (anvil 01, node 02). The first node of the fifth pair would be 'an-a05n01.alteeve.ca'.
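Because the naming pattern is so regular, a host name can be split back into its parts mechanically. The following is a minimal sketch of that idea, not part of the Anvil! tooling; the function name `parse_hostname` and the assumption that the prefix contains only lowercase letters and digits are illustrative.

```python
import re

# <prefix>-<purpose><sequence>.<domain_or_suffix>
# Nodes use 'aXXnYY'; other devices use a descriptive word plus a
# zero-padded sequence number. The prefix character set is an assumption.
HOSTNAME_RE = re.compile(
    r"^(?P<prefix>[a-z0-9]+)-"
    r"(?:a(?P<anvil>\d+)n(?P<node>\d+)|(?P<purpose>[a-z]+)(?P<sequence>\d+))"
    r"\.(?P<domain>.+)$"
)


def parse_hostname(hostname):
    """Split an Anvil!-style host name into its parts (hypothetical helper)."""
    match = HOSTNAME_RE.match(hostname)
    if match is None:
        raise ValueError(f"not an Anvil!-style host name: {hostname}")
    parts = match.groupdict()
    if parts["anvil"] is not None:
        # Node names carry both the Anvil! sequence and the node number.
        return {
            "prefix": parts["prefix"],
            "purpose": "node",
            "anvil": int(parts["anvil"]),
            "node": int(parts["node"]),
            "domain": parts["domain"],
        }
    return {
        "prefix": parts["prefix"],
        "purpose": parts["purpose"],
        "sequence": int(parts["sequence"]),
        "domain": parts["domain"],
    }


if __name__ == "__main__":
    print(parse_hostname("an-a01n02.alteeve.ca"))
    print(parse_hostname("an-ups03.alteeve.ca"))
```

Using the examples above, 'an-a01n02.alteeve.ca' parses as Anvil! 1, node 2, and 'an-ups03.alteeve.ca' parses as purpose 'ups' with sequence 3.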

To simplify things further, the Anvil! uses the local '/etc/hosts' file and a set of suffixes to denote specific networks on multi-homed devices, like nodes and dashboards. They are;

- '<short_hostname>.bcn' - Names that resolve to the IP of the target on the Back-Channel Network.

- '<short_hostname>.sn' - Names that resolve to the IP of the target on the Storage Network.

- '<short_hostname>.ifn' - Names that resolve to the IP of the target on the Internet-Facing Network.

- '<short_hostname>.ipmi' - Names that resolve to a node's IPMI BMC, used when fencing a target node.

Pretty simple, but again, consistency is a key part of availability.
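As a rough illustration of how these suffixes map onto '/etc/hosts' entries, the sketch below builds the lines for one node. The helper name `hosts_entries` is hypothetical, the addresses are the ones used for 'an-a01n01' in this sample install, and the real file written by the Anvil! may be laid out differently.

```python
def hosts_entries(short_hostname, addresses):
    """Return /etc/hosts lines for each network suffix that has an IP.

    `addresses` maps a suffix ('bcn', 'sn', 'ifn', 'ipmi') to an IP string.
    This is an illustrative helper, not part of the Anvil! code base.
    """
    lines = []
    for suffix in ("bcn", "sn", "ifn", "ipmi"):
        ip = addresses.get(suffix)
        if ip is not None:
            # Standard hosts-file layout: address, whitespace, name.
            lines.append(f"{ip}\t{short_hostname}.{suffix}")
    return lines


if __name__ == "__main__":
    sample = {
        "bcn": "10.201.10.1",
        "sn": "10.101.10.1",
        "ifn": "10.255.10.1",
        "ipmi": "10.201.11.1",
    }
    print("\n".join(hosts_entries("an-a01n01", sample)))
```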

Putting it together

This will be clearer with a diagram of a typical Anvil! IP load-out. In this example, we will use the IFN subnet '10.255.0.0/16' and use the third and fourth octets as we do in the BCN and SN. Of course, this is an ideal situation and rarely possible.

| Device | Back-Channel Network 1 IP | Internet-Facing Network 1 IP | Storage Network 1 IP | Migration Network 1 IP |

|---|---|---|---|---|

| an-switch01.alteeve.ca | 10.201.1.1 | -- | -- | -- |

| an-switch02.alteeve.ca | 10.201.1.2 ♦ | -- | -- | -- |

| an-pdu01.alteeve.ca | 10.201.2.1 | -- | -- | -- |

| an-pdu02.alteeve.ca | 10.201.2.2 | -- | -- | -- |

| an-ups01.alteeve.ca | 10.201.3.1 | -- | -- | -- |

| an-ups02.alteeve.ca | 10.201.3.2 | -- | -- | -- |

| an-striker01.alteeve.ca | 10.201.4.1 | 10.255.4.1 | -- | -- |

| an-striker02.alteeve.ca | 10.201.4.2 | 10.255.4.2 | -- | -- |

| an-a01n01.alteeve.ca | 10.201.10.1 | 10.255.10.1 | 10.101.10.1 | 10.199.10.1 |

| an-a01n01.ipmi | 10.201.11.1 | -- | -- | -- |

| an-a01n02.alteeve.ca | 10.201.10.2 | 10.255.10.2 | 10.101.10.2 | 10.199.10.2 |

| an-a01n02.ipmi | 10.201.11.2 | -- | -- | -- |

| an-a02n01.alteeve.ca | 10.201.12.1 | 10.255.12.1 | 10.101.12.1 | 10.199.12.1 |

| an-a02n01.ipmi | 10.201.13.1 | -- | -- | -- |

| an-a02n02.alteeve.ca | 10.201.12.2 | 10.255.12.2 | 10.101.12.2 | 10.199.12.2 |

| an-a02n02.ipmi | 10.201.13.2 | -- | -- | -- |

♦ Rarely used as most installs use stacked switches.

That's it. It might take a bit to explain at first, but once you see the pattern, it is pretty easy to follow. With this, you can walk up to any Anvil! and immediately know what everything is, how to access it and what the parts do.

ScanCore - The "Intelligence" In 'Intelligent Availability'

Thus far, what we have talked about describes a really good High Availability setup. We've covered all the ways that the system will be protected from a failure, but not all of these backup systems are fault tolerant.

More specifically, the failure of a node will cause any servers hosted on it to lose power, requiring a fresh boot to recover them. This is generally completed in 30~90 seconds, but the interruption can be significant.

ScanCore is Alteeve's attempt to solve this problem. It runs on all nodes and dashboards, and the instances work together to try to predict failures before they happen and migrate servers to the healthier peer before the node can fail. We'll explore this more in a moment.

But wait, there's more!

ScanCore also determines when threat models change. Consider this;

Under normal operation, the most likely cause of interruption is component failure. So during normal operation, the best thing to do is to maintain full redundancy.

- Want more detail? Please see the ScanCore page.

Load Shedding

What about when the power fails?

What about when HVAC fails and the room starts heating up?

In these scenarios, the greatest threat is no longer component failure. In both cases, though using different metrics, ScanCore will decide that the best thing to do is to shed load. This will reduce the draw on the batteries in the first scenario, or reduce the thermal output in the second case.

The goal in these cases is to buy time. Shedding load doesn't fix the underlying problem, but it gives you more time to fix it and, hopefully, avoid a full shut down. If, however, the problem lasts for too long, then ScanCore will decide when it can't wait any longer and will gracefully shut down the hosted servers and then power off the remaining node.

What then?

The Striker dashboards are considered sacrificial lambs. That is to say, we're willing to risk them in order to protect the node pairs. So they are allowed to keep running until the hardware forcibly shuts down (either because the UPSes completely drain or the temperature goes so high that hardware safety systems kick in). If this happens, the dashboards are configured to boot on power restore, after which they will again start monitoring the general environmental health.

When power is restored and the UPSes have charged to a safe level, or when the temperature returns to safe levels, ScanCore on the dashboards will boot the nodes back up. If power is restored, or temperatures drop to safe levels, while in a load shed state, ScanCore will wait until minimum safe levels (charge or temperature) return before restoring full redundancy.
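The load shedding behaviour described above can be pictured as a small decision function. This is only a sketch under stated assumptions: the thresholds are invented placeholders rather than ScanCore defaults, the names `Environment` and `choose_action` are hypothetical, and the "problem lasts too long" condition is approximated here by critical charge and temperature levels.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    on_mains: bool         # True when the UPSes report input power
    ups_charge_pct: float  # lowest charge level across the UPSes
    room_temp_c: float     # ambient temperature


# Illustrative placeholder thresholds, not ScanCore defaults.
SAFE_CHARGE_PCT = 45.0
CRITICAL_CHARGE_PCT = 20.0
WARN_TEMP_C = 35.0
CRITICAL_TEMP_C = 45.0


def choose_action(env, load_shed):
    """Return 'run_redundant', 'shed_load', 'hold' or 'shut_down'."""
    # Out of time: batteries nearly drained or the room is dangerously hot.
    if not env.on_mains and env.ups_charge_pct <= CRITICAL_CHARGE_PCT:
        return "shut_down"
    if env.room_temp_c >= CRITICAL_TEMP_C:
        return "shut_down"
    # The threat is power or heat, so reduce draw and thermal output.
    if not env.on_mains or env.room_temp_c >= WARN_TEMP_C:
        return "shed_load"
    # The problem has passed, but wait for a safe margin before
    # restoring full redundancy.
    if load_shed and env.ups_charge_pct < SAFE_CHARGE_PCT:
        return "hold"
    return "run_redundant"


if __name__ == "__main__":
    print(choose_action(Environment(False, 80.0, 22.0), load_shed=False))
    print(choose_action(Environment(True, 30.0, 22.0), load_shed=True))
```

The point of the sketch is the ordering: shut down only as a last resort, shed load while the threat is active, and hold in the shed state until safe margins return.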

Failure Prediction

Sometimes, there is no warning. A server will fail dramatically and even in the post-mortem, no signs of the impending failure could be found.

An example of this was a rare fault in the voltage regulators on some node mainboards that a tier-1 vendor was providing us. Up to a year or two into production, the voltage regulator would fail catastrophically. There were no voltage irregularities beforehand that we could detect, and so the failure took down any hosted servers.

In a scenario like this, Intelligent Availability provided no comfort.

Thankfully, this is the exception, not the rule!

There are many ways to predict a pending failure. Lets look at storage as an example, as this is something most people have experience with.

SAS hard drives and proper hardware RAID controllers report a lot of useful information about each drive, such as temperature, media error counters and so on. Drives almost always start showing signs of failure long before they actually die. Here's how ScanCore deals with this.

- A drive starts to throw error counters. It's not unexpected for drives to throw periodic errors and so, this alone isn't enough for ScanCore to take action on its own. It will notify the admin, however.

- If the error counters start to climb, the admin will contact their hardware vendor and request a pre-failure replacement.

- If the drive is not replaced in time, the drive will fail and the RAID array will enter a degraded state. Now ScanCore acts!

In this scenario, the peer node is assumed to have no faults. So ScanCore says "OK, sure, the current host is alive, but it is degraded. So let's be safe and live-migrate the servers over to the peer." Now, if a second drive were to fail and the node went offline, there would be no interruption because the servers have already vacated the old host!
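The escalation above can be sketched as a function that maps one drive scan to a list of responses. This is only an illustration under stated assumptions: the function name, its parameters and the returned strings are hypothetical, and a real scan agent would read these values from the RAID controller.

```python
def respond_to_drive_scan(media_errors, previous_media_errors,
                          array_degraded, peer_healthy):
    """Return the responses for one scan of a drive (hypothetical names)."""
    responses = []
    if previous_media_errors == 0 and media_errors > 0:
        # First errors seen: worth telling the admin, not worth acting on.
        responses.append("notify_admin:first_errors")
    elif media_errors > previous_media_errors:
        # Counters are climbing: the admin may want a pre-failure replacement.
        responses.append("notify_admin:errors_climbing")
    if array_degraded and peer_healthy:
        # The host is alive but degraded, so move the servers to safety.
        responses.append("live_migrate_to_peer")
    return responses
```

The point of the sketch is the asymmetry: error counters only ever produce notifications, while a degraded array with a healthy peer is what triggers an action.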

All Failures Are Not Created Equal

In the example above, we looked at how ScanCore would deal with a faulty drive. Let's step back now, and start a new scenario where both nodes are healthy.

In this scenario, we'll say that node 1 is hosting the servers. So with the stage set, let's look at a case where both nodes develop problems.

- Node 1 loses a cooling fan. The system can handle this without overheating, which is confirmed by no thermal sensors registering problems.

- ScanCore says "well, node 2 is perfectly fine, so let's move the servers over" and then proceeds to do just that.

- Within a few minutes, all servers have moved over to Node 2 without any user noticing anything and without any input from an admin.

- Later, Node 2 loses a hard drive and its RAID array degrades.

- ScanCore looks at the problems on both nodes and decides, "ya, you know what? A failed fan doesn't look so bad anymore" and live-migrates the servers back to Node 1.

- A replacement hard drive is found and Node 2's array starts rebuilding. Nothing happens yet because the array remains degraded until the rebuild completes.

- The rebuild completes, and once again, Node 2 is perfectly fine. For some reason though, Node 1 is still without a fan. So ScanCore says "Welp, Node 2 is healthier" and live-migrates the servers back to Node 2.

- Finally, someone finds an unobtanium fan and fixes Node 1. ScanCore now sees that both nodes are healthy, but it decides to leave the servers on Node 2.

Why?

It is important to understand that in an Anvil! system, nodes don't have a "primary" or "backup" role. These roles are determined purely by where the servers happen to be at any given time. So in the scenario above, when Node 1 was fixed, there was no reason to do anything. Node 2 is just as good a host as Node 1, so ScanCore decides that Node 1 is now the backup and leaves things as they are.
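One way to picture this is to give each fault a weight and compare the totals for the two nodes. The sketch below is an assumption-laden illustration, not ScanCore's real scoring: the fault names, the weights and the function names are all made up. What it does show is the tie rule, where equal health means the servers stay put.

```python
# Hypothetical fault weights; higher means a more serious problem.
FAULT_WEIGHTS = {"fan_failed": 1, "raid_degraded": 5}


def health_score(faults):
    """Sum the weights of a node's current faults (0 means healthy)."""
    return sum(FAULT_WEIGHTS[fault] for fault in faults)


def choose_host(current_host, faults_by_node):
    """Return the node that should host the servers in a two-node pair."""
    peer = next(node for node in faults_by_node if node != current_host)
    current = health_score(faults_by_node[current_host])
    other = health_score(faults_by_node[peer])
    # Migrate only when the peer is strictly healthier. On a tie, stay
    # where we are, because every migration carries a small risk.
    return peer if other < current else current_host


# Replaying the scenario above, starting with the servers on node 1.
host = "node1"
timeline = [
    {"node1": ["fan_failed"], "node2": []},                 # fan fails
    {"node1": ["fan_failed"], "node2": ["raid_degraded"]},  # drive fails
    {"node1": ["fan_failed"], "node2": ["raid_degraded"]},  # rebuild running
    {"node1": ["fan_failed"], "node2": []},                 # rebuild complete
    {"node1": [], "node2": []},                             # fan replaced
]
history = []
for faults in timeline:
    host = choose_host(host, faults)
    history.append(host)
```

After the loop, `history` holds the hosting node at each step. The last entry stays on node 2 because a strict "healthier than" comparison never moves servers between two equally healthy nodes.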

Live-migrations of servers are considered very safe, but not perfectly safe.

Remember; Everything can fail.

This is another example of how accepting that everything can fail is a strength. It's natural to want to move the servers back to node 1. Why not? That's where they normally are and normal is good. This way of thinking saves us from that temptation.

Bonus! Self-Destruct Sequence, Engage!

We were challenged by a client to produce an Anvil! system that could render data forensically unrecoverable in a maximum of 300 seconds.


I won't lie, this was the most fun I've had in my 20+ year professional career.

For the record, no, thermite on the hard drives was not good enough. It makes for great movies, but no physical method could be trusted to destroy the data thoroughly enough.

Wait, back up... Why would you want to destroy a platform designed to protect your systems?

For privacy reasons, we can't speak to details. Generally though, the threat model was one where an attacker with effectively limitless resources would gain physical control of the Anvil! system. This attacker could spend considerable time and money, with access to top level experts, to try and recover the data.

The Anvil! system is ideal for use in air-gapped environments. It can be extremely rugged and take a significant beating. It can be deployed in places where the user may not care if a server goes down for a few hours, but where replacement parts can't be ordered. In these cases, the system must be able to live, even if it is limping, for weeks or months until repairs can be made.

This capability means that Anvil! systems can be found in potentially hostile locations. It is feasible that people will have to abandon their equipment and flee for safety. In such a scenario, the value of the hardware is hardly a concern. Securing the data, however, is still critical.

Lock-Out

| Note: This feature is available in M2, but pending addition to M3. If you have a use-case for this feature, please contact us and we'll try to push this feature up the ToDo list. |

In this particular case, the client's equipment would be deployed in a secure location with extremely limited human access. If a node rebooted, it was not possible for a user to enter a boot-time passphrase.

With all this in mind, here is what we did.

- We used Self-Encrypting Drives that used hardware AES-256 encryption.

- We used Broadcom (formerly LSI) controllers with their SafeStore option.

Details of how we configure this can be found here.

Given the requirement for unattended booting, we had to store the passphrase in the RAID controller. The up-side to this is that we were able to develop an intermediary "lock-out" option, to sit between normal operation and full-on self-destruct.

If the client enters a situation where they think they might lose control over the system, they have the option to (remotely) set and require a boot-time passphrase. Once set, the nodes can be rebooted. This leaves them recoverable only by someone who knows the passphrase.

Of course, this leaves the system vulnerable to rubber-hose decryption. In the client's case, this was considered acceptable because they had a few secure remote access links available that would allow out-of-band access to both fire the script and restore normal access when the threat had passed. Thanks to this ability to remotely lock the system, it was deemed fairly safe, as anyone who might be captured would not know the lock-out passphrase.

Later, when the threat passed, the client would use this access to unlock the storage and boot the nodes. Once booted, they could then re-enable normal boot.

Obviously, this approach is not without risk.

Killing Me Softly

When an evacuation was certain, local staff or remote users could access the nodes and fire the self-destruct script. Once complete, the system would have no data at all to recover. Even if the old passphrase was somehow known, the salt on the drives would be gone, so the data would be forever lost.

This is the core of the self-destruct. It doesn't make for great movies, but it is exceptionally effective.

Oh, and we can use this to destroy nearly 40 TB per node in under 30 seconds, 1/10th the maximum time.

So these are the steps that happen when the self-destruct sequence is initiated;

- The script is invoked with a passphrase, and the validity of this passphrase is checked. Note that this is weak protection, but it is enough to prevent accidental invocation.

- A RAM disk is created and certain needed tools are copied to this disk.

- A list of virtual drives (arrays) and their disks is created.

- The actual self-destruct sequence begins;

- The cache policy is switched to write-through.

- The cache is flushed.

- The virtual drive(s) are deleted. At this point, the OS starts to throw significant errors.

- The drives are sent the "instant secure erase" command, deleting their internal passphrase and salt. The data is now unrecoverable.

- The old key is deleted from the controller, just to be safe.

- The magic SysRq 'o' is sent to the kernel, triggering a power off.

This leaves approximately 240 seconds for the RAM to drain, reducing the risk of any latent data being recoverable via a cold boot ("ice") attack on the RAM itself.
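The ordering of the steps above matters, and a dry-run outline makes that easier to see. The sketch below is purely illustrative: it runs no storage commands at all, the function and variable names are hypothetical, and the step descriptions are just labels taken from the list above. It only shows the passphrase guard and the fixed order in which a real script would proceed.

```python
import hmac

# Labels only, in the order given above. Nothing here touches hardware.
SELF_DESTRUCT_STEPS = (
    "create a RAM disk and copy the needed tools to it",
    "list the virtual drives (arrays) and their disks",
    "switch the cache policy to write-through",
    "flush the cache",
    "delete the virtual drive(s)",
    "send 'instant secure erase' to each drive",
    "delete the old key from the controller",
    "send magic SysRq 'o' to power off",
)


def plan_self_destruct(passphrase, expected_passphrase, log=print):
    """Dry run: return the ordered steps, or nothing on a bad passphrase."""
    # Weak protection by design; it only guards against accidental use.
    supplied = passphrase.encode()
    expected = expected_passphrase.encode()
    if not hmac.compare_digest(supplied, expected):
        log("passphrase rejected, nothing to do")
        return []
    planned = []
    for number, step in enumerate(SELF_DESTRUCT_STEPS, start=1):
        log(f"[dry run] step {number}: {step}")
        planned.append(step)
    return planned
```

The tools are copied to a RAM disk before anything destructive happens because the OS starts throwing errors once the virtual drives are gone, and the cache is flushed before the arrays are deleted so nothing is left sitting in the controller.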

Once complete, the hardware is left 100% intact and can be re-used.

Any questions, feedback, advice, complaints or meanderings are welcome.

Alteeve's Niche! | Enterprise Support: Alteeve Support | Community Support

© Alteeve's Niche! Inc. 1997-2024 | Anvil! "Intelligent Availability®" Platform

legal stuff: All info is provided "As-Is". Do not use anything here unless you are willing and able to take responsibility for your own actions.