Abandoned - 2-Node EL5 Cluster


This paper is VERY much a work in progress. It is NOT complete and should NOT be followed. It is largely a dumping ground for my thoughts and notes as I work through the process. Please do not reference or link to this talk until this warning is removed. Really, it would be very embarrassing for both of us. :)

Overview

This first cluster How-To will cover building a two-node cluster. This is a good place to start, as the step up to 3+ nodes is significant. Having a working 2-node cluster will give you a much stronger foundation for that step up. In fact, this How-To will be a prerequisite for the 3+ Node How-To.

The initial cluster will be built for two purposes:

- VM Hosting; Make a virtual server "float" between two real servers for high-availability.

- iSCSI Target on top of LVM + DRBD.

Progress

Feb. 02, 2010 I finally got the hardware in place to start building the cluster I will need to create this How To. Progress should now really start!

Oct. 15, 2009 I've gotten permission from Canada Equity's owner to use the docs I created for them on this public How To. I will post here once it is complete and signed off on.

Pics and Screenshots

These are in no particular order yet.

OS Install

Start with a stock CentOS 5 install. This How-To uses CentOS 5.4 x86_64, however it should be fairly easy to adapt to other CentOS 5*, RHEL5 or other RHEL5-based distributions.

Requirements

You will need two computer systems with multi-core CPUs and proper virtualization support. Each node will need a minimum of two network cards, ideally three. This paper will use three, but if you only have two, you can merge the back-channel and Internet-facing NICs (that will make more sense later).
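One way to check for hardware virtualization support before installing is to look for the 'vmx' (Intel) or 'svm' (AMD) CPU flags. The following is only a minimal sketch; the function name 'has_hw_virt' is made up for this example, and the flags may not be visible once you have booted into a Xen dom0 kernel, so run it from a regular kernel or a live CD.

```shell
# has_hw_virt [FILE]
# Succeeds (exit 0) if a 'flags' line lists 'vmx' (Intel) or 'svm' (AMD).
# The optional FILE argument is an assumption of this sketch; it only exists
# so the check can be pointed at a saved copy of /proc/cpuinfo.
has_hw_virt() {
    grep '^flags' "${1:-/proc/cpuinfo}" | grep -Eqw 'vmx|svm'
}
```

Calling 'has_hw_virt && echo "HVM capable"' on each node before starting should be enough to catch a CPU, or a BIOS setting, that hides the feature.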

The nodes used in this article are:

- ASUS M4A78L-M

- AMD Athlon II x2 250

- 2GB Kingston DDR2 KVR800D2N6K2/4G (split between the two nodes)

- 2x Intel 82540 PCI NICs

Note: This is not an endorsement of the above hardware. It is simply what was determined to be the most economical hardware available at the time. Had budget been less of a concern, other hardware may well have been chosen.

Kickstart

This is a sample kickstart script used by this paper. Be sure to set your own password hash and network settings.

Warning! This kickstart script will erase your hard drive! Adapt it, don't blindly use it.

# Kickstart file automatically generated by anaconda.

install

cdrom

lang en_US.UTF-8

keyboard us

xconfig --startxonboot

network --device eth0 --bootproto static --ip 192.168.1.71 --netmask 255.255.255.0 --gateway 192.168.1.1 --nameserver 192.139.81.117,192.139.81.1 --hostname san01.alteeve.com

rootpw --iscrypted #your_secret_hash

firewall --disabled

selinux --permissive

authconfig --enableshadow --enablemd5

timezone --utc America/Toronto

bootloader --location=mbr --driveorder=sda --append="rhgb quiet dom0_mem=512M"

# Hard drive info.

zerombr yes

clearpart --linux --drives=sda

part /boot --fstype ext3 --size=250 --asprimary

part pv.11 --size=100 --grow

volgroup san01 --pesize=32768 pv.11

logvol / --fstype ext3 --name=lv01 --vgname=san01 --size=20000

logvol swap --fstype swap --name=lv00 --vgname=san01 --size=2048

%packages

@cluster-storage

@development-libs

@editors

@text-internet

@virtualization

@gnome-desktop

@core

@base

@clustering

@base-x

@development-tools

@graphical-internet

kmod-gnbd-xen

kmod-gfs-xen

isns-utils

perl-XML-SAX

perl-XML-NamespaceSupport

lynx

bridge-utils

device-mapper-multipath

xorg-x11-server-Xnest

xorg-x11-server-Xvfb

pexpect

imake

-slrn

-fetchmail

-mutt

-cadaver

-vino

-evince

-gok

-gnome-audio

-esc

-gimp-print-utils

-desktop-printing

-im-chooser

-file-roller

-gnome-mag

-nautilus-sendto

-eog

-gnome-pilot

-orca

-mgetty

-dump

-dosfstools

-autofs

-pcmciautils

-dos2unix

-rp-pppoe

-unix2dos

-mtr

-mkbootdisk

-irda-utils

-rdist

-bluez-utils

-talk

-synaptics

-linuxwacom

-wdaemon

-evolution

-nspluginwrapper

-evolution-webcal

-ekiga

-evolution-connector

Post OS Install

Once the OS is installed, we need to do some ground work.

- Limit dom0's memory.

- Change the default run-level.

- Change when xend starts.

- Setup networking.

Limit dom0's Memory

Normally, dom0 will claim and use memory not allocated to virtual machines. This can cause trouble though if, for example, you've moved a VM off of a node and then want to move it or another VM back to the first node. For a period of time, the node will claim that there is not enough free memory for the migration. By setting a hard limit of dom0's memory usage, this scenario won't happen and you will not need to delay migrations.

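To do this, add dom0_mem=512M to the Xen hypervisor's 'kernel' line in grub (/boot/grub/grub.conf). dom0_mem is an argument to the hypervisor (xen.gz) itself, not to the dom0 Linux kernel that is loaded by the first 'module' line. For example, you should have an entry like:

title CentOS (2.6.18-164.11.1.el5xen)

root (hd0,0)

kernel /xen.gz-2.6.18-164.11.1.el5 dom0_mem=512M

module /vmlinuz-2.6.18-164.11.1.el5xen ro root=/dev/san02/lv01 rhgb quiet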

module /initrd-2.6.18-164.11.1.el5xen.img

You can replace '512M' with the amount of RAM you want to allocate to dom0.

REMEMBER!

If you update your kernel, be sure to re-add this argument to the new kernel's argument list.
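Because the argument is easy to lose on a kernel update, a small check can help. The sketch below walks a grub.conf-style file and reports, for every boot entry that loads xen.gz, whether dom0_mem= appears anywhere in that entry. The function name 'check_dom0_mem' is hypothetical and the parsing is deliberately simple.

```shell
# check_dom0_mem FILE
# Prints one line per Xen boot entry and returns non-zero if any entry
# lacks a dom0_mem= argument.
check_dom0_mem() {
    awk '
        function report() {
            if (title != "" && is_xen) {
                status = has_arg ? "ok" : "MISSING"
                print status ": " title
                if (!has_arg) bad = 1
            }
        }
        /^[ \t]*#/ { next }              # ignore commented-out lines
        /^[ \t]*title[ \t]/ {            # a new boot entry starts here
            report()
            title = $0
            sub(/^[ \t]*title[ \t]+/, "", title)
            is_xen = 0; has_arg = 0
            next
        }
        /xen\.gz/   { is_xen = 1 }       # this entry boots the hypervisor
        /dom0_mem=/ { has_arg = 1 }      # the argument is present
        END { report(); exit bad + 0 }
    ' "$1"
}
```

Running 'check_dom0_mem /boot/grub/grub.conf' after each kernel update should list any new entry that still needs the argument.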

Change the Default Run-Level

If you don't plan to work on your nodes directly, it makes sense to switch the default run-level from 5 to 3. This prevents GNOME from starting at boot, freeing up a lot of memory and system resources.

To do this, edit '/etc/inittab' and change the 'id:5:initdefault:' line to 'id:3:initdefault:'. Then, type:

init 3
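If you would rather script the edit than make it by hand, something like the following should work. It is a sketch only; 'set_default_runlevel' is a name invented here, and it refuses run-levels 0 and 6 because a default of halt or reboot would make the node unusable.

```shell
# set_default_runlevel FILE LEVEL
# Rewrites the 'id:N:initdefault:' line of an inittab-style file in place.
set_default_runlevel() {
    case $2 in
        [1-5]) ;;
        *) echo "refusing run-level '$2'" >&2; return 1 ;;
    esac
    sdr_tmp=$(mktemp) || return 1
    # Write to a temporary file first, then copy back so that the original
    # file keeps its ownership and permissions.
    sed "s/^id:[0-9]:initdefault:/id:$2:initdefault:/" "$1" > "$sdr_tmp" &&
        cat "$sdr_tmp" > "$1"
    sdr_rc=$?
    rm -f "$sdr_tmp"
    return $sdr_rc
}
```

On a node this would be called as 'set_default_runlevel /etc/inittab 3', followed by the 'init 3' shown above.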

Change when xend starts

Normally, xend starts at priority 98 in /etc/rcX.d/. This can cause problems with other packages that expect the network to be stable, because xend takes all of the network interfaces down when it starts. To prevent these problems, we will move the xend init script to position 11.

First, edit the actual initialization script and change the line '# chkconfig: 2345 98 01' to '# chkconfig: 2345 11 89'.

vim /etc/init.d/xend

Change:

# chkconfig: 2345 98 01

To:

# chkconfig: 2345 11 89

Now, use chkconfig to change the run-level priority to start at 11 and exit at 89:

chkconfig --del xend

chkconfig --add xend

You should now see the file /etc/rc3.d/S11xend.
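The header edit can also be scripted. This is a minimal sketch, assuming the init script carries a single '# chkconfig:' header line; the function name 'set_init_priority' is hypothetical.

```shell
# set_init_priority FILE START STOP
# Rewrites the start and stop priorities on the '# chkconfig:' header line,
# leaving the run-level field (for example 2345) untouched.
set_init_priority() {
    case "$2$3" in
        ''|*[!0-9]*) echo "priorities must be numeric" >&2; return 1 ;;
    esac
    sip_tmp=$(mktemp) || return 1
    sed "s/^# chkconfig:[[:space:]]*\([0-9-]*\)[[:space:]].*/# chkconfig: \1 $2 $3/" \
        "$1" > "$sip_tmp" && cat "$sip_tmp" > "$1"
    sip_rc=$?
    rm -f "$sip_tmp"
    return $sip_rc
}
```

After 'set_init_priority /etc/init.d/xend 11 89', run the same 'chkconfig --del xend' and 'chkconfig --add xend' pair as above so that the symlinks are rebuilt from the new header.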

Though it is outside the scope of this How-To, you may want to know more about networking in Xen. Please see this article:

eth1 and eth2

Configure your eth1 and eth2 devices to also use static IPs. If your hardware has an integrated IPMI controller piggy-backing on one of the interfaces, set that device to be your back-channel. This usually piggy-backs on eth0. If this is the case for you, use eth0 as your back-channel and eth2 as your Internet-facing device.

Next, set your highest-performance/bandwidth device to be your DRBD LAN. Use your remaining ethernet device for your Internet-facing card.

This paper used the following:

- eth0

- Internet-facing on the 192.168.1.0/24 subnet.

- eth1

- DRBD LAN on the 10.0.0.0/24 subnet.

- eth2

- Back-channel LAN on the 10.0.1.0/24 subnet.

Which interfaces and subnets you use are entirely up to you. The only things to pay attention to are:

- Your DRBD LAN is used for nothing but DRBD communication.

- Your back-channel is set up on your IPMI/management interfaces, where applicable.

- Your Internet-facing LAN is the one with the default gateway set up.
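As an illustration of the layout above, here is a small helper that prints the body of an ifcfg file for a statically addressed interface. It is a sketch under a few assumptions: 'make_ifcfg' is a made-up name, only the most basic keys are written, and the gateway is passed only for the Internet-facing device.

```shell
# make_ifcfg DEVICE IPADDR NETMASK [GATEWAY]
# Prints a minimal static ifcfg body on stdout. GATEWAY is optional and
# should only be given for the Internet-facing interface.
make_ifcfg() {
    printf 'DEVICE=%s\nBOOTPROTO=static\nONBOOT=yes\nIPADDR=%s\nNETMASK=%s\n' \
        "$1" "$2" "$3"
    if [ -n "${4:-}" ]; then
        printf 'GATEWAY=%s\n' "$4"
    fi
}
```

For example, 'make_ifcfg eth1 10.0.0.71 255.255.255.0 > /etc/sysconfig/network-scripts/ifcfg-eth1' would describe the DRBD LAN interface used in this paper. Review the result before restarting the network.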

/etc/hosts

Add an entry for your two nodes using the back-channel IPs.

Add to both nodes (adapt for your network)

10.0.1.71 an_san01 an_san01.alteeve.com

10.0.1.72 an_san02 an_san02.alteeve.com

iptables

Be sure to flush IPTables and disable it from starting on your nodes.

chkconfig --level 2345 iptables off

/etc/init.d/iptables stop

chkconfig --level 2345 ip6tables off

/etc/init.d/ip6tables stop

Initial Cluster Setup

Some things, like cluster-aware LVM, won't work until the cluster is setup. For this reason, we need to setup the cluster infrastructure before proceeding.

If you didn't read up on how networking in Xen works in the 'Change when xend starts' section, now would be a very good time to do so. A lot of the networking from here on in will seem cryptic otherwise, when it's actually fairly straightforward.

Adding New NICs to Xen

To start, check to see if all of your ethernet devices are under Xen's control. You can tell this by running ifconfig and checking to see if there is a pethX corresponding to each ethX device. For example, here is what you would see if only eth0 was under Xen's control:

ifconfig

eth0 Link encap:Ethernet HWaddr 90:E6:BA:71:82:D8

inet addr:192.168.1.71 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::92e6:baff:fe71:82d8/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3035 errors:0 dropped:0 overruns:0 frame:0

TX packets:920 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:611332 (597.0 KiB) TX bytes:118807 (116.0 KiB)

eth1 Link encap:Ethernet HWaddr 00:0E:0C:59:45:78

inet addr:10.0.0.71 Bcast:10.0.0.255 Mask:255.255.255.0

inet6 addr: fe80::20e:cff:fe59:4578/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:15543 errors:0 dropped:0 overruns:0 frame:0

TX packets:15459 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:14170257 (13.5 MiB) TX bytes:14177375 (13.5 MiB)

Base address:0xec00 Memory:febe0000-fec00000

eth2 Link encap:Ethernet HWaddr 00:21:91:19:96:53

inet addr:10.0.1.71 Bcast:10.0.1.255 Mask:255.255.255.0

inet6 addr: fe80::221:91ff:fe19:9653/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:36 errors:0 dropped:0 overruns:0 frame:0

TX packets:58 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:7958 (7.7 KiB) TX bytes:10588 (10.3 KiB)

Interrupt:16

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:34 errors:0 dropped:0 overruns:0 frame:0

TX packets:34 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:5183 (5.0 KiB) TX bytes:5183 (5.0 KiB)

peth0 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

inet6 addr: fe80::fcff:ffff:feff:ffff/64 Scope:Link

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:3049 errors:0 dropped:0 overruns:0 frame:0

TX packets:933 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:612202 (597.8 KiB) TX bytes:120937 (118.1 KiB)

Interrupt:252 Base address:0x6000

vif0.0 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

inet6 addr: fe80::fcff:ffff:feff:ffff/64 Scope:Link

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:926 errors:0 dropped:0 overruns:0 frame:0

TX packets:3041 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:121875 (119.0 KiB) TX bytes:611728 (597.3 KiB)

virbr0 Link encap:Ethernet HWaddr 00:00:00:00:00:00

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

inet6 addr: fe80::200:ff:fe00:0/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:52 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:10166 (9.9 KiB)

xenbr0 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:1991 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:477450 (466.2 KiB) TX bytes:0 (0.0 b)

You'll notice that there is no peth1 or peth2 device, nor their associated virtual devices or bridges. This is because, in my case, I installed the OS with only one NIC installed. If you had all three of your NICs installed, you can probably skip this step, as each of your NICs should already have a matching pethX device.
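Rather than reading through the whole listing by eye, you can filter it. The sketch below reads ifconfig output on stdin and prints every ethX that has no matching pethX. The name 'missing_peth' is made up for this example.

```shell
# missing_peth
# Reads 'ifconfig' output on stdin and prints each ethN device that has no
# matching pethN device, one per line.
missing_peth() {
    awk '
        /^eth[0-9]+/  { eth[substr($1, 4)] = 1 }    # remember N from ethN
        /^peth[0-9]+/ { peth[substr($1, 5)] = 1 }   # remember N from pethN
        END { for (n in eth) if (!(n in peth)) print "eth" n }
    ' | sort
}
```

With the output shown above, 'ifconfig | missing_peth' should print eth1 and eth2. Empty output means every NIC is already under Xen's control.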

Create /etc/xen/scripts/an-network-script

This script will be used by Xen to create bridges for all NICs.

Please note two things:

- You don't need to use the name 'an-network-script'. I suggest this name mainly to keep in line with the rest of the 'AN!x' naming used here.

- If you install convirt, it will create its own bridge script called convirt-xen-multibridge.

First, touch the file and then chmod it to be executable.

touch /etc/xen/scripts/an-network-script

chmod 755 /etc/xen/scripts/an-network-script

Now edit it to contain the following:

vim /etc/xen/scripts/an-network-script

#!/bin/sh

dir=$(dirname "$0")

"$dir/network-bridge" "$@" vifnum=0 netdev=eth0 bridge=xenbr0

"$dir/network-bridge" "$@" vifnum=1 netdev=eth1 bridge=xenbr1

"$dir/network-bridge" "$@" vifnum=2 netdev=eth2 bridge=xenbr2

Now tell Xen to reference that script by editing /etc/xen/xend-config.sxp:

vim /etc/xen/xend-config.sxp

Change the line:

(network-script network-bridge)

To:

#(network-script network-bridge)

(network-script an-network-script)
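The same change can be made non-interactively. This is a sketch only; 'set_network_script' is a hypothetical helper, and it assumes the active '(network-script ...)' line starts in the first column, as it does in the stock file.

```shell
# set_network_script FILE NAME
# Comments out the active (network-script ...) line and adds a new one that
# points at NAME. Running it again with the same NAME changes nothing.
set_network_script() {
    sns_tmp=$(mktemp) || return 1
    awk -v name="$2" '
        $0 == "(network-script " name ")" { print; done = 1; next }
        /^\(network-script / {
            print "#" $0                          # keep the old line, disabled
            print "(network-script " name ")"     # and add the replacement
            done = 1
            next
        }
        { print }
        END { if (!done) exit 1 }                 # no active line was found
    ' "$1" > "$sns_tmp" && cat "$sns_tmp" > "$1"
    sns_rc=$?
    rm -f "$sns_tmp"
    return $sns_rc
}
```

Here it would be called as 'set_network_script /etc/xen/xend-config.sxp an-network-script'.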

Now restart xend:

/etc/init.d/xend restart

restart xend: [ OK ]

If everything worked, you should now be able to run ifconfig and see that all the ethX devices have matching pethX, virtual and bridge devices.

ifconfig

eth0 Link encap:Ethernet HWaddr 90:E6:BA:71:82:D8

inet addr:192.168.1.71 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::92e6:baff:fe71:82d8/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:4662 errors:0 dropped:0 overruns:0 frame:0

TX packets:1366 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:977980 (955.0 KiB) TX bytes:204807 (200.0 KiB)

eth1 Link encap:Ethernet HWaddr 00:0E:0C:59:45:78

inet addr:10.0.0.71 Bcast:10.0.0.255 Mask:255.255.255.0

inet6 addr: fe80::20e:cff:fe59:4578/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:25 errors:0 dropped:0 overruns:0 frame:0

TX packets:49 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2035 (1.9 KiB) TX bytes:11162 (10.9 KiB)

eth2 Link encap:Ethernet HWaddr 00:21:91:19:96:53

inet addr:10.0.1.71 Bcast:10.0.1.255 Mask:255.255.255.0

inet6 addr: fe80::221:91ff:fe19:9653/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:20 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:6540 (6.3 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:34 errors:0 dropped:0 overruns:0 frame:0

TX packets:34 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:5183 (5.0 KiB) TX bytes:5183 (5.0 KiB)

peth0 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

inet6 addr: fe80::fcff:ffff:feff:ffff/64 Scope:Link

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:4705 errors:0 dropped:0 overruns:0 frame:0

TX packets:1413 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:980764 (957.7 KiB) TX bytes:211245 (206.2 KiB)

Interrupt:252 Base address:0x6000

peth1 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

inet6 addr: fe80::fcff:ffff:feff:ffff/64 Scope:Link

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:16562 errors:0 dropped:0 overruns:0 frame:0

TX packets:16440 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:14242544 (13.5 MiB) TX bytes:14254697 (13.5 MiB)

Base address:0xec00 Memory:febe0000-fec00000

peth2 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

inet6 addr: fe80::fcff:ffff:feff:ffff/64 Scope:Link

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:36 errors:0 dropped:0 overruns:0 frame:0

TX packets:78 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:7958 (7.7 KiB) TX bytes:17128 (16.7 KiB)

Interrupt:16

vif0.0 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

inet6 addr: fe80::fcff:ffff:feff:ffff/64 Scope:Link

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:1425 errors:0 dropped:0 overruns:0 frame:0

TX packets:4705 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:214477 (209.4 KiB) TX bytes:980818 (957.8 KiB)

vif0.1 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

inet6 addr: fe80::fcff:ffff:feff:ffff/64 Scope:Link

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:49 errors:0 dropped:0 overruns:0 frame:0

TX packets:25 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:11162 (10.9 KiB) TX bytes:2035 (1.9 KiB)

vif0.2 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

inet6 addr: fe80::fcff:ffff:feff:ffff/64 Scope:Link

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:20 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:6540 (6.3 KiB) TX bytes:0 (0.0 b)

virbr0 Link encap:Ethernet HWaddr 00:00:00:00:00:00

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

inet6 addr: fe80::200:ff:fe00:0/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:52 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:10166 (9.9 KiB)

xenbr0 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:3117 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:776532 (758.3 KiB) TX bytes:0 (0.0 b)

xenbr1 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:26 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:8907 (8.6 KiB) TX bytes:0 (0.0 b)

xenbr2 Link encap:Ethernet HWaddr FE:FF:FF:FF:FF:FF

UP BROADCAST RUNNING NOARP MTU:1500 Metric:1

RX packets:20 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:6260 (6.1 KiB) TX bytes:0 (0.0 b)

cluster.conf

The core of the cluster is the /etc/cluster/cluster.conf XML configuration file.

Here is the one AN!Cluster uses, with in-line comments. Here is a great overview of the cluster.conf file.

<!--

The cluster's name is "an_san" and, as this is the first version of this file,

it is set to version "1". Each time this file changes in any way, the version

number will have to be incremented by 1.

-->

<cluster name="an_san" config_version="1">

<!--

This is a special cman argument to enable cluster services to run

without quorum. Being a two-node cluster, quorum with a failed node is

quite impossible. :)

If we had no need for this, we'd just put in the self-closing argument:

<cman/>

-->

<cman two_node="1" expected_votes="1">

</cman>

<!--

This is where the nodes in this cluster are defined.

-->

<clusternodes>

<!-- SAN Node 1 -->

<clusternode name="an_san01" nodeid="1">

<fence>

<!--

The entries here reference devices defined

below in the <fencedevices/> section. The

options passed control how the device is

called. When multiple devices are listed, they

are tried in the order that they are listed

here.

-->

<!-- MADI: would changing 'power' to 'reboot' make more sense? -->

<method name="power">

<device name="node_assassin"

switch="1" port="01"/>

</method>

</fence>

</clusternode>

<!-- SAN Node 2 -->

<clusternode name="an_san02" nodeid="2">

<fence>

<method name="power">

<device name="node_assassin"

switch="1" port="02"/>

</method>

</fence>

</clusternode>

</clusternodes>

<!--

The fence device is mandatory and it defines how the cluster will

handle nodes that have dropped out of communication. In our case,

we will use the Node Assassin fence device.

-->

<fencedevices>

<!--

This names the device, the agent (script) that controls it,

where to find it and how to access it.

-->

<fencedevice name="node_assassin" agent="fence_na"

ipaddr="ariel.alteeve.com" login="ariel" passwd="mermaid">

</fencedevice>

<!--

If you have two or more fence devices, you can add the extra

one(s) below. The cluster will attempt to fence a bad node

using these devices in the order that they appear.

-->

</fencedevices>

<!-- When the cluster starts, any nodes not yet in the cluster may be

fenced. By default, there is a 6 second buffer, but this isn't very

much time. The following argument increases the time window where other

nodes can join before being fenced. I like to give up to one minute.

-->

<fence_daemon post_join_delay="60">

</fence_daemon>

</cluster>

Once you're comfortable with your changes to the file, you need to validate it. Run:

xmllint --relaxng /usr/share/system-config-cluster/misc/cluster.ng /path/to/cluster.conf

If there are errors, address them. Once you see /path/to/cluster.conf validates, you can copy it to /etc/cluster/cluster.conf.

cp /path/to/cluster.conf /etc/cluster/cluster.conf
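As the comments in the file point out, config_version has to go up by one every time the file changes, and that is easy to forget. Here is a sketch of how the bump could be scripted before validating and copying the file. Both function names are made up for this example, and the pattern assumes the attribute sits on the opening 'cluster' tag as in the listing above.

```shell
# next_config_version FILE
# Prints the current config_version of the <cluster> tag plus one.
next_config_version() {
    ncv_cur=$(sed -n 's/.*<cluster [^>]*config_version="\([0-9][0-9]*\)".*/\1/p' "$1" | head -n 1)
    [ -n "$ncv_cur" ] || return 1
    echo $((ncv_cur + 1))
}

# bump_config_version FILE
# Rewrites FILE in place with config_version incremented by one.
bump_config_version() {
    bcv_new=$(next_config_version "$1") || return 1
    bcv_tmp=$(mktemp) || return 1
    sed "s/\(<cluster [^>]*config_version=\)\"[0-9][0-9]*\"/\1\"$bcv_new\"/" \
        "$1" > "$bcv_tmp" && cat "$bcv_tmp" > "$1"
    bcv_rc=$?
    rm -f "$bcv_tmp"
    return $bcv_rc
}
```

Run 'bump_config_version /path/to/cluster.conf' on your working copy after editing it, then validate it with xmllint as shown above.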

Now to work on OpenAIS.

openais.conf

The ... who am I kidding? I don't understand this yet... this is a place-holder for now.

# Please read the openais.conf.5 manual page

totem {

version: 2

secauth: off

threads: 0

interface {

ringnumber: 0

bindnetaddr: 192.168.2.0

mcastaddr: 226.94.1.1

mcastport: 5405

}

}

logging {

debug: off

timestamp: on

}

amf {

mode: disabled

}

DRBD

DRBD will be used to provide redundancy of our iSCSI-hosted data by providing a real-time, redundant block device. On top of this, a new LVM PV will be created for a virtual machine that will be able to "float" between the two nodes. This way, should one of the nodes fail, the virtual machine would be able to quickly be brought back up on the surviving node with minimal interruption.

Install

If you used the kickstart script, then everything you need should be installed. If not, or if you want to double-check, run:

yum -y install drbd83.x86_64 kmod-drbd83-xen.x86_64

Create the LVM Logical Volume

Most of the remaining space on each node's LVM PV will be allocated to a new LV. This new LV will host that node's side of the DRBD resource. In essence, these LVs will act as normal block devices for DRBD to exist on.

Create the Logical Volume for the DRBD device on each node. Replace san01 with your Volume Group name.

an_san01

lvcreate -L 400G -n lv02 /dev/san01

an_san02

lvcreate -L 400G -n lv02 /dev/san02

Create or Edit /etc/drbd.conf

The main configuration file will need to exist as identical copies on both nodes. This How-To doesn't cover why the configuration is the way it is because the main DRBD website does a much better job.

On both nodes, edit or create /etc/drbd.conf so that it is like this:

global {

usage-count yes;

}

common {

protocol C;

syncer { rate 33M; }

}

resource r0 {

device /dev/drbd0;

meta-disk internal;

net {

allow-two-primaries;

}

startup {

become-primary-on both;

}

# The 'on' name must be the same as the output of 'uname -n'.

on an_san01.alteeve.com {

address 10.0.0.71:7789;

disk /dev/san01/lv02;

}

on an_san02.alteeve.com {

address 10.0.0.72:7789;

disk /dev/san02/lv02;

}

}

The main things to note are:

- The 'on' argument must match the name returned by the 'uname -n' shell call.

- 'Protocol C' tells DRBD to not tell the OS that a write was complete until both nodes have done so. This affects performance but is required for the later step when we will configure cluster-aware LVM.
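A host name mismatch is probably the most common reason for DRBD to refuse a configuration, so it can be worth checking before going further. This is a rough sketch; 'drbd_has_host' is a made-up name and it only looks for a line of the form 'on NAME {'.

```shell
# drbd_has_host FILE [HOSTNAME]
# Succeeds if FILE has an 'on HOSTNAME {' section. HOSTNAME defaults to the
# output of 'uname -n', which is what DRBD itself compares against.
drbd_has_host() {
    dhh_host=${2:-$(uname -n)}
    awk -v h="$dhh_host" '
        $1 == "on" && $2 == h { found = 1 }
        END { exit !found }
    ' "$1"
}
```

On each node, 'drbd_has_host /etc/drbd.conf || echo "no section for $(uname -n)"' should print nothing if the names line up.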

With that file in place on both nodes, run the following command and make sure the output is the contents of the file above in a somewhat altered syntax. If you get an error, address it before proceeding.

drbdadm dump

If it's all good, you should see something like this:

# /etc/drbd.conf

common {

protocol C;

syncer {

rate 33M;

}

}

# resource r0 on an_san02.alteeve.com: not ignored, not stacked

resource r0 {

on an_san01.alteeve.com {

device /dev/drbd0 minor 0;

disk /dev/san01/lv02;

address ipv4 10.0.0.71:7789;

meta-disk internal;

}

on an_san02.alteeve.com {

device /dev/drbd0 minor 0;

disk /dev/san02/lv02;

address ipv4 10.0.0.72:7789;

meta-disk internal;

}

net {

allow-two-primaries;

}

startup {

become-primary-on both;

}

}

Once you see this, you can proceed.

Setup the DRBD Resource r0

For the rest of this section, pay attention to whether you see:

- Primary

- Secondary

- Both

These indicate which node to run the following commands on. There is no functional difference between the nodes, so just choose one to be Primary and the other will be Secondary. Once you've chosen which is which, be consistent with which node you run the commands on. Of course, if a command block is preceded by Both, run it on both nodes.

Both

/etc/init.d/drbd restart

You should see output like this:

--== Thank you for participating in the global usage survey ==--

The server's response is:

you are the 2278th user to install this version

Restarting all DRBD resources: 0: Failure: (119) No valid meta-data signature found.

==> Use 'drbdadm create-md res' to initialize meta-data area. <==

Command '/sbin/drbdsetup 0 disk /dev/san01/lv02 /dev/san02/lv02 internal --set-defaults --create-device' terminated with exit code 10

Don't worry about those errors.

Primary: Initialize the device by running the following commands one at a time:

drbdadm create-md r0

drbdadm attach r0

drbdadm syncer r0

drbdadm connect r0

Secondary: Configure and connect the device on the second node by running the following commands one at a time:

drbdadm create-md r0

drbdadm attach r0

drbdadm connect r0

Primary: Start the sync between the two nodes by calling:

drbdadm -- --overwrite-data-of-peer primary r0

Secondary: At this point, we need to promote the secondary node to 'Primary' position.

drbdadm primary r0

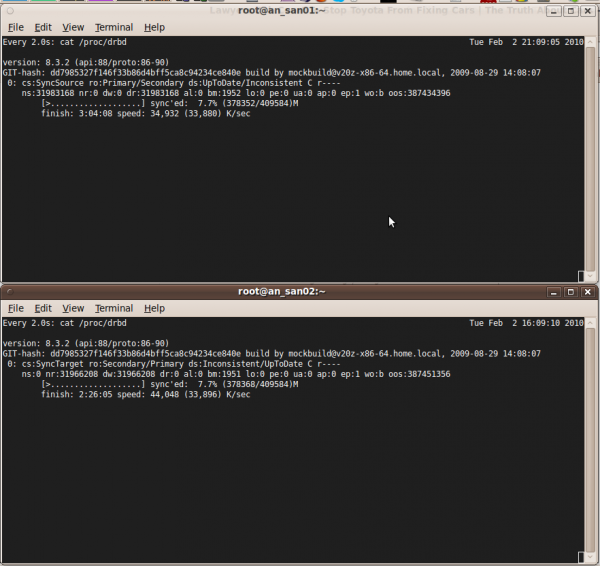

Both: Make sure that both nodes are sync'ing by running:

watch cat /proc/drbd

Both: You should see something like:

Every 2.0s: cat /proc/drbd Tue Feb 2 23:53:29 2010

version: 8.3.2 (api:88/proto:86-90)

GIT-hash: dd7985327f146f33b86d4bff5ca8c94234ce840e build by mockbuild@v20z-x86-64.home.local, 2009-08-29 14:08:07

0: cs:SyncSource ro:Primary/Primary ds:UpToDate/Inconsistent C r----

ns:366271552 nr:0 dw:0 dr:366271552 al:0 bm:22355 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:53146012

[================>...] sync'ed: 87.4% (51900/409584)M

finish: 0:22:27 speed: 39,364 (33,888) K/sec

You do not need to wait for the sync to complete before proceeding.
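If you want to check the state from a script rather than by watching the screen, the three fields that matter (cs, ro and ds) can be pulled out of /proc/drbd. This is a minimal sketch that assumes the 8.3-style line format shown above; 'drbd_state' is a hypothetical name.

```shell
# drbd_state [FILE]
# Prints "minor connection-state roles disk-states" for each DRBD device
# found in FILE (default /proc/drbd).
drbd_state() {
    awk '
        /^[ \t]*[0-9]+: cs:/ {
            minor = $1
            sub(/:$/, "", minor)
            cs = ro = ds = "?"
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^cs:/) cs = substr($i, 4)
                if ($i ~ /^ro:/) ro = substr($i, 4)
                if ($i ~ /^ds:/) ds = substr($i, 4)
            }
            print minor, cs, ro, ds
        }
    ' "${1:-/proc/drbd}"
}
```

While the sync is running, this should report Primary/Primary roles with one side still Inconsistent.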

LVM

LVM plays a role at three levels of the AN!Cluster, and now is a good time to discuss why and how.

- First; Hosts the dom0's OS.

- Why? By using LVM over the bare block devices (or raid devices), we have an underlying disk system that can be expanded easily. Because the DRBD sits on top of this base level, we can grow the DRBD partitions later. This level was implemented by the kickstart script found at the beginning of this How-To.

- Second; The base DRBD partition becomes a PV for the iSCSI VM to use. This way, once the DRBD is grown, or if a second DRBD device is added, it can be added to the LVM's VG.

- Third; Inside the Virtual Machines. This allows the virtual machines to grow in disk space as well.

By having all three levels using LVM, the cluster and all resources can be grown "on the fly" without ever taking the cluster offline.

Creating an LVM on the DRBD Partition

First, install the LVM programs if you haven't already. It's quite likely these packages are already installed because of the LVM partition under DRBD. However, let's double check:

yum install -y lvm2 lvm2-cluster

From here on, the same Primary, Secondary and Both prefixes introduced above will be shown before shell commands, along with a new one, Either, which means the command can be run on whichever node you like. Please be sure to continue using the same convention you selected earlier. That is, pick one node to be "Primary" and stick to it.

If you check now using pvdisplay, you should see only one LVM PV:

Either:

pvdisplay

--- Physical volume ---

PV Name /dev/sda2

VG Name san01

PV Size 465.51 GB / not usable 14.52 MB

Allocatable yes

PE Size (KByte) 32768

Total PE 14896

Free PE 1407

Allocated PE 13489

PV UUID PfKywt-t7or-ZfqD-f104-dDHR-8ILi-6ZswMq

Let's now create an LVM PV using /dev/drbd0.

Primary:

pvcreate /dev/drbd0

Physical volume "/dev/drbd0" successfully created

If we check pvdisplay again, we should now see both PVs.

Both:

pvdisplay

--- Physical volume ---

PV Name /dev/sda2

VG Name san01

PV Size 465.51 GB / not usable 14.52 MB

Allocatable yes

PE Size (KByte) 32768

Total PE 14896

Free PE 1407

Allocated PE 13489

PV UUID PfKywt-t7or-ZfqD-f104-dDHR-8ILi-6ZswMq

"/dev/san01/lv02" is a new physical volume of "399.99 GB"

--- NEW Physical volume ---

PV Name /dev/san01/lv02

VG Name

PV Size 399.99 GB

Allocatable NO

PE Size (KByte) 0

Total PE 0

Free PE 0

Allocated PE 0

PV UUID alpyBY-ukAR-9KEz-CQt9-u86N-8fg8-W5DPvV

Run pvdisplay from both nodes after creating the new PV on /dev/drbd0. Even though you only created the PV on one node, you should see it on both. That's the first sign that your DRBD is working well!

Making the DRBD LVM Cluster Aware

Because this LVM exists on a Primary/Primary DRBD partition where both nodes can write at the same time, the LVM layer MUST be configured to be cluster-aware or else you WILL corrupt your data eventually.

To switch LVM to cluster aware mode, edit /etc/lvm/lvm.conf on both nodes:

Both:

vim /etc/lvm/lvm.conf

Search for filter and locate the lines:

# By default we accept every block device:

filter = [ "a/.*/" ]

Copy the filter = [ "a/.*/" ] line, comment out the first one and change the second one to [ "a|drbd.*|", "r|.*|" ]. The entry should now read:

# By default we accept every block device:

#filter = [ "a/.*/" ]

filter = [ "a|drbd.*|", "r|.*|" ]
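To see what this filter does, remember that LVM tests each device path against the patterns in order and the first one that matches decides. The patterns are unanchored regular expressions, so 'a|drbd.*|' accepts any path containing "drbd" and 'r|.*|' rejects whatever is left. The sketch below only imitates that behaviour with grep for this one filter; it is not how LVM is implemented, and 'lvm_filter_demo' is a made-up name.

```shell
# lvm_filter_demo
# Reads device paths on stdin, one per line, and prints whether the filter
# [ "a|drbd.*|", "r|.*|" ] would accept or reject each of them.
lvm_filter_demo() {
    while IFS= read -r lfd_dev; do
        if printf '%s\n' "$lfd_dev" | grep -q 'drbd.*'; then
            echo "accept $lfd_dev"      # first pattern matched
        else
            echo "reject $lfd_dev"      # fell through to the catch-all reject
        fi
    done
}
```

Feeding it /dev/drbd0, /dev/sda2 and /dev/san01/lv02 accepts only the first. That is the point of the filter: LVM should see the PV through DRBD and not through the backing LV. It also means /dev/sda2, which backs the node's own VG, no longer passes, so adapt the accept patterns if LVM on the node still needs to see it.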

In a similar manner, change the locking_type value from 1 to 3. The edited section should look like:

# Type of locking to use. Defaults to local file-based locking (1).

# Turn locking off by setting to 0 (dangerous: risks metadata corruption

# if LVM2 commands get run concurrently).

# Type 2 uses the external shared library locking_library.

# Type 3 uses built-in clustered locking.

#locking_type = 1

locking_type = 3

Now, tell LVM to become cluster aware. On Both nodes, run:

lvmconf --enable-cluster

There will be no output if it worked; it will just return to the shell.
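Since there is no output, you may want to confirm that the setting is really active on both nodes. Here is a small sketch that prints the locking_type currently in effect, ignoring commented-out lines. The function name 'lvm_locking_type' is hypothetical.

```shell
# lvm_locking_type [FILE]
# Prints the value of the last uncommented locking_type line in FILE
# (default /etc/lvm/lvm.conf).
lvm_locking_type() {
    sed -n 's/^[[:space:]]*locking_type[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' \
        "${1:-/etc/lvm/lvm.conf}" | tail -n 1
}
```

Both nodes should report 3 before you go any further.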

That's it for now.


© 2025 Alteeve. Intelligent Availability® is a registered trademark of Alteeve's Niche! Inc. 1997-2025

legal stuff: All info is provided "As-Is". Do not use anything here unless you are willing and able to take responsibility for your own actions.