AN!Cluster Tutorial 2: Difference between revisions

| (483 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

{{howto_header}} | {{howto_header}} | ||

[[image:RN3-m2_01.jpg|thumb|right|400px|A typical ''Anvil!'' build-out]] | |||

This paper has one goal: | |||

* Create an easy to use, fully redundant platform for virtual servers. | |||

Oh, and do have fun! | |||

= What's New? = | = What's New? = | ||

| Line 16: | Line 14: | ||

* Many refinements to the cluster stack that protect against corner cases seen over the last two years. | * Many refinements to the cluster stack that protect against corner cases seen over the last two years. | ||

* Configuration naming convention changes to support the new [[ | * Configuration naming convention changes to support the new [[Striker]] dashboard. | ||

* Addition of the [[AN!CM]] monitoring and alert system. | * Addition of the [[AN!CM]] monitoring and alert system. | ||

* Security improved; [[selinux]] and [[iptables]] now enabled and used. | |||

{{note|1=Changes made on Apr. 3, 2015}} | |||

* New network interface, bond and bridge naming convention used. | |||

* New Anvil and node naming convention. | |||

** ie: <span class="code">an-anvil-05</span> -> <span class="code">an-anvil-05</span>, <span class="code">cn-a05n01</span> -> <span class="code">an-a05n01</span>. | |||

* References to 'AN!CM' now point to 'Striker'. | |||

* Foundation pack host names have been expanded to be more verbose. | |||

** ie: <span class="code">an-s01</span> -> <span class="code">an-switch01</span>, <span class="code">an-m01</span> -> <span class="code">an-striker01</span>. | |||

== A Note on Terminology == | == A Note on Terminology == | ||

In this tutorial, we will use the following terms | In this tutorial, we will use the following terms: | ||

* ''Anvil!'': This is our name for the HA platform as a whole. | * ''Anvil!'': This is our name for the HA platform as a whole. | ||

| Line 28: | Line 36: | ||

* ''Compute Pack'': This describes a pair of nodes that work together to power highly-available servers. | * ''Compute Pack'': This describes a pair of nodes that work together to power highly-available servers. | ||

* ''Foundation Pack'': This describes the switches, [[PDU]]s and [[UPS]]es used to power and connect the nodes. | * ''Foundation Pack'': This describes the switches, [[PDU]]s and [[UPS]]es used to power and connect the nodes. | ||

* '' | * ''Striker Dashboard'': This describes the equipment used for the [[Striker]] management dashboard. | ||

== Why Should I Follow This (Lengthy) Tutorial? == | == Why Should I Follow This (Lengthy) Tutorial? == | ||

Following this tutorial is not the lightest undertaking. It is designed to teach you all the inner details of building an HA platform for | Following this tutorial is not the lightest undertaking. It is designed to teach you all the inner details of building an HA platform for virtual servers. When finished, you will have a detailed and deep understanding of what it takes to build a fully redundant, mostly fault-tolerant high-availability platform. Though lengthy, it is very worthwhile if you want to understand high-availability. | ||

In either case, when finished, you will have the following benefits | In either case, when finished, you will have the following benefits: | ||

* Totally open source. Everything. This guide and all software used is open! | * Totally open source. Everything. This guide and all software used is open! | ||

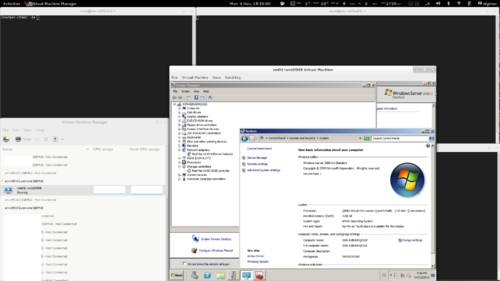

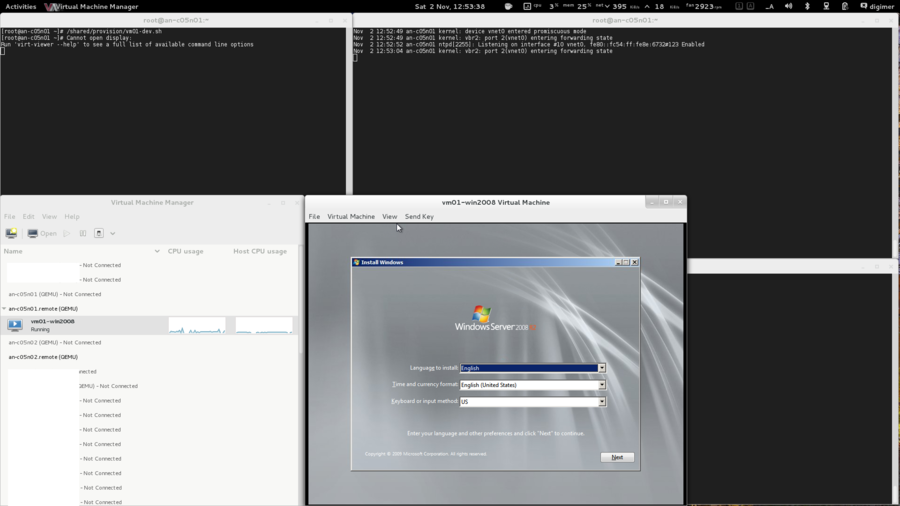

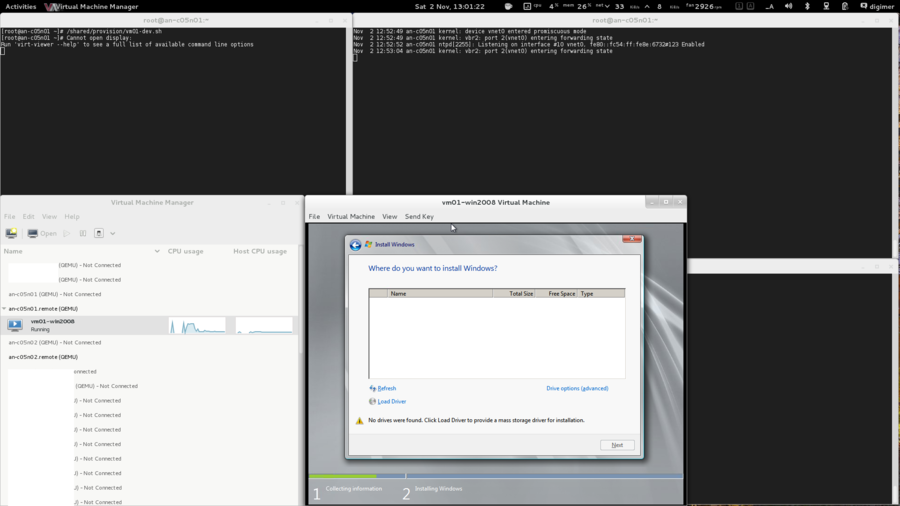

* You can host | * You can host servers running almost any operating system. | ||

* The HA platform requires no access to the servers and no special software needs to be installed. Your users may well never know that they're on a virtual machine. | * The HA platform requires no access to the servers and no special software needs to be installed. Your users may well never know that they're on a virtual machine. | ||

* Your servers will | * Your servers will operate just like servers installed on bare-iron machines. No special configuration is required. The high-availability components will be hidden behind the scenes. | ||

* The worst failures of core components, such as a mainboard failure in a node, will cause an outage of roughly 30 to 90 seconds. | |||

* Storage is synchronously replicated, guaranteeing that the total destruction of a node will cause no more data loss than a traditional server losing power. | * Storage is synchronously replicated, guaranteeing that the total destruction of a node will cause no more data loss than a traditional server losing power. | ||

* Storage is replicated without the need for a [[SAN]], reducing cost and providing | * Storage is replicated without the need for a [[SAN]], reducing cost and providing total storage redundancy. | ||

* Live-migration of | * Live-migration of servers enables upgrading and node maintenance without downtime. No more weekend maintenance! | ||

* | * AN!CM; The "AN! Cluster Monitor" watches the HA stack continually. It sends alerts for many events, from predictive hardware failure to simple live migration, all from a single application. | ||

* Most failures are fault-tolerant and will cause no interruption in services at all. | |||

Ask your local | Ask your local VMware or Microsoft Hyper-V sales person what they'd charge for all this. :) | ||

== High-Level Explanation of How HA Clustering Works == | == High-Level Explanation of How HA Clustering Works == | ||

{{note|1=This section is an adaptation of [http://lists.linux-ha.org/pipermail/linux-ha/2013-October/047633.html this post] to the [http://lists.linux-ha.org/mailman/listinfo/linux-ha Linux-HA] mailing list.}} | {{note|1=This section is an adaptation of [http://lists.linux-ha.org/pipermail/linux-ha/2013-October/047633.html this post] to the [http://lists.linux-ha.org/mailman/listinfo/linux-ha Linux-HA] mailing list. If you find this section hard to follow, please don't worry. Each component is explained in the "Concepts" section below.}} | ||

Before digging into the details, it might help to start with a high-level explanation of how HA clustering works. | Before digging into the details, it might help to start with a high-level explanation of how HA clustering works. | ||

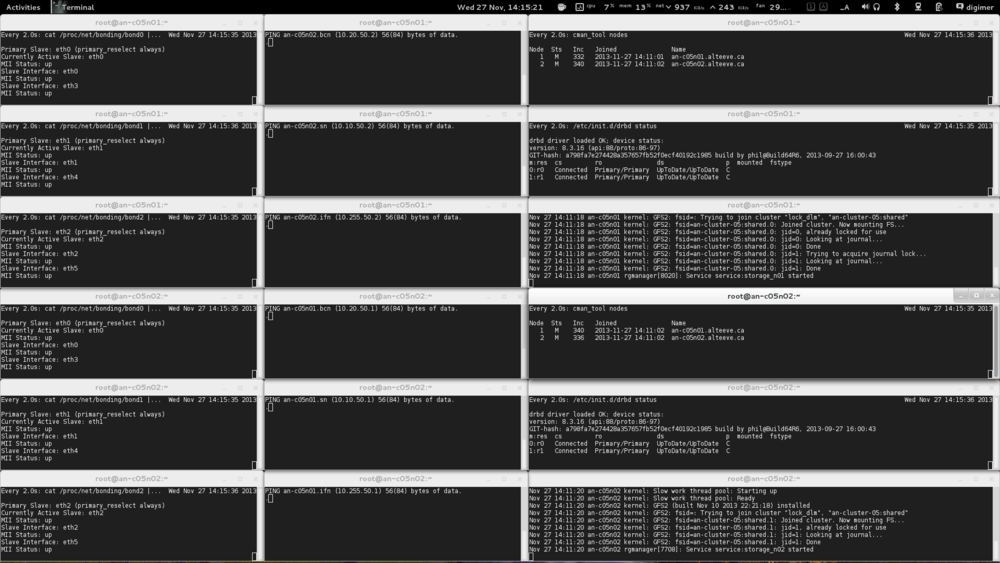

[[ | [[Corosync]] uses the [[totem]] protocol for "heartbeat"-like monitoring of the other node's health. A token is passed around to each node, the node does some work (like acknowledge old messages, send new ones), and then it passes the token on to the next node. This goes around and around all the time. Should a node not pass its token on after a short time-out period, the token is declared lost, an error count goes up and a new token is sent. If too many tokens are lost in a row, the node is declared lost. | ||

Once the node is declared lost, the remaining nodes reform a new cluster. If enough nodes are left to form [[ | Once the node is declared lost, the remaining nodes reform a new cluster. If enough nodes are left to form [[quorum]] (simple majority), then the new cluster will continue to provide services. In two-node clusters, like the ones we're building here, quorum is disabled so each node can work on its own. | ||
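The quorum rule described above can be sketched in a few lines of Python. This is only an illustration of the "simple majority" idea and of the two-node exception; it is not how corosync or <span class="code">cman</span> implement it. The function name <span class="code">has_quorum</span> and its <span class="code">two_node</span> parameter are hypothetical names chosen for this example.

```python
def has_quorum(total_votes: int, live_votes: int, two_node: bool = False) -> bool:
    """Return True if the surviving members may keep providing services.

    Illustrative sketch only. 'two_node' mimics the special case where
    quorum is disabled so a single surviving node can continue on its own.
    """
    if two_node:
        # Quorum is disabled in two-node clusters. Any live node may
        # proceed; fencing, not quorum, is what keeps this safe.
        return live_votes >= 1
    # Simple majority: strictly more than half of all votes.
    return live_votes > total_votes // 2


if __name__ == "__main__":
    print(has_quorum(5, 3))                 # True: 3 of 5 is a majority
    print(has_quorum(4, 2))                 # False: 2 of 4 is only a tie
    print(has_quorum(2, 1, two_node=True))  # True: quorum is disabled
```

Note that a tie (two of four votes) is not a majority, which is why an evenly split cluster with quorum enabled stops providing services on both sides.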

Corosync itself only cares about who is a cluster member and making sure all members get all [[CPG|messages]]. What happens after the cluster reforms is up to the cluster manager, <span class="code">cman</span>, and the resource group manager, [[rgmanager]]. | |||

The first thing <span class="code">cman</span> does after being notified that a node was lost is initiate a [[ | The first thing <span class="code">cman</span> does after being notified that a node was lost is initiate a [[fence]] against the lost node. This is a process where the lost node is powered off by the healthy node (power fencing), or cut off from the network/storage (fabric fencing). In either case, the idea is to make sure that the lost node is in a known state. If this is skipped, the node could recover later and try to provide cluster services, not having realized that it was removed from the cluster. This could cause problems from confusing switches to corrupting data. | ||

When rgmanager is told that membership has changed because a node died, it looks to see what services might have been lost. Once it knows what was lost, it looks at the rules it's been given and decides what to do. These rules are defined in the <span class="code">[[ | When rgmanager is told that membership has changed because a node died, it looks to see what services might have been lost. Once it knows what was lost, it looks at the rules it's been given and decides what to do. These rules are defined in the <span class="code">[[RHCS v3 cluster.conf|cluster.conf]]'s</span> <span class="code"><rm></span> element. We'll go into detail on this later. | ||

In two-node clusters, there is also a chance of a "split-brain". | In two-node clusters, there is also a chance of a "split-brain". Quorum has to be disabled, so it is possible for both nodes to think the other node is dead and both try to provide the same cluster services. By using fencing, after the nodes break from one another (which could happen with a network failure, for example), neither node will offer services until one of them has fenced the other. The faster node will win and the slower node will shut down (or be isolated). The survivor can then run services safely without risking a split-brain. | ||

Once the dead/slower node has been fenced, <span class="code">rgmanager</span> then decides what to do with the services that had been running on the lost node. Generally, this means | Once the dead/slower node has been fenced, <span class="code">rgmanager</span> then decides what to do with the services that had been running on the lost node. Generally, this means restarting the services locally that had been running on the dead node. The details of this are decided by you when you configure the resources in <span class="code">rgmanager</span>. As we will see with each node's local storage service, the service is not recovered but instead left stopped. | ||

= The Task Ahead = | |||

Before we start, let's take a few minutes to discuss clustering and its complexities. | Before we start, let's take a few minutes to discuss clustering and its complexities. | ||

| Line 83: | Line 90: | ||

Coming back to earth: | Coming back to earth: | ||

Many technologies can be learned by creating a very simple base and then building on it. The classic "Hello, World!" script created when first learning a programming language is an example of this. Unfortunately, there is no real analogue to this in clustering. Even the most basic cluster requires several pieces be in place and working together. If you try to rush by ignoring pieces you think are not important, you will almost certainly waste time. A good example is setting aside [[fencing]], thinking that your test cluster's data isn't important. The cluster software has no concept of "test". It treats everything as critical all the time and ''will'' shut down if anything goes wrong. | Many technologies can be learned by creating a very simple base and then building on it. The classic "Hello, World!" script created when first learning a programming language is an example of this. Unfortunately, there is no real analogue to this in clustering. Even the most basic cluster requires several pieces be in place and working well together. If you try to rush, by ignoring pieces you think are not important, you will almost certainly waste time. A good example is setting aside [[fencing]], thinking that your test cluster's data isn't important. The cluster software has no concept of "test". It treats everything as critical all the time and ''will'' shut down if anything goes wrong. | ||

Take your time, work through these steps, and you will have the foundation cluster sooner than you realize. Clustering is fun '''because''' it is a challenge. | Take your time, work through these steps, and you will have the foundation cluster sooner than you realize. Clustering is fun '''because''' it is a challenge. | ||

| Line 89: | Line 96: | ||

== Technologies We Will Use == | == Technologies We Will Use == | ||

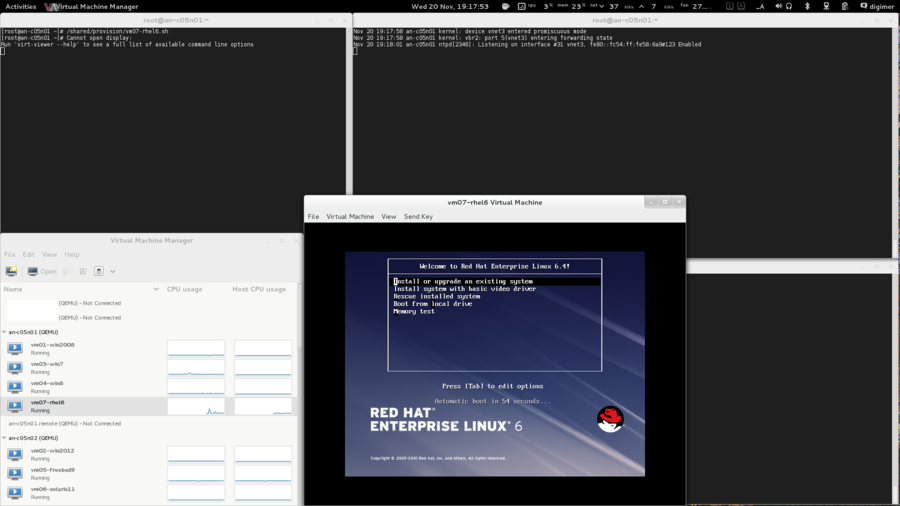

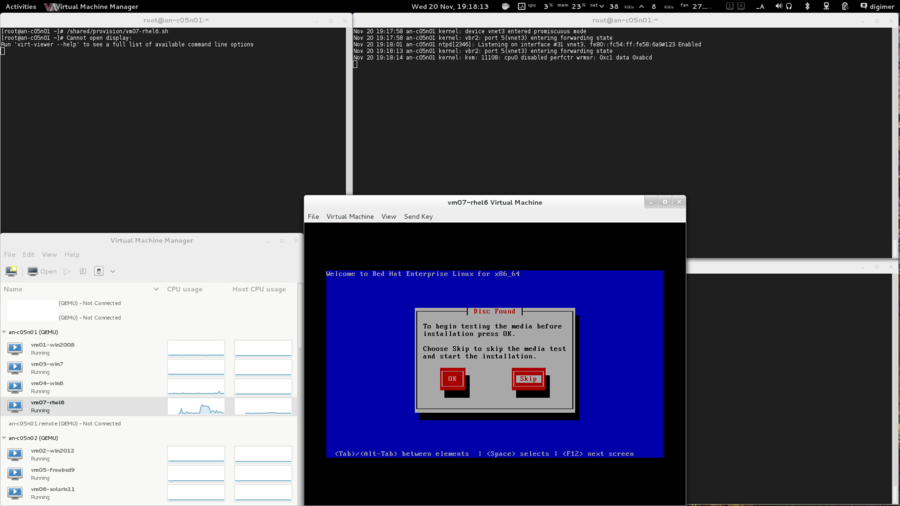

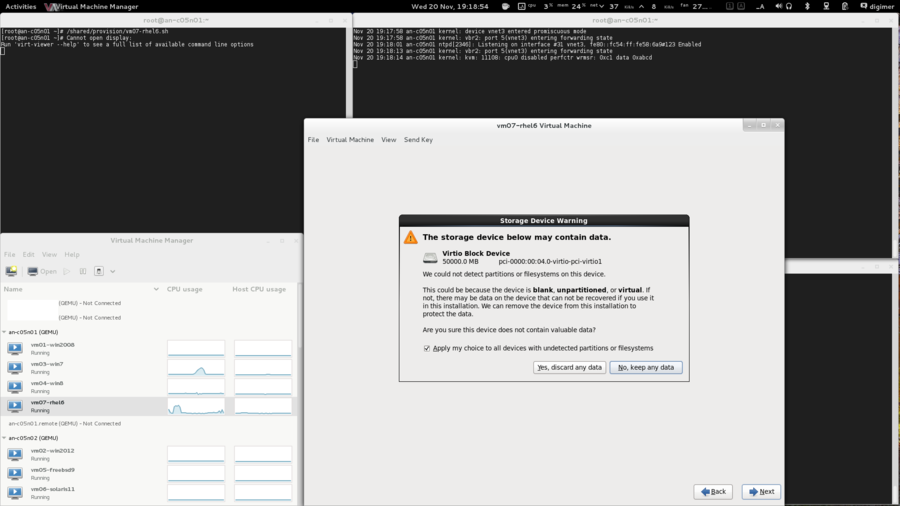

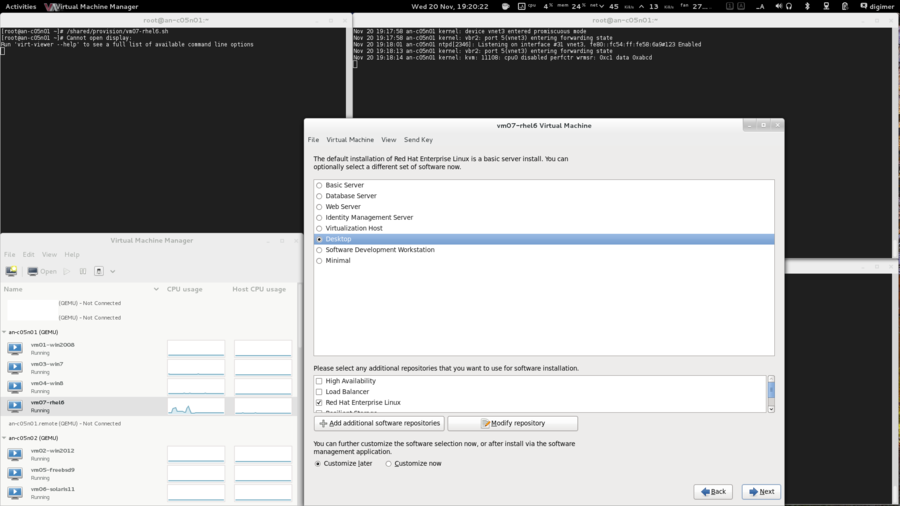

* ''Red Hat Enterprise Linux 6'' ([[EL6]]); You can use a derivative like [[CentOS]] v6. Specifically, we're using 6. | * ''Red Hat Enterprise Linux 6'' ([[EL6]]); You can use a derivative like [[CentOS]] v6. Specifically, we're using 6.5. | ||

* ''Red Hat Cluster Services'' "Stable" version 3. This describes the following core components: | * ''Red Hat Cluster Services'' "Stable" version 3. This describes the following core components: | ||

** ''Corosync''; Provides cluster communications using the [[totem]] protocol. | ** ''Corosync''; Provides cluster communications using the [[totem]] protocol. | ||

| Line 95: | Line 102: | ||

** ''Resource Manager'' (<span class="code">[[rgmanager]]</span>); Manages cluster resources and services. Handles service recovery during failures. | ** ''Resource Manager'' (<span class="code">[[rgmanager]]</span>); Manages cluster resources and services. Handles service recovery during failures. | ||

** ''Clustered Logical Volume Manager'' (<span class="code">[[clvm]]</span>); Cluster-aware (disk) volume manager. Backs [[GFS2]] [[filesystem]]s and [[KVM]] virtual machines. | ** ''Clustered Logical Volume Manager'' (<span class="code">[[clvm]]</span>); Cluster-aware (disk) volume manager. Backs [[GFS2]] [[filesystem]]s and [[KVM]] virtual machines. | ||

** ''Global File | ** ''Global File System'' version 2 (<span class="code">[[gfs2]]</span>); Cluster-aware, concurrently mountable file system. | ||

* ''Distributed Redundant Block Device'' ([[DRBD]]); Keeps shared data synchronized across cluster nodes. | * ''Distributed Redundant Block Device'' ([[DRBD]]); Keeps shared data synchronized across cluster nodes. | ||

* ''KVM''; [[Hypervisor]] that controls and supports virtual machines. | * ''KVM''; [[Hypervisor]] that controls and supports virtual machines. | ||

* Alteeve's Niche! Cluster | * Alteeve's Niche! Cluster Dashboard and Cluster Monitor | ||

== A Note on Hardware == | == A Note on Hardware == | ||

| Line 104: | Line 111: | ||

[[Image:RX300-S7_close-up_01.jpg|thumb|right|500px|RX300 S7]] | [[Image:RX300-S7_close-up_01.jpg|thumb|right|500px|RX300 S7]] | ||

Another new change is that Alteeve's Niche!, after years of experimenting with various hardware | Another new change is that Alteeve's Niche!, after years of experimenting with various hardware, has partnered with [[Fujitsu]]. We chose them because of the unparalleled quality of their equipment. | ||

This tutorial can be used on any manufacturer's hardware, provided it meets the minimum requirements listed below. That said, we strongly recommend readers give Fujitsu's [http://www.fujitsu.com/fts/products/computing/servers/primergy/rack/ RX-line] of servers a close look. We do not get a discount for this recommendation, we genuinely love the quality of their gear. The only technical argument for using Fujitsu hardware is that we do all our cluster stack monitoring software development on Fujitsu RX200 and RX300 servers | This tutorial can be used on any manufacturer's hardware, provided it meets the minimum requirements listed below. That said, we strongly recommend readers give Fujitsu's [http://www.fujitsu.com/fts/products/computing/servers/primergy/rack/ RX-line] of servers a close look. We do not get a discount for this recommendation, we genuinely love the quality of their gear. The only technical argument for using Fujitsu hardware is that we do all our cluster stack monitoring software development on Fujitsu RX200 and RX300 servers, so we can say with confidence that the AN! software components will work well on their kit. | ||

If you use any other hardware vendor and run into any trouble, please don't hesitate to [[Support|contact us]]. We want to make sure that our HA stack works on as many systems as possible and will be happy to help out. Of course, all Alteeve code is open source, so [https://github.com/digimer/an-cdb contributions] are always welcome, too! | If you use any other hardware vendor and run into any trouble, please don't hesitate to [[Support|contact us]]. We want to make sure that our HA stack works on as many systems as possible and will be happy to help out. Of course, all Alteeve code is open source, so [https://github.com/digimer/an-cdb contributions] are always welcome, too! | ||

| Line 114: | Line 121: | ||

The goal of this tutorial is to help you build an HA platform with zero single points of failure. In order to do this, certain minimum technical requirements must be met. | The goal of this tutorial is to help you build an HA platform with zero single points of failure. In order to do this, certain minimum technical requirements must be met. | ||

Bare minimum requirements | Bare minimum requirements: | ||

* Two servers with the following; | * Two servers with the following; | ||

** | ** A CPU with [https://en.wikipedia.org/wiki/Hardware-assisted_virtualization hardware-accelerated virtualization] | ||

** Redundant power supplies | ** Redundant power supplies | ||

** [[IPMI]] or vendor-specific [https://en.wikipedia.org/wiki/Integrated_Remote_Management_Controller out-of-band management], like Fujitsu's iRMC, HP's iLO, Dell's iDRAC, etc | ** [[IPMI]] or vendor-specific [https://en.wikipedia.org/wiki/Integrated_Remote_Management_Controller out-of-band management], like Fujitsu's iRMC, HP's iLO, Dell's iDRAC, etc | ||

** Six network interfaces, 1 Gbit or faster (yes, six!) | ** Six network interfaces, 1 [[Gbit]] or faster (yes, six!) | ||

** 2 [[GiB]] of RAM and 44.5 GiB of storage for the host operating system, plus sufficient RAM and storage for your VMs | ** 2 [[GiB]] of RAM and 44.5 GiB of storage for the host operating system, plus sufficient RAM and storage for your VMs | ||

* Two switched [[PDU]]s; APC-brand recommended but any with a supported [[fence agent]] is fine | * Two switched [[PDU]]s; APC-brand recommended but any with a supported [[fence agent]] is fine | ||

* Two network switches | * Two network switches | ||

== Recommended Hardware; A Little More Detail == | == Recommended Hardware; A Little More Detail == | ||

The previous section | The previous section covered the bare-minimum system requirements for following this tutorial. If you are looking to build an ''Anvil!'' for production, we need to discuss important considerations for selecting hardware. | ||

=== The Most Important Consideration - Storage === | === The Most Important Consideration - Storage === | ||

| Line 133: | Line 140: | ||

There is probably no single consideration more important than choosing the storage you will use. | There is probably no single consideration more important than choosing the storage you will use. | ||

In our years of building Anvil! HA platforms, we've found no single issue more important than storage latency. This is true for all virtualized environments, in fact. | In our years of building ''Anvil!'' HA platforms, we've found no single issue more important than storage latency. This is true for all virtualized environments, in fact. | ||

The problem is this: | The problem is this: | ||

Multiple servers on shared storage can cause particularly random storage access. Traditional hard drives have disks with mechanical read/write heads on the ends of arms that sweep back and forth across the disk surfaces. These platters are broken up into "tracks" and each track is itself cut up into "sectors". | Multiple servers on shared storage can cause particularly random storage access. Traditional hard drives have disks with mechanical read/write heads on the ends of arms that sweep back and forth across the disk surfaces. These platters are broken up into "tracks" and each track is itself cut up into "sectors". When a server needs to read or write data, the hard drive needs to sweep the arm over the track it wants and then wait there for the sector it wants to pass underneath. | ||

This time taken to get the read/write head onto the track and then wait for the sector to pass underneath is called "seek latency". How long this latency actually | This time taken to get the read/write head onto the track and then wait for the sector to pass underneath is called "seek latency". How long this latency actually is depends on a few things: | ||

* How fast are the platters rotating? The faster the platter speed, the less time it takes for a sector to pass under the read/write head. | * How fast are the platters rotating? The faster the platter speed, the less time it takes for a sector to pass under the read/write head. | ||

* How fast the read/write arms can move and how far they have to travel between tracks | * How fast can the read/write arms move and how far do they have to travel between tracks? Highly random read/write requests can cause a lot of head travel and increase seek time. | ||

* How many read/write requests | * How many read/write requests ([[IOPS]]) can your storage handle? If your storage can not process the incoming read/write requests fast enough, your storage can slow down or stall entirely. | ||
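To put rough numbers on the first and third points above, here is a minimal Python sketch. It assumes the common rule of thumb that, on average, the drive waits half a revolution for the wanted sector to come around, and that a platter drive handles one random request at a time. The function names and the example head-travel time are assumptions made for illustration, not measured figures for any particular drive.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for a sector to pass under the head, in milliseconds.

    Assumes the wanted sector is, on average, half a revolution away.
    """
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2.0


def approx_random_iops(rpm: float, avg_head_travel_ms: float) -> float:
    """Very rough random IOPS estimate for a single platter drive.

    'avg_head_travel_ms' is the average time to move the arm onto the
    track. It is an input you must supply (for example, from the drive's
    data sheet); this sketch does not know it.
    """
    total_latency_ms = avg_head_travel_ms + avg_rotational_latency_ms(rpm)
    return 1000.0 / total_latency_ms


if __name__ == "__main__":
    print(avg_rotational_latency_ms(15000))  # 2.0 ms at 15,000rpm
    print(avg_rotational_latency_ms(7200))   # a little over 4 ms at 7,200rpm
    # Hypothetical 3 ms head travel on a 15,000rpm drive:
    print(approx_random_iops(15000, 3.0))    # 200.0 requests per second
```

Even with generous assumptions, a single platter drive services only a few hundred random requests per second, which is why several servers sharing one drive can back up so quickly.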

When many people think about hard drives, they generally worry about maximum write speeds. For environments with many virtual servers, this is actually far less important than it might seem. Reducing latency to ensure that read/write requests don't back up is far more important. If too many requests back | When many people think about hard drives, they generally worry about maximum write speeds. For environments with many virtual servers, this is actually far less important than it might seem. Reducing latency to ensure that read/write requests don't back up is far more important. This is measured as the storage's [[IOPS]] performance. If too many requests back up in the cache, storage performance can collapse or stall out entirely. | ||

This is particularly problematic when multiple servers try to boot at the same time. If, for example, a node with multiple servers dies, the surviving node will try to start the lost servers at nearly the same time. This causes a sudden dramatic rise in read requests and can cause all servers to hang entirely, a condition called a "boot storm". | This is particularly problematic when multiple servers try to boot at the same time. If, for example, a node with multiple servers dies, the surviving node will try to start the lost servers at nearly the same time. This causes a sudden dramatic rise in read requests and can cause all servers to hang entirely, a condition called a "boot storm". | ||

| Line 151: | Line 158: | ||

Thankfully, this latency problem can be easily dealt with in one of three ways; | Thankfully, this latency problem can be easily dealt with in one of three ways; | ||

# Use solid-state drives. These have no moving parts, so there is | # Use solid-state drives. These have no moving parts, so there is less penalty for highly random read/write requests. | ||

# Use fast platter drives and proper [[RAID]] controllers with [[write-back]] caching. | # Use fast platter drives and proper [[RAID]] controllers with [[write-back]] caching. | ||

# Isolate each server onto dedicated platter drives. | # Isolate each server onto dedicated platter drives. | ||

| Line 162: | Line 169: | ||

!Con | !Con | ||

|- | |- | ||

|Fast drives + Write-back caching | |Fast drives + [[Write-back caching]] | ||

|15,000rpm SAS drives are extremely reliable and the high rotation speeds minimize latency caused by waiting for sectors to pass under the read/write heads. Using multiple drives in [[RAID]] [[TLUG_Talk:_Storage_Technologies_and_Theory#Level_5|level 5]] or [[TLUG_Talk:_Storage_Technologies_and_Theory#Level_6|level 6]] breaks up reads and writes into smaller pieces, allowing requests to be serviced quickly and | |15,000rpm SAS drives are extremely reliable and the high rotation speeds minimize latency caused by waiting for sectors to pass under the read/write heads. Using multiple drives in [[RAID]] [[TLUG_Talk:_Storage_Technologies_and_Theory#Level_5|level 5]] or [[TLUG_Talk:_Storage_Technologies_and_Theory#Level_6|level 6]] breaks up reads and writes into smaller pieces, allowing requests to be serviced quickly and helping to keep the read/write buffer empty. Write-back caching allows RAM-like write speeds and makes it possible to re-order disk access to minimize head movement. | ||

|The main con is the number of disks needed to get effective performance gains from [[TLUG_Talk:_Storage_Technologies_and_Theory#RAID|striping]]. | |The main con is the number of disks needed to get effective performance gains from [[TLUG_Talk:_Storage_Technologies_and_Theory#RAID|striping]]. Alteeve always uses a minimum of six disks, but many entry-level servers support a maximum of 4 drives. You need to account for the number of disks you plan to use when selecting your hardware. | ||

|- | |- | ||

|SSDs | |SSDs | ||

| | |They have no moving parts, so read and write requests do not have to wait for mechanical movements to happen, drastically reducing latency. The minimum number of drives for an SSD-based configuration is two. | ||

|Solid state drives use [[NAND]] flash, which can only be written to a finite number of times. All drives in our Anvil! will be written to roughly the same amount, so hitting this write-limit could mean that all drives in both nodes would fail at nearly the same time. Avoiding this requires careful monitoring of the drives and replacing them before their write limits are hit. | |Solid state drives use [[NAND]] flash, which can only be written to a finite number of times. All drives in our ''Anvil!'' will be written to roughly the same amount, so hitting this write-limit could mean that all drives in both nodes would fail at nearly the same time. Avoiding this requires careful monitoring of the drives and replacing them before their write limits are hit. | ||

{{note|1=Enterprise grade SSDs are designed to handle highly random, multi-threaded workloads and come at a significant cost. Consumer-grade SSDs are designed principally for single threaded, large accesses and do not offer the same benefits.}} | |||

|- | |- | ||

|Isolated Storage | |Isolated Storage | ||

|Dedicating hard drives to virtual servers avoids the highly random read/write issues found when multiple servers share the same storage. This allows for the safe use of inexpensive hard drives. This also means that dedicated hardware RAID controllers with battery-backed cache are not needed. This makes it possible to save a good amount of money in the hardware design. | |Dedicating hard drives to virtual servers avoids the highly random read/write issues found when multiple servers share the same storage. This allows for the safe use of inexpensive hard drives. This also means that dedicated hardware RAID controllers with battery-backed cache are not needed. This makes it possible to save a good amount of money in the hardware design. | ||

|The obvious down-side to isolated storage is that you significantly limit the number of servers you can host on your Anvil!. If you only need to support one or two servers | |The obvious down-side to isolated storage is that you significantly limit the number of servers you can host on your ''Anvil!''. If you only need to support one or two servers, this should not be an issue. | ||

|} | |} | ||

| Line 180: | Line 188: | ||

* SAS drives are generally aimed at the enterprise environment and are built to much higher quality standards. SAS HDDs have rotational speeds of up to 15,000rpm and can handle far more read/write operations per second. Enterprise SSDs using the SAS interface are also much more reliable than their commercial counterpart. The main downside to SAS drives is their cost. | * SAS drives are generally aimed at the enterprise environment and are built to much higher quality standards. SAS HDDs have rotational speeds of up to 15,000rpm and can handle far more read/write operations per second. Enterprise SSDs using the SAS interface are also much more reliable than their commercial counterpart. The main downside to SAS drives is their cost. | ||

In all production environments, we strongly, strongly recommend SAS-connected drives. For | In all production environments, we strongly, strongly recommend SAS-connected drives. For non-production environments, SATA drives are fine. | ||

==== Extra Security - LSI SafeStore ==== | |||

If security is a particular concern of yours, then you can look at using [https://en.wikipedia.org/wiki/Hardware-based_full_disk_encryption self-encrypting] hard drives along with LSI's [[LSI SafeStore|SafeStore]] option. An example hard drive, which we've tested and validated, would be the Seagate [http://www.seagate.com/internal-hard-drives/enterprise-hard-drives/hdd/enterprise-performance-10K-hdd/ ST1800MM0038] drives. In general, if the drive advertises "[http://www.seagate.com/tech-insights/protect-data-with-seagate-secure-self-encrypting-drives-master-ti/ SED]" support, it should work fine. | |||

RAM is a far simpler topic than storage, thankfully. Here, all you need to do is add up how much RAM you plan to assign to servers, add at least 2 [[GiB]] for the host, and then install that much memory in your nodes. | This provides the ability to: | ||

* Encrypt all data with [https://en.wikipedia.org/wiki/Advanced_Encryption_Standard AES-256] grade encryption without a performance hit. | |||

* Require a pass phrase on boot to decrypt the server's data. | |||

* Protect the contents of the drives while "at rest" (ie: while being shipped somewhere). | |||

* Execute a [https://github.com/digimer/striker/blob/master/tools/anvil-self-destruct self-destruct] sequence. | |||

Obviously, most users won't need this, but it might be useful to some users in sensitive environments like embassies in less than friendly host countries. | |||

=== RAM - Preparing for Degradation === | |||

RAM is a far simpler topic than storage, thankfully. Here, all you need to do is add up how much RAM you plan to assign to servers, add at least 2 [[GiB]] for the host (we recommend 4), and then install that much memory in both of your nodes. | |||
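The sizing rule above is simple arithmetic, sketched here in Python. The function name <span class="code">required_node_ram_gib</span> and the example server sizes are hypothetical; the 2 GiB minimum and 4 GiB recommended host reserve come from the paragraph above.

```python
from typing import Iterable


def required_node_ram_gib(server_ram_gib: Iterable[float],
                          host_reserve_gib: float = 4.0) -> float:
    """RAM to install in EACH node, in GiB.

    Sums the RAM assigned to every server and adds the host's reserve.
    Both nodes need this much, because either node must be able to run
    all servers when its peer is lost.
    """
    if host_reserve_gib < 2.0:
        raise ValueError("reserve at least 2 GiB for the host")
    return float(sum(server_ram_gib)) + host_reserve_gib


if __name__ == "__main__":
    # Three hypothetical servers with 8, 4 and 16 GiB assigned:
    print(required_node_ram_gib([8, 4, 16]))  # 32.0 GiB per node
```

In practice you would round the result up to the next memory configuration your hardware supports.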

In production, there are two technologies you will want to consider; | In production, there are two technologies you will want to consider; | ||

* [[ECC]], error | * [[ECC]], error-correcting code, provides the ability for RAM to recover from single-[[bit]] errors. If you are familiar with how [[TLUG_Talk:_Storage_Technologies_and_Theory#RAID|parity]] in RAID arrays works, ECC in RAM is the same idea. This is often included in server-class hardware by default. It is highly recommended. | ||

* [http://www.fujitsu.com/global/services/computing/server/sparcenterprise/technology/availability/memory.html Memory Mirroring] is, continuing our storage comparison, [[TLUG_Talk:_Storage_Technologies_and_Theory#Level_1|RAID level 1]] for RAM. All writes to memory go to two different chips. Should one fail, the contents of the RAM can still be read from the surviving module. | * [http://www.fujitsu.com/global/services/computing/server/sparcenterprise/technology/availability/memory.html Memory Mirroring] is, continuing our storage comparison, [[TLUG_Talk:_Storage_Technologies_and_Theory#Level_1|RAID level 1]] for RAM. All writes to memory go to two different chips. Should one fail, the contents of the RAM can still be read from the surviving module. | ||

=== Never Over Provision! === | === Never Over-Provision! === | ||

"Over-provisioning", also called "[https://en.wikipedia.org/wiki/Thin_provisioning | "Over-provisioning", also called "[https://en.wikipedia.org/wiki/Thin_provisioning thin provisioning]" is a concept made popular in many "cloud" technologies. It is a concept that has almost no place in HA environments. | ||

A common example is creating virtual disks of a given apparent size, but which only pull space from real storage as needed. So if you created a "thin" virtual disk that was 80 [[GiB]] large, but only 20 GiB worth of data was used, only 20 GiB from the real storage would be used. | A common example is creating virtual disks of a given apparent size, but which only pull space from real storage as needed. So if you created a "thin" virtual disk that was 80 [[GiB]] large, but only 20 GiB worth of data was used, only 20 GiB from the real storage would be used. | ||

In essence; Over-provisioning is where you allocate more resources to servers than the nodes can actually provide, banking on the hopes that most servers will not use all of the | In essence; Over-provisioning is where you allocate more resources to servers than the nodes can actually provide, banking on the hopes that most servers will not use all of the resources allocated to them. The danger here, and the reason it has almost no place in HA, is that if the servers collectively use more resources than the nodes can provide, something is going to crash. | ||

=== CPU Cores - Possibly Acceptable Over-Provisioning ===

Over-provisioning of RAM and storage is never acceptable in an HA environment, as mentioned. Over-allocating CPU cores is possibly acceptable, though.

When selecting which CPUs to use in your nodes, the number of cores and the speed of the cores will determine how much computational horse-power you have to allocate to your servers. The main considerations are:

* Core speed; Any given "[https://en.wikipedia.org/wiki/Thread_%28computing%29 thread]" can be processed by a single CPU core at a time. The faster the given core is, the faster it can process any given request. Many applications do not support [https://en.wikipedia.org/wiki/Thread_%28computing%29#Multithreading multithreading], meaning that the only way to improve performance is to use faster cores, not more cores.

In processing, each CPU "[https://en.wikipedia.org/wiki/Multi-core_processor core]" can handle one program "[https://en.wikipedia.org/wiki/Thread_%28computing%29 thread]" at a time. Since the earliest days of [https://en.wikipedia.org/wiki/Multitasking multitasking], operating systems have been able to handle threads waiting for a CPU resource to free up. So the risk of over-provisioning CPUs is restricted to performance issues only.

If you're building an ''Anvil!'' to support multiple servers and it's important that, no matter how busy the other servers are, the performance of each server cannot degrade, then you need to be sure you have as many real CPU cores as you plan to assign to servers.

So for example, if you plan to have three servers and you plan to allocate each server four virtual CPU cores, you need a minimum of 13 real CPU cores (3 servers x 4 cores each plus at least one core for the node). In this scenario, you will want to choose servers with dual 8-core CPUs, for a total of 16 available real CPU cores. You may choose to buy two 6-core CPUs, for a total of 12 real cores, but you risk congestion still. If all three servers fully utilize their four cores at the same time, the host OS will be left with no available core for its software, which manages the HA stack.
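The arithmetic in this example can be sketched in a few lines. The function names below are hypothetical; the numbers are the ones used in the paragraph above.

```python
def real_cores_needed(servers, vcpus_per_server, host_cores=1):
    """Real cores required so that no server ever waits for a core."""
    return servers * vcpus_per_server + host_cores


def real_cores_available(sockets, cores_per_socket):
    """Physical cores in one node."""
    return sockets * cores_per_socket


needed = real_cores_needed(servers=3, vcpus_per_server=4)
print(needed)  # 13

for label, sockets, cores in (("dual 8-core", 2, 8), ("dual 6-core", 2, 6)):
    have = real_cores_available(sockets, cores)
    verdict = "enough" if have >= needed else "over-provisioned"
    print(f"{label}: {have} real cores, {verdict}")
```

With dual 6-core CPUs the node is one core short, which is exactly the congestion risk described above.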

In many cases, however, risking a performance loss under periods of high CPU load is acceptable. In these cases, allocating more virtual cores than you have real cores is fine. Should the load of the servers climb to a point where all real cores are under 100% utilization, then some applications will slow down as they wait for their turn in the CPU.

In the end, the decision whether to over-provision CPU cores or not, and if so by how much, is up to you, the reader. Remember to consider balancing out faster cores with the number of cores. If your expected load will be short bursts of computationally intense jobs, then few-but-faster cores may be the best solution.

==== A Note on Hyper-Threading ====

Intel's [https://en.wikipedia.org/wiki/Hyper-threading hyper-threading] technology can make a CPU appear to the OS to have twice as many real cores as it actually has. For example, a CPU listed as "4c/8t" (four cores, eight threads) will appear to the node as an 8-core CPU. In fact, you only have four cores and the additional four cores are emulated attempts to make more efficient use of the processing of each core.

Simply put, the idea behind this technology is to "slip in" a second thread when the CPU would otherwise be idle. For example, if the CPU core has to wait for memory to be fetched for the currently active thread, instead of sitting idle, a thread in the second core will be worked on.

How much benefit this gives you in the real world is debatable and highly dependent on your applications. For the purposes of HA, it's recommended to not count the "HT cores" as real cores. That is to say, when calculating load, treat "4c/8t" CPUs as 4-core CPUs.

=== Six Network Interfaces, Seriously? ===

Yes, seriously.

Obviously, you can put everything on a single network card and your HA software will work, but it would not be advised.

We will go into the network configuration at length later on. For now, here's an overview:

* Each network needs two links in order to be fault-tolerant. One link will go to the first network switch and the second link will go to the second network switch. This way, the failure of a network cable, port or switch will not interrupt traffic.

* There are three main networks in an ''Anvil!'':

** Back-Channel Network; This is used by the cluster stack and is sensitive to latency. Delaying traffic on this network can cause the nodes to "partition", breaking the cluster stack.

** Storage Network; All disk writes will travel over this network. As such, it is easy to saturate this network. Sharing this traffic with other services would mean that it's very possible to significantly impact network performance under high disk write loads. For this reason, it is isolated.

** Internet-Facing Network; This network carries traffic to and from your servers. By isolating this network, users of your servers will never experience performance loss during high storage or cluster loads. Likewise, if your users place a high load on this network, it will not impact the ability of the ''Anvil!'' to function properly. It also isolates untrusted network traffic.

So, three networks, each using two links for redundancy, means that we need six network interfaces. It is strongly recommended that you use three separate dual-port network cards. Using a single network card, as we will discuss in detail later, leaves you vulnerable to losing entire networks should the controller fail.

==== A Note on Dedicated IPMI Interfaces ====

Whenever possible, it is recommended that you go with a dedicated IPMI connection.

We've found that it rarely, if ever, is possible for a node to talk to its own network interface using a shared physical port. This is not strictly a problem, but it can certainly make testing and diagnostics easier when the node can ping and query its own IPMI interface over the network.

=== Network Switches ===

The ideal switches to use in HA clusters are [https://en.wikipedia.org/wiki/Stackable_switch stackable] and [https://en.wikipedia.org/wiki/Managed_switch managed] switches in pairs. At the very least, a pair of switches that support [https://en.wikipedia.org/wiki/Virtual_LAN VLANs] is recommended. None of this is strictly required, but here are the reasons they're recommended:

* VLAN allows for totally isolating the [[BCN]], [[SN]] and [[IFN]] traffic. This adds security and reduces broadcast traffic.

* Managed switches provide a unified interface for configuring both switches at the same time. This drastically simplifies complex configurations, like setting up VLANs that span the physical switches.

* Stacking provides a link between the two switches that effectively makes them work like one. Generally, the bandwidth available in the stack cable is much higher than the bandwidth of individual ports. This provides a high-speed link for all three VLANs in one cable and it allows for multiple links to fail without risking performance degradation. We'll talk more about this later.

Beyond these suggested features, there are a few other things to consider when choosing switches:

{|class="wikitable"

|-

|[https://en.wikipedia.org/wiki/Maximum_transmission_unit MTU] size

|
# The default packet size on a network is 1500 [[bytes]]. If you build your VLANs in software, you need to account for the extra size needed for the VLAN header. If your switch supports "[https://en.wikipedia.org/wiki/Jumbo_Frames Jumbo Frames]", then there should be no problem. However, some cheap switches do not support jumbo frames, requiring you to reduce the MTU size value for the interfaces on your nodes.
# If you have particularly large chunks of data to transmit, you may want to enable the largest MTU possible. This maximum value is determined by the smallest MTU in your network equipment. If you have nice network cards that support traditional 9 [[KiB]] MTU, but you have a cheap switch that supports a small jumbo frame, say 4 KiB, your effective MTU is 4 [[KiB]].

|-

|Packets Per Second

|-

|[[Multicast]] Groups

|Some fancy switches, like some Cisco hardware, don't maintain multicast groups persistently. The cluster software uses multicast for communication, so if your switch drops a multicast group, it will cause your cluster to partition. If you have a managed switch, ensure that persistent multicast groups are enabled. We'll talk more about this later.

|-

|Port speed and count versus Internal Fabric Bandwidth

|A switch that has, say, 48 one-[[Gbps]] ports may not be able to route 48 Gbps. This is a problem similar to the over-provisioning we discussed above. If an inexpensive 48 port switch has an internal switch fabric of only 20 Gbps, then it can handle only up to 20 saturated ports at a time. Be sure to review the internal fabric capacity and make sure it's high enough to handle all connected interfaces running full speed. Note, of course, that only one link in a given [[network bonding|bond]] will be active at a time.

|-

|Uplink speed

|If you have a gigabit switch and you simply link the ports between the two switches, the link speed will be limited to 1 gigabit. Normally, all traffic will be kept on one switch, so this is fine. If a single link fails over to the backup switch, then its traffic will bounce up via the uplink cable to the main switch at full speed. However, if a second link fails, both will be sharing the single gigabit uplink, so there is a risk of congestion on the link. If you can't get stacked switches, which generally have 10 Gbps speeds or higher, then look for switches with 10 Gbps dedicated uplink ports and use those for uplinks.

|-

|Uplinks and VLANs

|When using normal ports for uplinks with VLANs defined in the switch, each uplink port will be restricted to the VLAN it is a member of. In this case, you will need one uplink cable per VLAN.

|-

|Port [https://en.wikipedia.org/wiki/Link_aggregation Trunking]

|}

There are numerous other valid considerations when choosing network switches for your ''Anvil!''. These are the most pertinent considerations, though.
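Two of the considerations in the table come down to simple arithmetic: the effective MTU is the smallest MTU along the path, and the switch fabric limits how many ports can be saturated at once. The sketch below is illustrative only; the function names are hypothetical and the figures are the ones used in the table, assuming the 48 ports are 1 Gbps each.

```python
def effective_mtu_kib(device_mtus_kib):
    """The usable MTU is the smallest MTU of any device on the path."""
    return min(device_mtus_kib)


def max_saturated_ports(fabric_gbps, port_gbps=1):
    """How many ports the internal fabric can serve at full speed."""
    return int(fabric_gbps // port_gbps)


def fabric_is_oversubscribed(ports, fabric_gbps, port_gbps=1):
    """True when all ports running flat out would exceed the fabric."""
    return ports * port_gbps > fabric_gbps


# Network cards that support 9 KiB frames behind a switch limited to 4 KiB.
print(effective_mtu_kib([9, 4]))  # 4

# A 48-port gigabit switch with a 20 Gbps internal fabric.
print(max_saturated_ports(fabric_gbps=20))               # 20
print(fabric_is_oversubscribed(ports=48, fabric_gbps=20))  # True
```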

=== Why Switched PDUs? ===

There is a problem with this though. Actually, two.

# The IPMI draws its power from the same power source as the server itself. If the host node loses power entirely, IPMI goes down with the host.

# The IPMI BMC has a single network interface and it is a single device.

If we relied on IPMI-based fencing alone, we'd have a single point of failure. If the surviving node cannot put the lost node into a known state, it will intentionally hang. The logic being that a hung cluster is better than risking corruption or a [[split-brain]]. This means that, with IPMI-based fencing alone, the loss of power to a single node would not be automatically recoverable.

That just will not do!

To make fencing redundant, we will use switched [[PDU]]s. Think of these as network-connected power bars.

Imagine now that one of the nodes blew itself up. The surviving node would try to connect to the lost node's IPMI interface and, of course, get no response. Then it would log into both PDUs (one behind either side of the redundant power supplies) and cut the power going to the node. By doing this, we now have a way of putting a lost node into a known state.

So now, no matter how badly things go wrong, we can always recover!
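The fallback order described above can be sketched as plain logic. This is not how the cluster implements fencing (the real work is done by the cluster's fence agents); the function and its callable arguments are hypothetical stand-ins used only to show the order of operations.

```python
def fence_node(ipmi_power_off, pdu_power_offs):
    """Try IPMI first, then fall back to cutting power at every PDU.

    ipmi_power_off: callable returning True if the BMC confirmed power-off.
    pdu_power_offs: one callable per PDU outlet feeding the lost node;
                    each returns True if that outlet was switched off.
    Returns the name of the method that succeeded.
    """
    if ipmi_power_off():
        return "ipmi"

    # The node has redundant power supplies, so EVERY outlet must be
    # cut before the node can be considered off. Call them all.
    results = [power_off() for power_off in pdu_power_offs]
    if results and all(results):
        return "pdu"

    # Without a confirmed fence, the cluster must stay blocked.
    raise RuntimeError("fencing failed; node state is unknown")


# A node that "blew itself up": its BMC is dead, but both PDUs respond.
print(fence_node(lambda: False, [lambda: True, lambda: True]))  # pdu
```

Raising an error when no method succeeds mirrors the behaviour described earlier: a hung cluster is preferable to guessing at the state of the lost node.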

=== Network Managed UPSes Are Worth It ===

We have found that a surprising number of issues that affect service availability are power related. A network-connected smart UPS allows you to monitor the power coming from the building mains. Thanks to this, we've been able to detect far more than simple "lost power" events. We've been able to detect failing transformers and regulators, over and under voltage events and so on. These are events that, if caught ahead of time, let you avoid full power outages. It also protects the rest of your gear that isn't behind a UPS.

So strictly speaking, you don't need network managed UPSes. However, we have found them to be [http://www.wolframalpha.com/share/clip?f=d41d8cd98f00b204e9800998ecf8427ebgdg6ijlui worth their weight in gold]. We will, of course, be using them in this tutorial.

=== Dashboard Servers ===

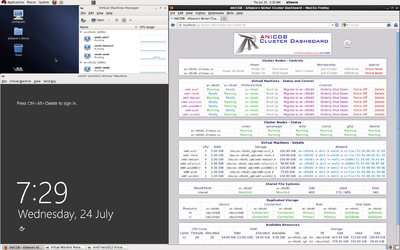

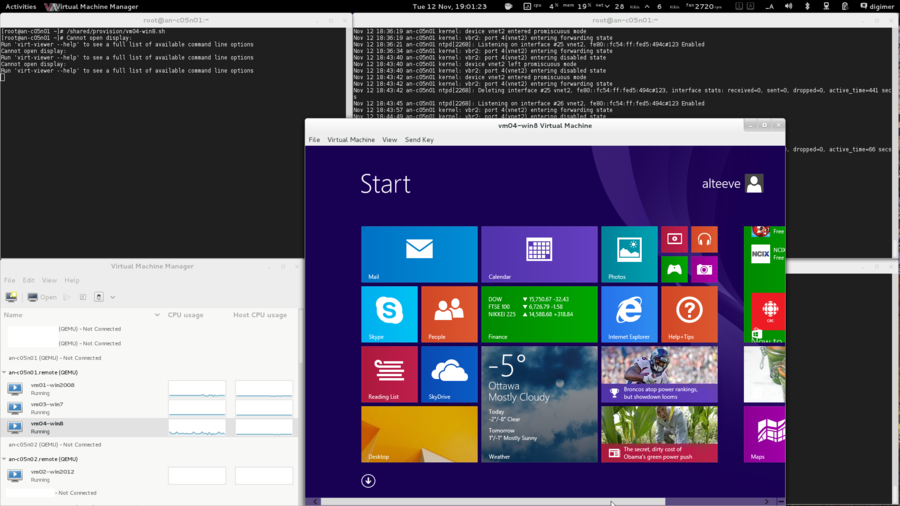

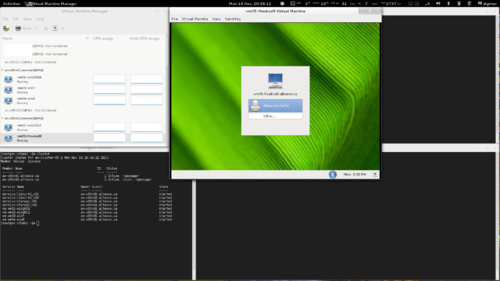

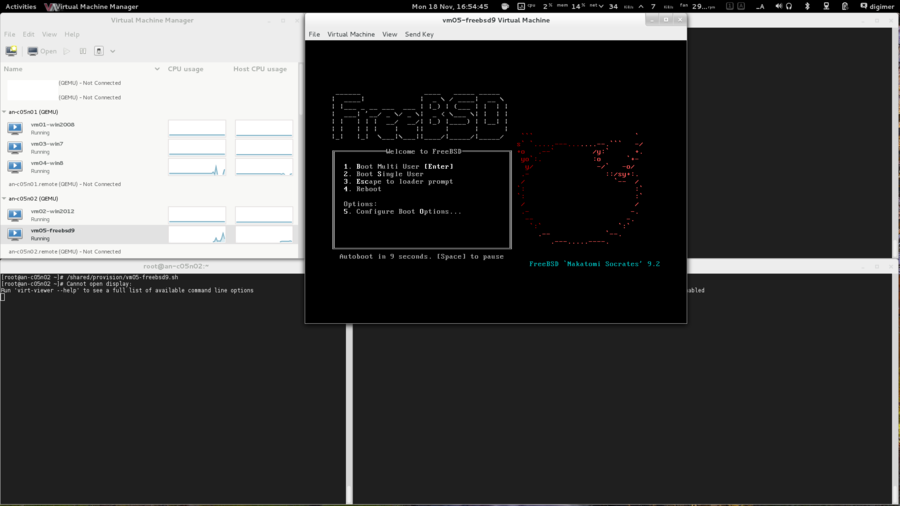

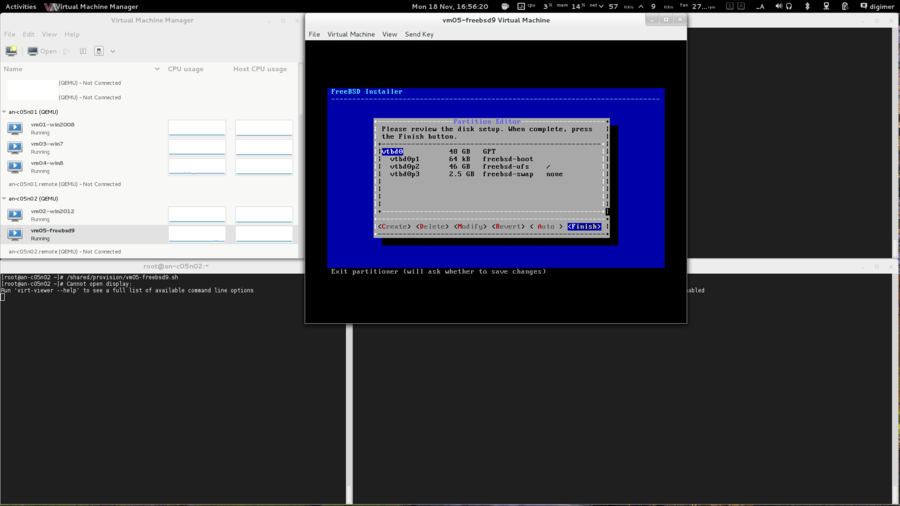

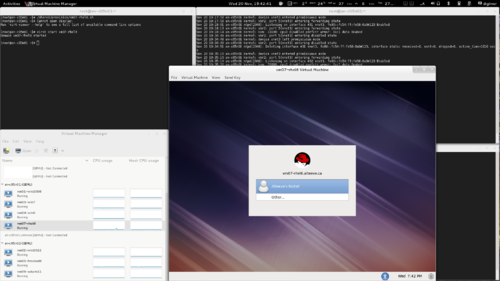

The ''Anvil!'' will be managed by [[Striker - Cluster Dashboard]], running on a small dedicated server. This can be a virtual machine on a laptop or desktop, or a dedicated little server. All that matters is that it can run [[RHEL]] or [[CentOS]] version 6 with a minimal desktop.

Normally, we set up a couple of [http://www.asus.com/ca-en/Eee_Box_PCs/EeeBox_PC_EB1033 ASUS EeeBox] machines, for redundancy of course, hanging off the back of a monitor. Users can connect to the dashboard using a browser from any device and control the servers and nodes easily from it. It also provides [https://en.wikipedia.org/wiki/KVM_switch KVM-like] access to the servers on the ''Anvil!'', allowing them to work on the servers when they can't connect over the network. For this reason, you will probably want to pair up the dashboard machines with a monitor that offers a decent resolution to make it easy to see the desktop of the hosted servers.

== What You Should Know Before Beginning ==

Patience is vastly more important than any pre-existing skill.

== A Word on Complexity ==

Introducing the <span class="code">Fabimer principle</span>:

Clustering is not inherently hard, but it is inherently complex. Consider:

** [[RHCS]] uses; <span class="code">cman</span>, <span class="code">corosync</span>, <span class="code">dlm</span>, <span class="code">fenced</span>, <span class="code">rgmanager</span>, and many more smaller apps.

** We will be adding <span class="code">DRBD</span>, <span class="code">GFS2</span>, <span class="code">clvmd</span>, <span class="code">libvirtd</span> and <span class="code">KVM</span>.

** Right there, we have <span class="code">N * 10</span> possible bugs. We'll call this <span class="code">A</span>.

* A cluster has <span class="code">Y</span> nodes.

** In our case, <span class="code">2</span> nodes, each with <span class="code">3</span> networks across <span class="code">6</span> interfaces bonded into pairs.

** The network infrastructure (Switches, routers, etc). We will be using two managed switches, adding another layer of complexity.

** This gives us another <span class="code">Y * (2*(3*2))+2</span>, the <span class="code">+2</span> for managed switches. We'll call this <span class="code">B</span>.

* Let's add the human factor. Let's say that a person needs roughly 5 years of cluster experience to be considered proficient. For each year less than this, add to the "oops" factor; with <span class="code">Z</span> years of experience, this is <span class="code">(5-Z) * 2</span>. We'll call this <span class="code">C</span>.

* So, finally, add up the complexity, using this tutorial's layout, 0-years of experience and managed switches.

** <span class="code">(N * 10) * (Y * (2*(3*2))+2) * ((5-0) * 2) == (A * B * C)</span> == an-unknown-but-big-number.

This isn't meant to scare you away, but it is meant to be a sobering statement. Obviously, those numbers are somewhat artificial, but the point remains.
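For the curious, the tongue-in-cheek formula above can be evaluated directly. The sketch below assumes <span class="code">N = 10</span>, which simply counts the ten components named in the list (the "many more smaller apps" are ignored); that value, like the function name, is an assumption made for illustration.

```python
def fabimer_complexity(n_apps, nodes, years_experience, managed_switches=2):
    """Evaluate A * B * C from the list above."""
    a = n_apps * 10                                  # possible bugs
    b = nodes * (2 * (3 * 2)) + managed_switches     # nodes, networks, switches
    c = (5 - years_experience) * 2                   # the human "oops" factor
    return a * b * c


# Ten named components, two nodes, zero years of experience.
print(fabimer_complexity(n_apps=10, nodes=2, years_experience=0))  # 26000
```

The absolute value means nothing, but watching it shrink as <span class="code">years_experience</span> grows makes the point of the exercise.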

* Clustering is easy, but it has a complex web of inter-connectivity. You must grasp this network if you want to be an effective cluster administrator!

== Component; Cman ==

The <span class="code">cman</span> portion of the cluster is the '''c'''luster '''man'''ager. In the 3.0 series used in [[EL6]], <span class="code">cman</span> acts mainly as a [[quorum]] provider. That is, it adds up the votes from the cluster members and decides if there is a simple majority. If there is, the cluster is "quorate" and is allowed to provide cluster services.

The <span class="code">cman</span> service will be used to start and stop all of the components needed to make the cluster operate.

== Component; Corosync ==

Corosync is the heart of the cluster. Almost all other cluster components operate through this.

In Red Hat clusters, <span class="code">corosync</span> is configured via the central <span class="code">cluster.conf</span> file. In other cluster stacks, like pacemaker, it can be configured directly in <span class="code">corosync.conf</span>, but given that we will be building an RHCS cluster, this is not used. We will only use <span class="code">cluster.conf</span>. That said, almost all <span class="code">corosync.conf</span> options are available in <span class="code">cluster.conf</span>. This is important to note as you will see references to both configuration files when searching the Internet.

Corosync sends messages using [[multicast]] messaging by default. Recently, [[unicast]] support has been added, but due to network latency, it is only recommended for use with small clusters of two to four nodes. We will be using [[multicast]] in this tutorial.

=== A Little History ===

Please see this article for a better discussion on the history of HA:

* [[High-Availability Clustering in the Open Source Ecosystem]]

There were significant changes between the old [[RHCS]] version 2 and version 3, available on [[EL6]], which we are using.

In [[EL6]], <span class="code">corosync</span> is version 1.4. Upstream, however, it's passed version 2. One of the major changes in the 2+ version is that <span class="code">corosync</span> becomes a quorum provider, helping to remove the need for <span class="code">cman</span>. If you experiment with clustering on [[Fedora]], for example, you will find that <span class="code">cman</span> is gone entirely.

== Concept; Quorum ==

[[Quorum]] is defined as the minimum set of hosts required in order to provide clustered services and is used to prevent [[split-brain]] situations.

The idea behind quorum is that, when a cluster splits into two or more partitions, whichever group of machines has quorum can safely start clustered services knowing that no other lost nodes will try to do the same.
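The simple-majority test behind quorum can be sketched as follows. This is an illustration of the rule only, not the cluster manager's actual code, and the function name is hypothetical.

```python
def is_quorate(votes_present, total_votes):
    """A partition is quorate when it holds strictly more than half the votes."""
    return votes_present > total_votes / 2


# A four-node cluster, one vote per node.
print(is_quorate(3, 4))  # True:  a 3-node partition may run services
print(is_quorate(2, 4))  # False: an even split leaves neither side quorate
print(is_quorate(1, 4))  # False
```

Because the comparison is strict, two partitions can never both be quorate at the same time.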

Take this scenario:

* You have a cluster of four nodes, each with one vote.


This is provided by <span class="code">corosync</span> using "closed process groups", <span class="code">[[CPG]]</span>. A closed process group is simply a private group of processes in a cluster. Within this closed group, all messages between members are ordered. Delivery, however, is not guaranteed. If a member misses messages, it is up to the member's application to decide what action to take.

Let's look at two scenarios showing how locks are handled using CPG:

* The cluster starts up cleanly with two members.
* Both members are able to start <span class="code">service:foo</span>.
* Both want to start it, but need a lock from [[DLM]] to do so.
** The <span class="code">an-a05n01</span> member has its totem token, and sends its request for the lock.
** DLM issues a lock for that service to <span class="code">an-a05n01</span>.
** The <span class="code">an-a05n02</span> member requests a lock for the same service.
** DLM rejects the lock request.
* The <span class="code">an-a05n01</span> member successfully starts <span class="code">service:foo</span> and announces this to the CPG members.
* The <span class="code">an-a05n02</span> member sees that <span class="code">service:foo</span> is now running on <span class="code">an-a05n01</span> and no longer tries to start the service.

* The two members want to write to a common area of the <span class="code">/shared</span> GFS2 partition.
** The <span class="code">an-a05n02</span> member sends a request for a DLM lock against the filesystem and receives it.
** The <span class="code">an-a05n01</span> member sends a request for the same lock, but DLM sees that a lock is pending and rejects the request.
** The <span class="code">an-a05n02</span> member finishes altering the filesystem, announces the changes over CPG and releases the lock.
** The <span class="code">an-a05n01</span> member updates its view of the filesystem, requests a lock, receives it and proceeds to update the filesystem.
** It completes the changes, announces the changes over CPG and releases the lock.
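The grant, reject and release pattern in the second scenario can be modelled with a toy lock manager. This is a rough sketch in Python under simplifying assumptions: it runs in one process, it does not model CPG announcements, and <span class="code">ToyLockManager</span> is a hypothetical class for illustration, not the DLM interface.

```python
class ToyLockManager:
    """Grants each named lock to at most one holder at a time."""

    def __init__(self):
        self._holders = {}  # lock name -> node currently holding it

    def request(self, lock_name, node):
        """Grant the lock if it is free; reject the request otherwise."""
        if lock_name in self._holders:
            return False
        self._holders[lock_name] = node
        return True

    def release(self, lock_name, node):
        """Release a lock; only the current holder may do so."""
        if self._holders.get(lock_name) != node:
            raise ValueError(f"{node} does not hold {lock_name}")
        del self._holders[lock_name]


def shared_write_scenario():
    """Replay the /shared scenario and record each lock request result."""
    dlm = ToyLockManager()
    results = []
    results.append(("an-a05n02", dlm.request("/shared", "an-a05n02")))
    results.append(("an-a05n01", dlm.request("/shared", "an-a05n01")))
    dlm.release("/shared", "an-a05n02")  # an-a05n02 finished its changes
    results.append(("an-a05n01", dlm.request("/shared", "an-a05n01")))
    dlm.release("/shared", "an-a05n01")  # an-a05n01 finished its changes
    return results


if __name__ == "__main__":
    for node, granted in shared_write_scenario():
        print(node, "granted" if granted else "rejected")
```

The second request is rejected because the lock is still held, and the same node succeeds once the first holder releases it.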


We prefer the term "fencing" because the fundamental goal is to put the target node into a state where it cannot affect cluster resources or provide clustered services. This can be accomplished by powering it off, called "power fencing", or by disconnecting it from SAN storage and/or network, a process called "fabric fencing".

The term "STONITH", based on its acronym, implies power fencing. This is not a big deal, but it is the reason this tutorial sticks with the term "fencing".

== Component; Totem ==

The <span class="code">[[totem]]</span> protocol defines message passing within the cluster and it is used by <span class="code">corosync</span>. A token is passed around all the nodes in the cluster, and nodes can only send messages while they have the token. A node will keep its messages in memory until it gets the token back with no "not ack" messages. This way, if a node missed a message, it can request it be resent when it gets its token. If a node isn't up, it will simply miss the messages.
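The "only the token holder may send" rule can be illustrated with a small Python simulation. This is a deliberately simplified sketch: it ignores the "not ack" handling, retransmission and node failure described above, and the function <span class="code">circulate_token</span> and its arguments are invented for this example.

```python
from collections import deque


def circulate_token(nodes, outboxes, rotations=1):
    """Pass a token around nodes in ring order and collect sent messages.

    Only the node currently holding the token may send, so every member
    would see the messages in the same order.
    """
    delivered = []
    for _ in range(rotations):
        for holder in nodes:  # the token visits each node in turn
            queue = outboxes.get(holder, deque())
            while queue:
                delivered.append((holder, queue.popleft()))
    return delivered


if __name__ == "__main__":
    ring = ["an-a05n01", "an-a05n02"]
    pending = {
        "an-a05n02": deque(["b1"]),
        "an-a05n01": deque(["a1", "a2"]),
    }
    for sender, message in circulate_token(ring, pending):
        print(sender, message)
```

Because sending is tied to token possession, the order of messages follows the order in which the token travels around the ring, not the order in which nodes queued them.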


The <span class="code">totem</span> protocol supports something called '<span class="code">rrp</span>', '''R'''edundant '''R'''ing '''P'''rotocol. Through <span class="code">rrp</span>, you can add a second backup ring on a separate network to take over in the event of a failure in the first ring. In RHCS, these rings are known as "<span class="code">ring 0</span>" and "<span class="code">ring 1</span>". RRP is being re-introduced in RHCS version 3. It is experimental and should only be used after plenty of testing.

== Component; Rgmanager ==

When the cluster membership changes, <span class="code">corosync</span> tells the <span class="code">rgmanager</span> that it needs to recheck its services. It will examine what changed and then will start, stop, migrate or recover cluster resources as needed.


[[Pacemaker]] is also a resource manager, like rgmanager. You cannot use both in the same cluster.

Prior to 2008, there were two distinct open-source cluster projects:

* Red Hat's Cluster Service
* Linux-HA's Heartbeat

Pacemaker was born out of the Linux-HA project as an advanced resource manager that could use either heartbeat or openais for cluster membership and communication. Unlike RHCS and heartbeat, its sole focus was resource management.

In 2008, plans were made to begin the slow process of merging the two independent stacks into one. As mentioned in the corosync overview, it replaced openais and became the default cluster membership and communication layer for both RHCS and Pacemaker. Development of heartbeat was ended, though [http://www.linbit.com/en/company/news/125-linbit-takes-over-heartbeat-maintenance Linbit] continues to maintain the heartbeat code to this day.


Red Hat introduced pacemaker as "Tech Preview" in [[RHEL]] 6.0. It has been available beside RHCS ever since, though support is not offered yet[https://access.redhat.com/site/documentation/en-US/Red_Hat_Enterprise_Linux/6-Beta/html/Configuring_the_Red_Hat_High_Availability_Add-On_with_Pacemaker/ *].

{{note|1=Pacemaker entered full support with the release of RHEL 6.5. It is also the only available HA stack on RHEL 7 beta. This is a strong indication that, indeed, corosync and pacemaker will be the future HA stack on RHEL.}}

Red Hat has a strict policy of not saying what will happen in the future. That said, the speculation is that Pacemaker will become supported soon and will replace rgmanager entirely in RHEL 7, given that cman and rgmanager no longer exist upstream in Fedora.


We believe that, no matter how promising software looks, stability is king. Pacemaker on other distributions has been stable and supported for a long time. However, on RHEL, it's a recent addition and the developers have been doing a tremendous amount of work on pacemaker and associated tools. For this reason, we feel that on RHEL 6, pacemaker is too much of a moving target at this time. That said, we do intend to switch to pacemaker some time in the next year or two, depending on how the Red Hat stack evolves.

== Component; Qdisk ==

{{note|1=<span class="code">qdisk</span> does not work reliably on a DRBD resource, so we will not be using it in this tutorial.}}


Think of it this way;

With traditional software raid, you would take:

* <span class="code">/dev/sda5</span> + <span class="code">/dev/sdb5</span> -> <span class="code">/dev/md0</span>

With DRBD, you have this:

* <span class="code">node1:/dev/sda5</span> + <span class="code">node2:/dev/sda5</span> -> <span class="code">both:/dev/drbd0</span>


The main difference with DRBD is that the <span class="code">/dev/drbd0</span> will always be the same on both nodes. If you write something to node 1, it's instantly available on node 2, and vice versa. Of course, this means that whatever you put on top of DRBD has to be "cluster aware". That is to say, the program or file system using the new <span class="code">/dev/drbd0</span> device has to understand that the contents of the disk might change because of another node.
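As a loose mental model only, the "same on both nodes" behaviour can be pictured as a device that applies every write to both replicas before returning. The Python sketch below is an in-memory toy, assuming two replicas labelled <span class="code">node1</span> and <span class="code">node2</span>; the class <span class="code">ToyMirroredDevice</span> is hypothetical and says nothing about how DRBD is actually implemented.

```python
class ToyMirroredDevice:
    """Two in-memory replicas that are always written together."""

    def __init__(self, block_count):
        # One list of blocks per node; both start out empty.
        self._replicas = {
            "node1": [b""] * block_count,
            "node2": [b""] * block_count,
        }

    def write(self, block, data):
        """Apply the write to every replica before returning."""
        for blocks in self._replicas.values():
            blocks[block] = data

    def read(self, node, block):
        """Read a block as seen from the given node."""
        return self._replicas[node][block]


if __name__ == "__main__":
    dev = ToyMirroredDevice(block_count=4)
    dev.write(2, b"hello")
    print(dev.read("node1", 2), dev.read("node2", 2))
```

Note that a reader on either node can see a block change without having written it, which is the reason anything layered on top must be cluster aware.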

== Component; DLM ==

One of the major roles of a cluster is to provide [[DLM|distributed locking]] for clustered storage and resource management.

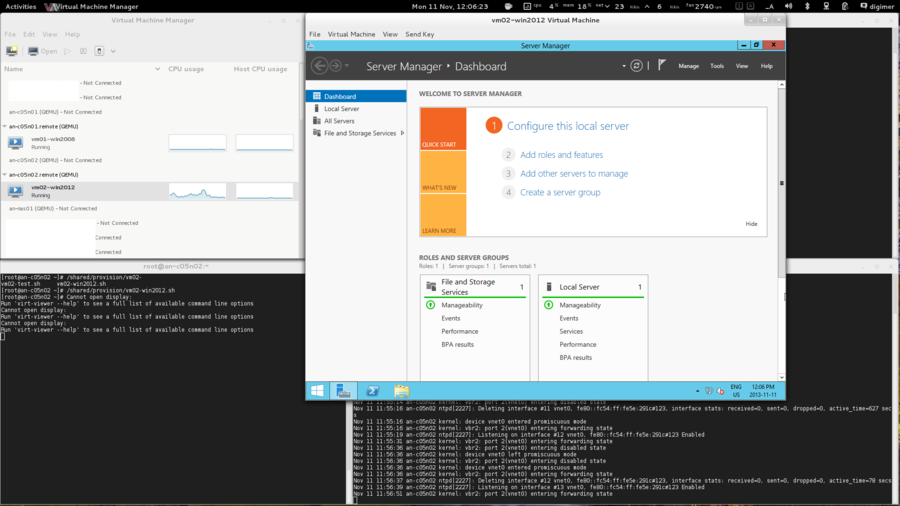

Whenever a resource, GFS2 filesystem or clustered LVM LV needs a lock, it sends a request to <span class="code">dlm_controld</span>, which runs in userspace. This communicates with DLM in the kernel. If the lockspace does not yet exist, DLM will create it and then give the lock to the requester. Should a subsequent lock request come in for the same lockspace, it will be rejected. Once the application using the lock is finished with it, it will release the lock. After this, another node may request and receive a lock for the lockspace.